Executive Summary

To provide even more actionable data, the findings are broken down by country, industry sector, and protocol, with special sections on the effect of the pandemic and technological shifts like the movement to the cloud. This helps diagnose what is vulnerable, what is improving or getting worse, and what solutions are available for policymakers, business leaders, and innovators to make the internet more secure.

The Global Pandemic, As Seen From the Internet

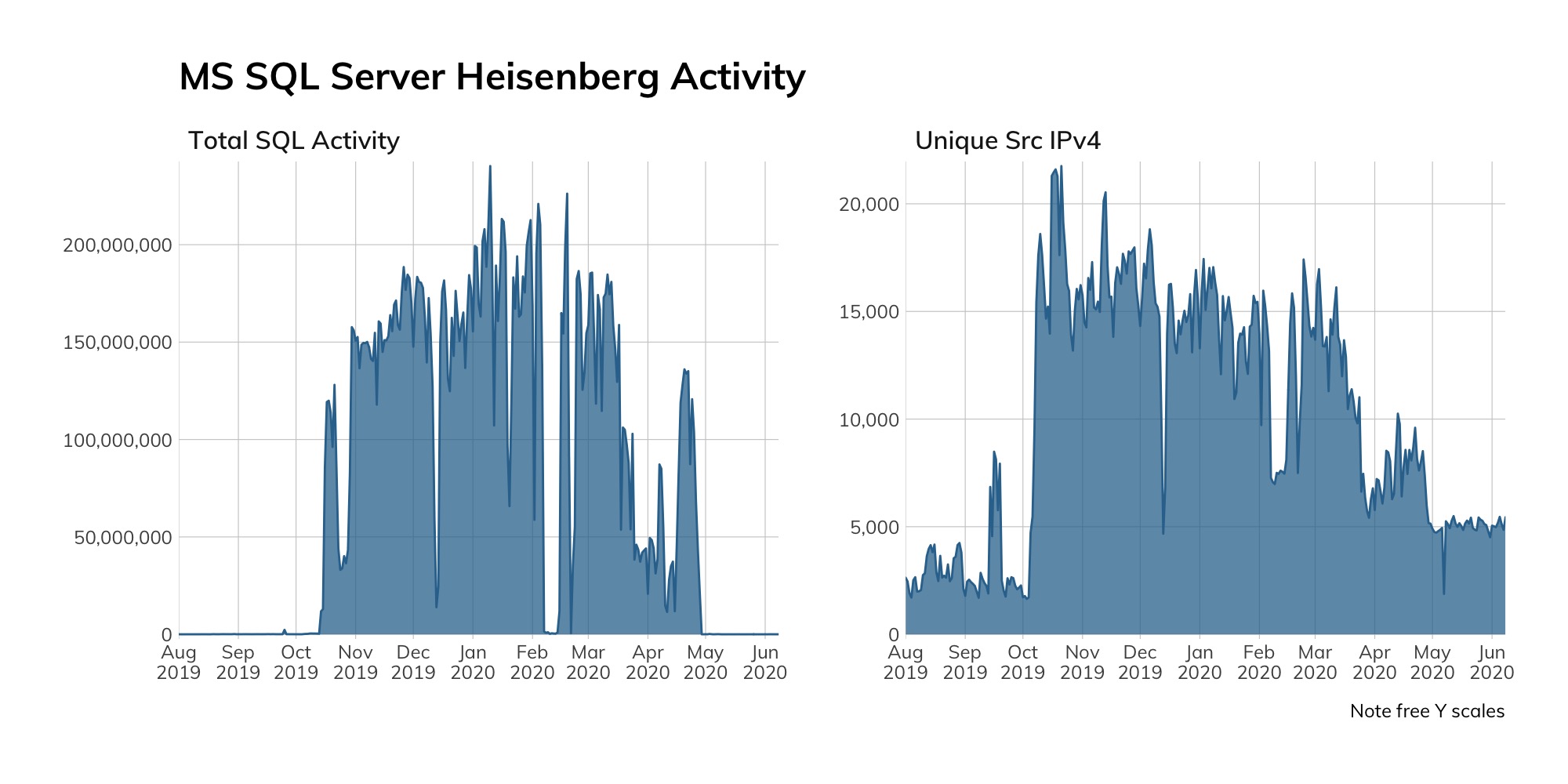

By the middle of 2020, the entire world has been coping with a virus outbreak of the sort we don't usually cover in computer science fields: the biological pandemic of COVID-19[1] and the nearly immediate economic recession following the resultant lockdown. At Rapid7, we were already planning on producing another survey of the internet and the state of security worldwide, but we now have a unique opportunity to capture this unprecedented period of tumult as it reshapes our world in sudden, chaotic ways.

The first question we tasked ourselves with answering was, "How did the pandemic, lockdown, and job loss affect the character and composition of the internet?" We expected to see a renaissance of poorly configured, hastily deployed, and wholly insecure services dropped on the public internet, as people scrambled to “just make things work” once they were locked out of their offices, coworking spaces, and schools, and suddenly shifted to work-from-home and study-at-home models. Fears of thousands of new Windows SMB services for file sharing between work and home, rsync servers collecting backup data across the internet, and unconfigured IoT devices offering Telnet-based consoles haunted us as we started to collect the April and May data for this project.

But, the year 2020 is nothing if not full of surprises—even pleasant ones! Indeed, we found that the populations of grossly insecure services such as SMB, Telnet, and rsync, along with the core email protocols, actually decreased from the levels seen in 2019, while more secure alternatives to insecure protocols, like SSH (Secure Shell) and DoT (DNS-over-TLS) increased overall. So, while there are regional differences and certainly areas with troubling levels of exposure—which we explore in depth in this paper—the internet as a whole seems to be moving in the right direction when it comes to secure versus insecure services.

This is a frankly shocking finding. The global disasters of disease and recession, along with the uncertainty they bring, appear to have had no obvious effect on the fundamental nature of the internet. It is possible that this is because we have yet to see the full impact of the pandemic, recession, and greater adoption of remote working. Rapid7 will continue to monitor and report as things develop.

The Myth of the Silver City

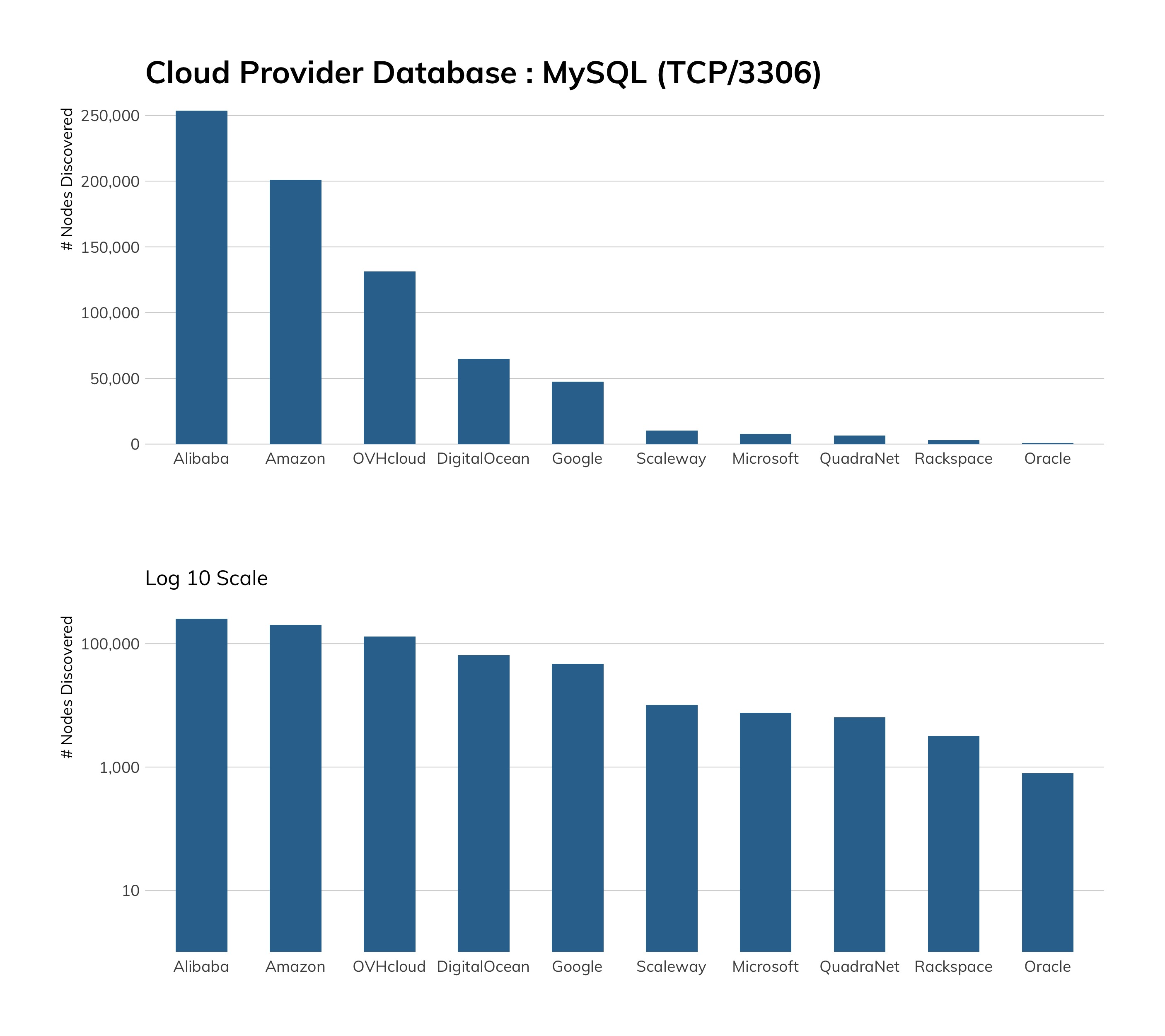

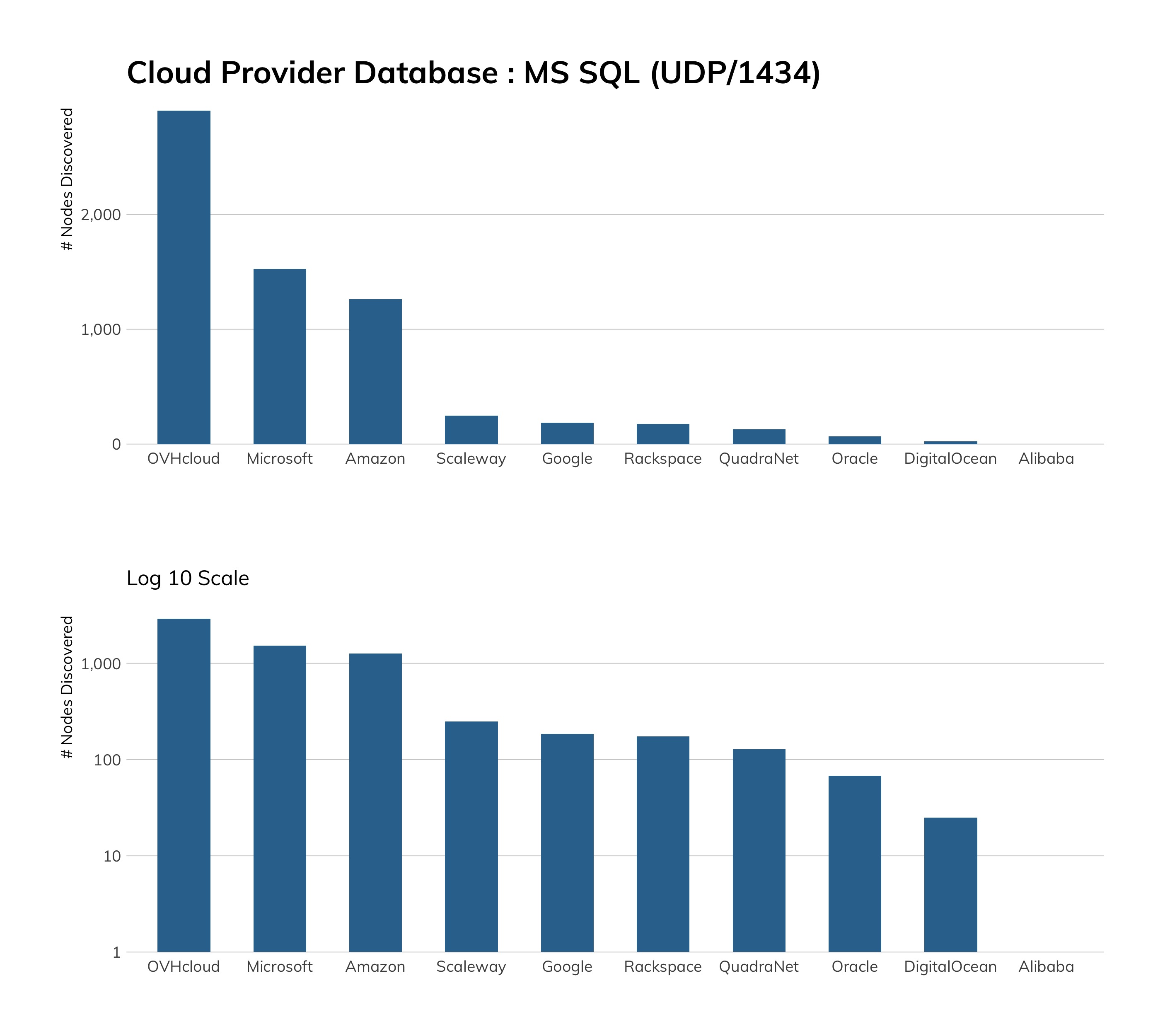

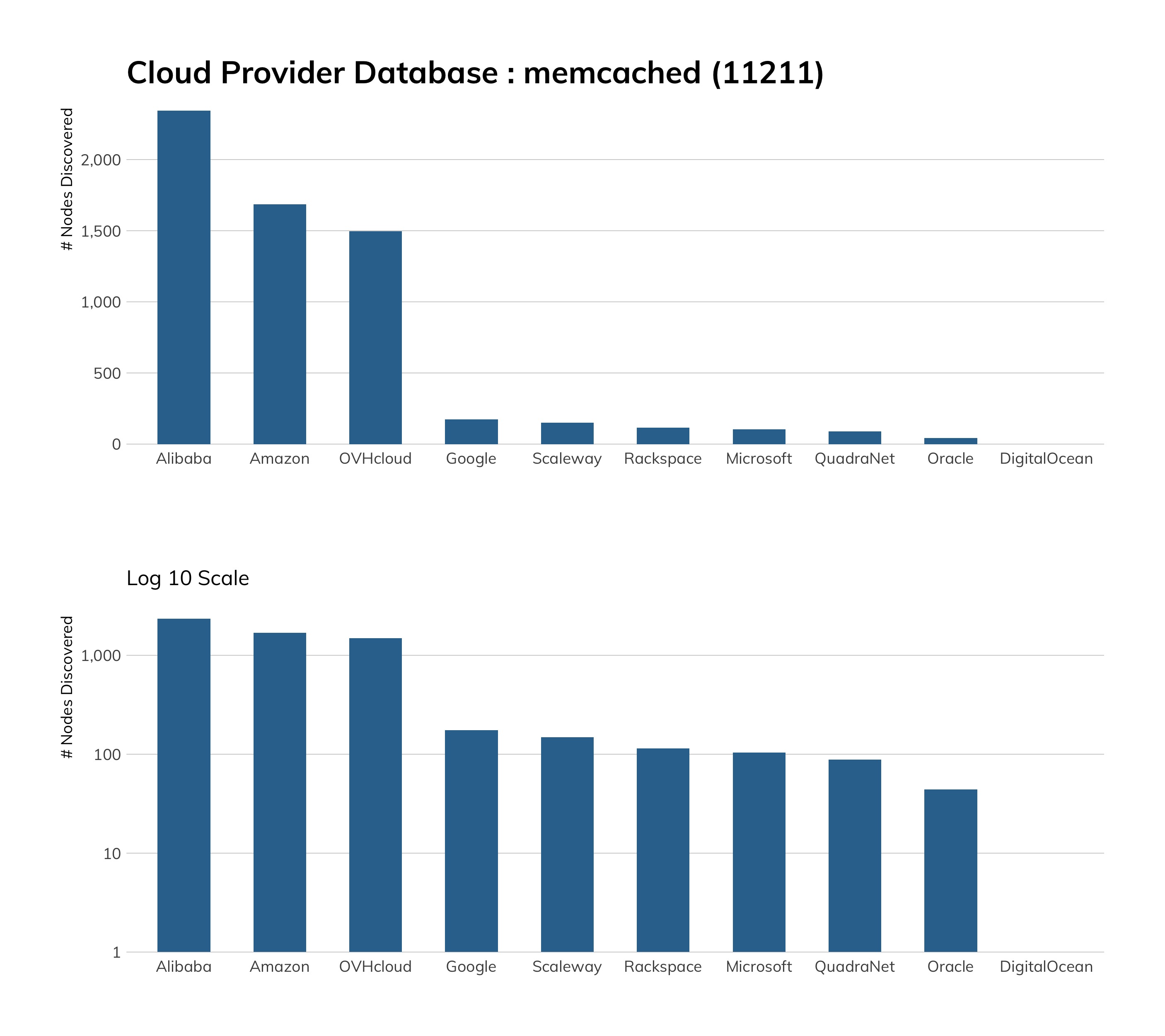

Meanwhile, the cloud is home to more “internet stuff” than ever before. Platform-as-a-service (PaaS) and infrastructure-as-a-service (IaaS) providers enable even small organizations to launch professionally managed and maintained server infrastructure to run every sort of internet-based venture you can think of. Given the good news of a decrease of insecure-by-design services on the internet, we expected to find that cloud providers were responsible for this decrease. Perhaps naively, we imagined that the global leaders in cloud provisioning were constructing glittering silver cities in the sky, resplendent with the perfect architecture of containerized software deployments, all secure by design.

This was not the case. It turns out that cloud providers are often dogged by the same problems that traditional, on-premises IT shops struggle with; the easy path is not necessarily the secure path to getting internet services configured and up and running, even in those providers’ own documentation and defaults. Furthermore, there seems to be a fairly common “set it and forget it” mindset among many users of these services, given the number of unpatched or out-of-date instances of Ubuntu (and other Linux distributions), along with their associated installed services, in these networks. This finding was a sobering reminder that the security of the internet still trails the desire to just get things working, and working quickly.

National Rankings

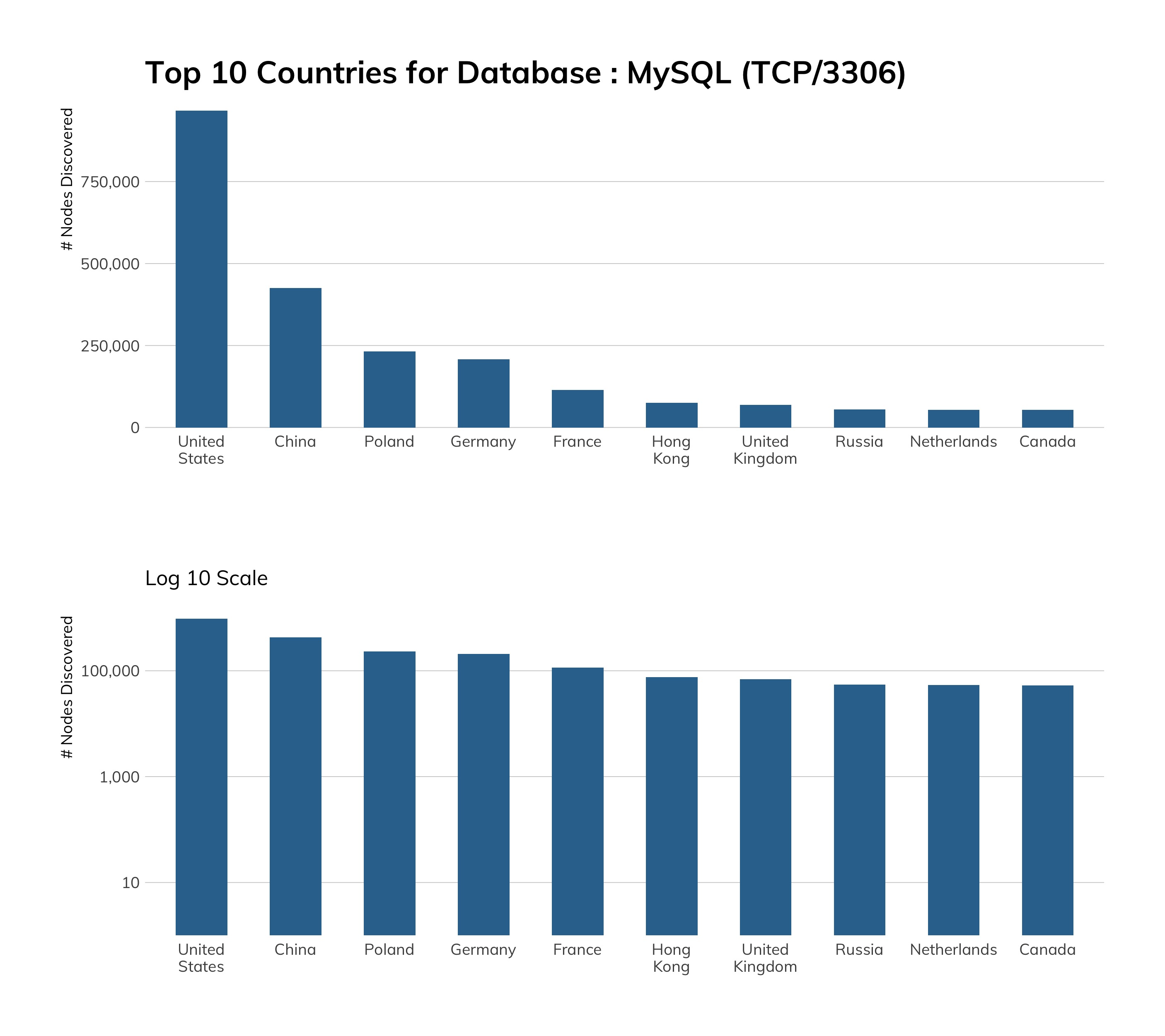

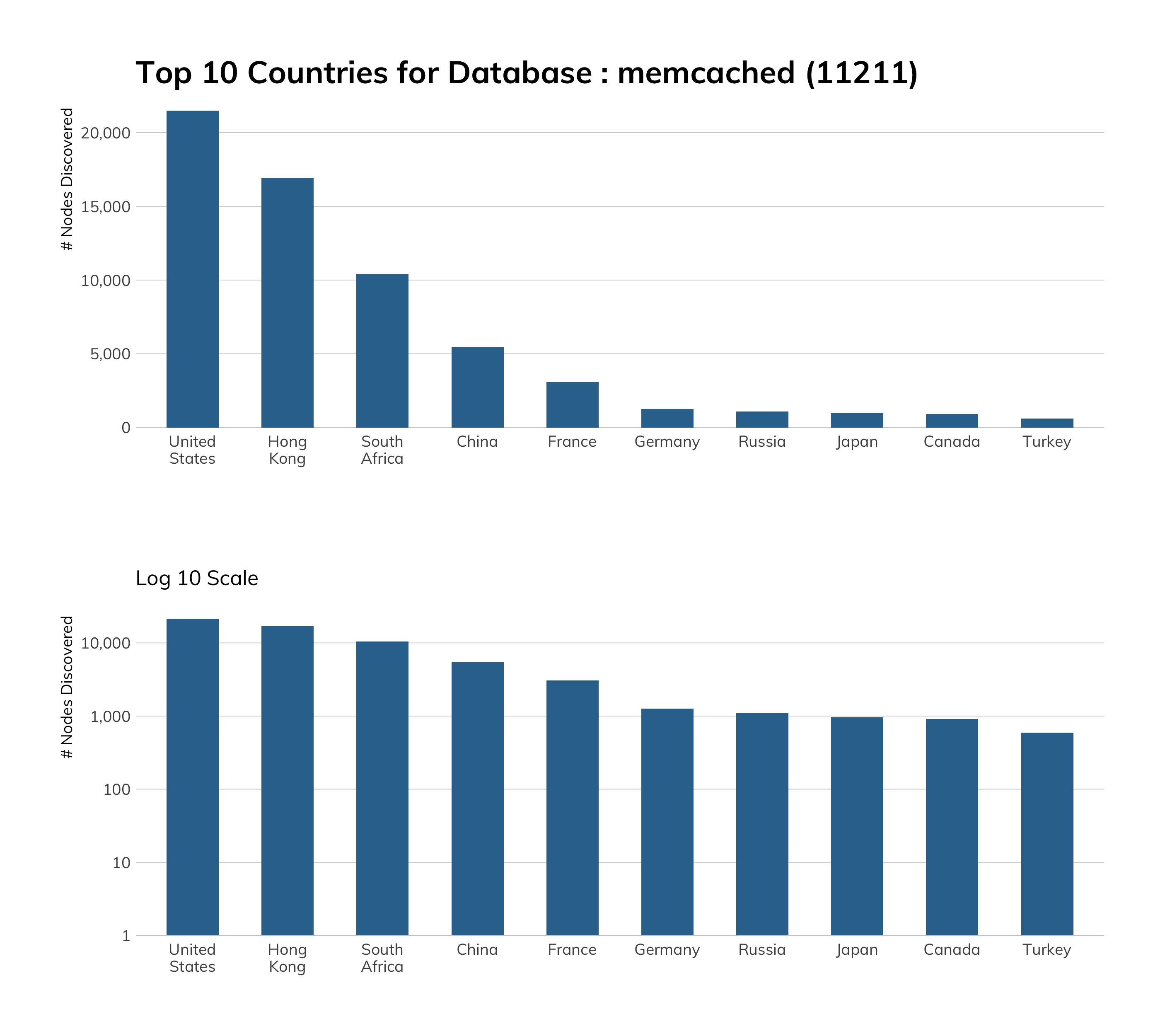

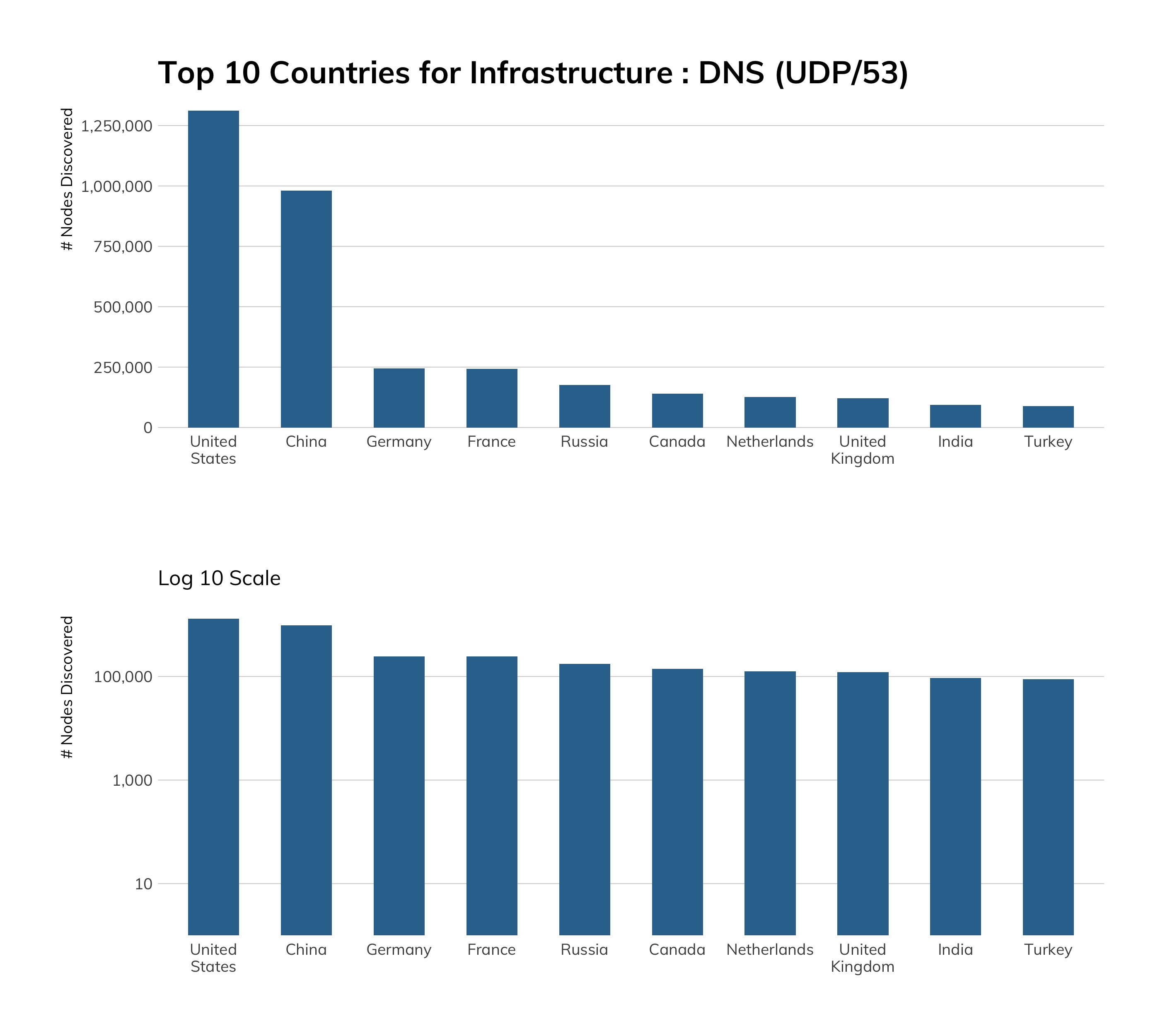

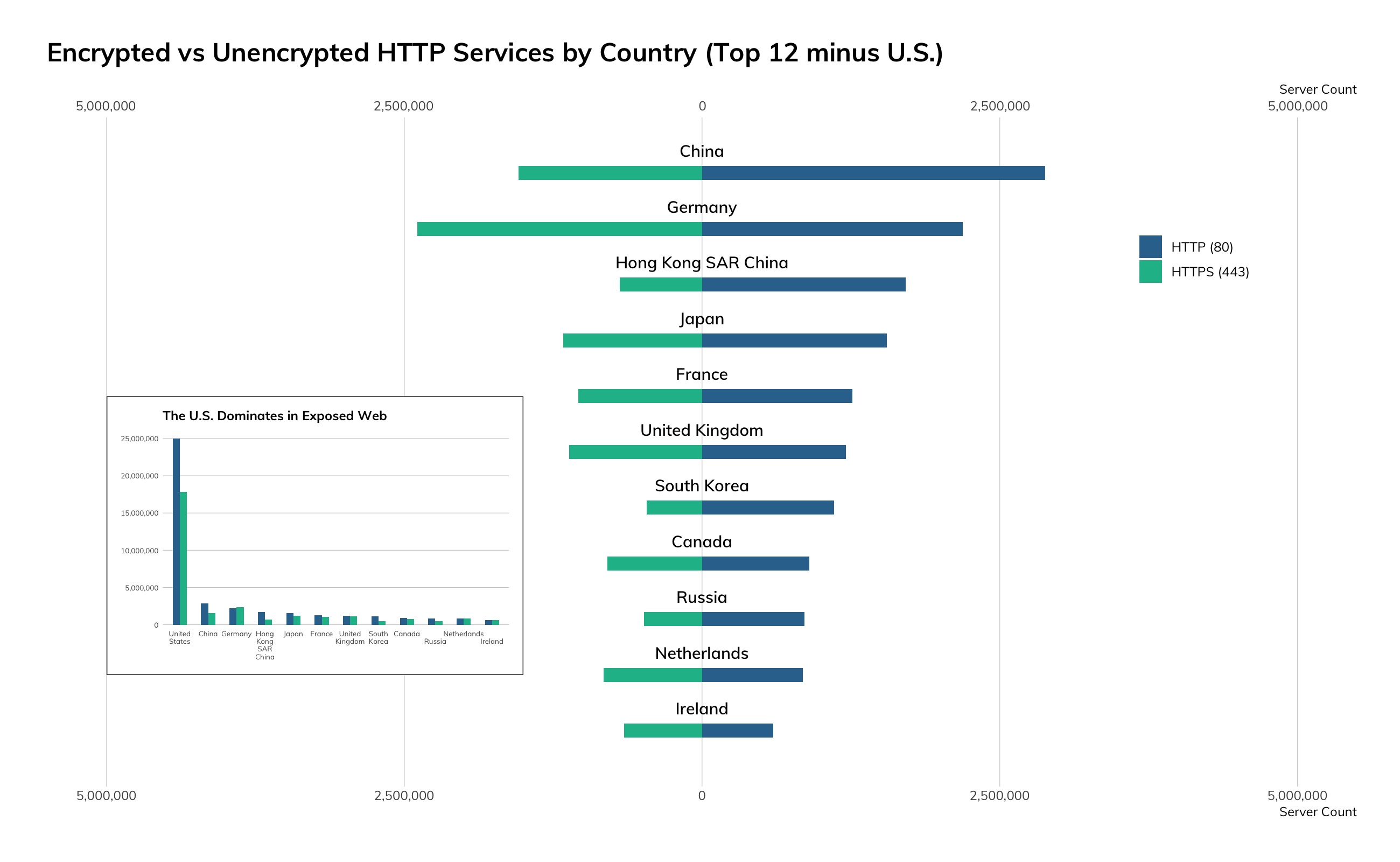

While network operators may know exactly what the autonomous system “AS4809” refers to, most of the rest of us do not. As such, it is much easier to just refer to the computers there as simply being “in China” or “in networks attributed or allocated to China.” These network assignments, along with other characteristics, are what IP geolocation services use to attribute a given IP address to a given location. We’ve chosen to use “country” as the attribution feature since it is the most accurate out of all possible fields.

While we use terms like “nation” and “country” interchangeably to describe the regions discussed in this report, we occasionally refer to other named regions on Earth as well, without making any distinction as to their political statuses as special administrative regions, scientific preserves, or claimed territories of other countries. The rule for inclusion is simply, “Does the region have an ISO 3166-1 alpha-3 country code?”[2] If so, it's a “country” or “nation” for our research purposes, regardless of the political realities of the day. For example, regions like “Hong Kong” (HKG) can and do appear in this research (Hong Kong has some interesting features when it comes to exposure), while “California” does not, even though it is home to plenty of poorly configured internet-connected devices.

In case you're reading this report and wondering only what the "most exposed" countries in the world are, we hope to save you the trouble by providing you with the discussion below and the findings in Table 1.

Most Exposed Countries

Table 1 shows the most exposed countries. A country is ranked higher on the list (i.e., has worse exposure) based on:

- Total attack surface (i.e., number of total IPv4s in use exposing something during the study period). Rationale: More stuff = more stuff to attack.

- Total exposure of selected services, specifically SMB, SQL Server, and Telnet. Rationale: These should never be exposed. Ever.

- Distinct number of CVEs present across all services. Rationale: More known vulnerabilities = more exposure.

- The center of the distribution of vulnerability rates. The vulnerability rate is defined as the number of exposed services with vulnerabilities divided by the total number of exposed services. Rationale: Higher vulnerability concentration across all exposed services should contribute more to the rank penalty.

- Maximum vulnerability rate. Rationale: To break any ties that remain after the previous steps, penalize a nation state with the highest vulnerability rate.
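One way to read the criteria above is as a multi-key sort, where each later criterion breaks ties left by the earlier ones. The following is a minimal sketch under that assumption, not the exact method used to build Table 1. The `CountryExposure` record, its field names, the use of the median as the "center of the distribution," and the sample figures are all hypothetical.

```python
from dataclasses import dataclass
from statistics import median


@dataclass
class CountryExposure:
    """Hypothetical per-country record; field names are illustrative only."""
    name: str
    attack_surface: int       # IPv4 addresses exposing at least one service
    dangerous_services: int   # exposed SMB + SQL Server + Telnet endpoints
    distinct_cves: int        # distinct CVEs seen across all services
    vuln_rates: list[float]   # per-service vulnerability rates (0.0 to 1.0)


def vulnerability_rate(vulnerable: int, exposed: int) -> float:
    """Exposed services with vulnerabilities divided by all exposed services."""
    return vulnerable / exposed if exposed else 0.0


def rank_key(c: CountryExposure) -> tuple:
    # Assumption: criteria apply in the listed order, later ones breaking
    # ties; the median stands in for "center of the distribution".
    return (
        c.attack_surface,
        c.dangerous_services,
        c.distinct_cves,
        median(c.vuln_rates),
        max(c.vuln_rates),
    )


def rank_countries(countries: list[CountryExposure]) -> list[str]:
    """Return country names ordered from most exposed to least exposed."""
    return [c.name for c in sorted(countries, key=rank_key, reverse=True)]


# Made-up sample data, for illustration only.
sample = [
    CountryExposure("Country A", 1_000, 50, 12, [0.10, 0.20, 0.30]),
    CountryExposure("Country B", 5_000, 10, 30, [0.05, 0.10, 0.40]),
    CountryExposure("Country C", 1_000, 50, 12, [0.10, 0.25, 0.30]),
]
ranking = rank_countries(sample)
```

In this sample, Country B ranks first on attack surface alone, while Countries A and C tie on the first three criteria and are separated only by their median vulnerability rate.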

As one might expect, our ranking methodology highlights countries with large swaths of IP space, so the U.S. and China being in the No. 1 and No. 2 spots of “most exposed” shouldn't be too surprising. The interesting, and perhaps more surprising, findings are found from No. 3 through the remainder of the 50 most-exposed regions. For example, while Canada and Iran both have sophisticated, extensive internet presence, Canada has less than half the human population of Iran. Even so, Canada and Iran have very similar exposure rates, with Canada edging out Iran for the No. 9 exposure spot.

More to Come

In the coming weeks, we expect to produce supplemental reports that take a closer look at certain countries and groups of countries, such as Japan and Latin America. If you have a favorite region you'd like to visit with this particular magnifying glass, please let us know!

Industry Rankings

In 2019, Rapid7 produced a series of reports, the Industry Cyber-Exposure Reports (or ICERs), which looked at the security posture of the Fortune 500 in the United States, the FTSE 250+ in the United Kingdom, the Deutsche Börse Prime Standard 320 in Germany, the ASX 200 in Australia, and the Nikkei 225 in Japan. Because we've already gone to the trouble of identifying these 1,500 or so highly successful, well-resourced, publicly traded companies, we've also taken a moment to grade and rank these industries against each other.

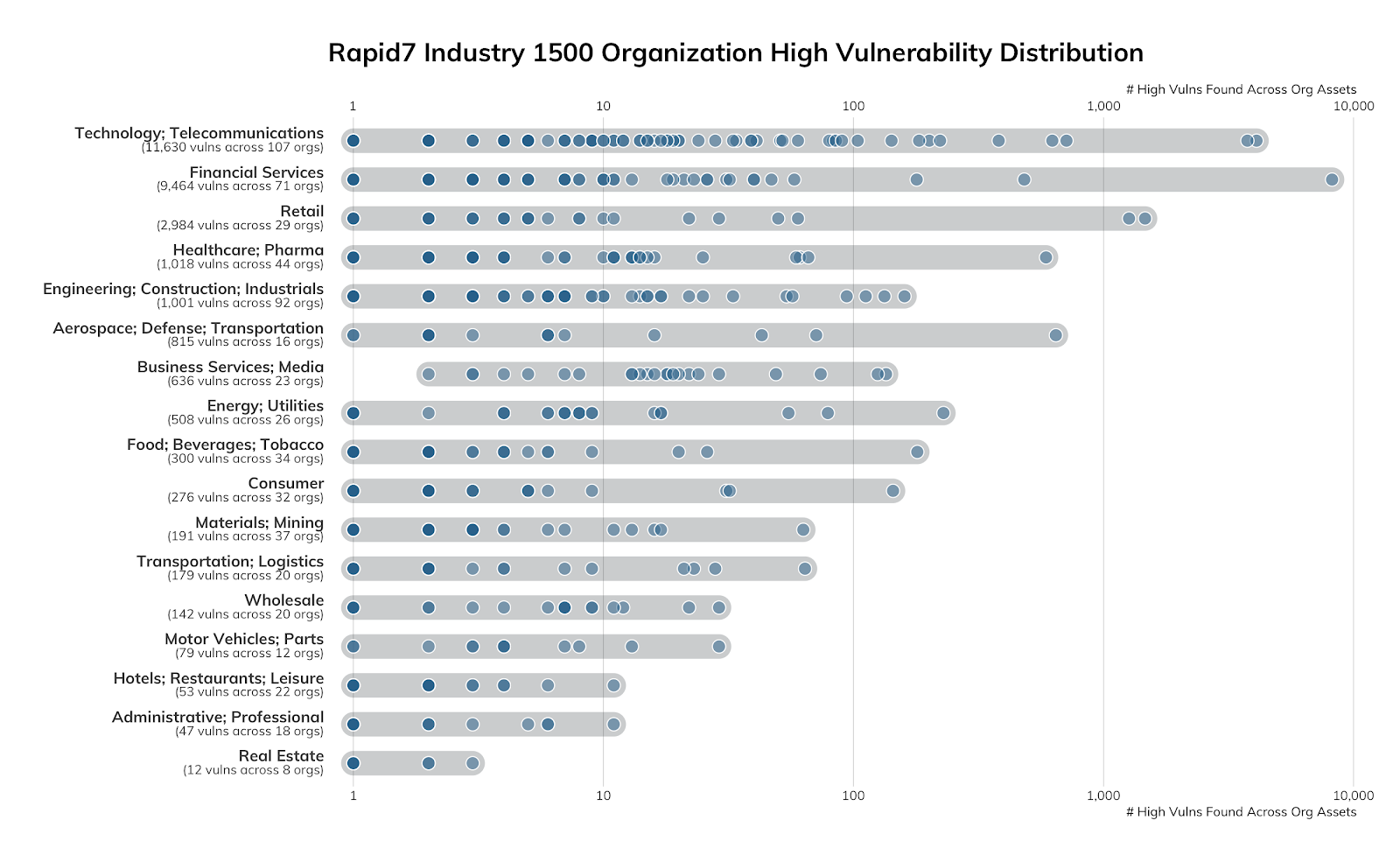

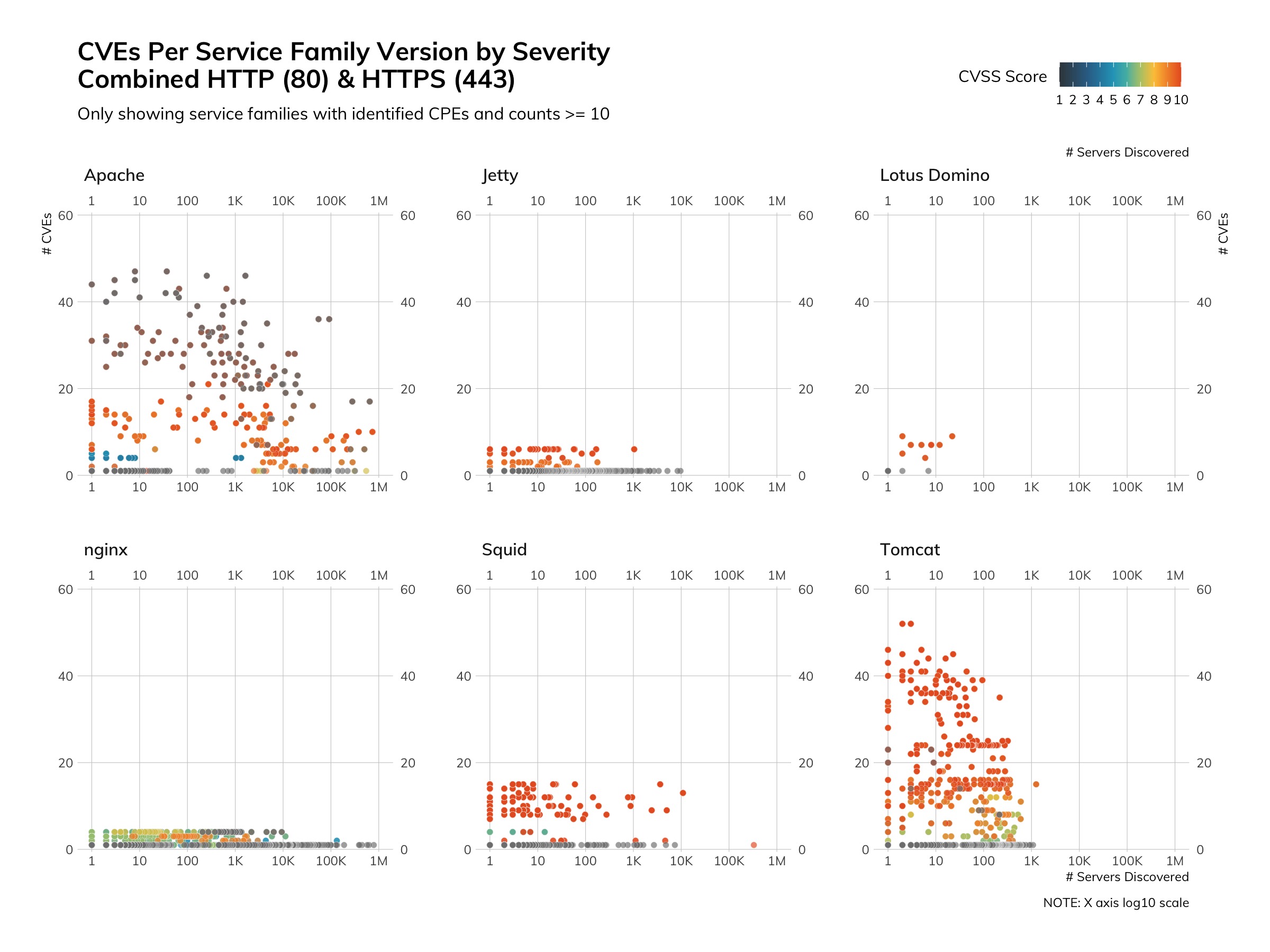

In the above chart, each dot represents one organization, and the position on the X axis represents how many high-severity vulnerabilities were discovered in components of their internet-facing attack surface. These components range from web servers to caching servers to the operating systems these services run on. The majority of the weaknesses are in poorly maintained Apache HTTP web servers, with a smattering of “the usual suspects” (e.g., DNS, SMTP, SSH) trailing behind.

Apache components (in this case, the HTTPD web server and Java servlet runner) have the distinct disadvantage of being much older than, say, nginx, and also being part of “appliances” that many organizations have little visibility into or control over. This makes a great case for some type of “software bill of materials”[3] so IT and security teams can better manage their exposure.

While the chart shows the overall distribution, we’ve approached ranking these industries in a slightly different way than we did country ranks, since we know a bit more about the population that is exposing these services. These are well-financed organizations; most of them must adhere to at least one regulatory framework; they employ dedicated information technology and information security experts; most have been around for at least 10 years; and, all of them should have some basic IT and cybersecurity hygiene practices in place.

With all this in mind, we defined our own cyber-version of grime and rodent infestations as the number of “high” (CVSS 8.5 or higher) vulnerabilities in discovered services for each industry grouping[4], and assigned a letter grade similar to what New York City does for restaurants. The assigned letter is determined by the logarithmic mean high exposure per industry (since some industries have far fewer member organizations than others and certain industries lend themselves to exposing hundreds or even thousands of assets), which results in the following scores, shown in Table 3.
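To make the grading idea concrete, here is a minimal sketch of one plausible reading of "logarithmic mean": the mean of log-transformed per-organization counts. This is an assumption, not the report's confirmed formula, and the grade cutoffs in `GRADE_CUTOFFS` are hypothetical values chosen only to make the example run.

```python
import math


def log_mean_exposure(high_vuln_counts: list[int]) -> float:
    """Mean of log10(1 + count) across an industry's organizations.

    Assumption: the log transform damps the effect of a few organizations
    that expose hundreds or thousands of vulnerable assets.
    """
    if not high_vuln_counts:
        return 0.0
    total = sum(math.log10(1 + n) for n in high_vuln_counts)
    return total / len(high_vuln_counts)


# Hypothetical cutoffs; the actual grading thresholds are not stated here.
GRADE_CUTOFFS = [(0.5, "A"), (1.0, "B"), (1.5, "C")]


def letter_grade(score: float) -> str:
    """Map a log-mean score to a letter; anything past the last cutoff is a D."""
    for cutoff, grade in GRADE_CUTOFFS:
        if score < cutoff:
            return grade
    return "D"
```

Under these assumptions, an industry whose organizations have no high-severity findings scores 0.0 and earns an "A", while a handful of heavily exposed organizations cannot single-handedly drag a large industry to a "D".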

Looking back at just the past 12 or so months, all of the industries with a “D” grade have garnered the majority of breach and ransomware headlines, so it’s not too surprising to see them trailing behind the others.

However, none of these industries come close to “perfect,” and our previous ICER work showed each regional list of companies had a lot of work to do when it comes to cyber-hygiene. This is underscored by the fact that 80% of the Apache HTTPD high exposure lies in versions ranging from three to 14 years old, as seen in Table 4.

However, the news is not all bad. We’ve mapped the public IPv4 space of just under 1,500 organizations across the ICERs, and only a minority slice—611 (~40%)—had high-severity exposure. We hope to see that percentage drop as more organizations replace aging IT applications and infrastructure with more modern components.

Categorizing Internet Security: Key Findings

While we are bolstered by the evidence that progress is being made in cybersecurity, and the trend is heading in the right direction, progress continues to be slow. Arguably, progress is happening far too slowly when considered in the context of the exploding adoption of, and reliance on, connected technologies; the prevalence of both automated, untargeted attacks, and highly sophisticated and well-resourced attackers; and the increasing complexity of our systems.

With that in mind, let’s examine which aspects contribute to the overall security posture of this advanced global telecommunications network we all depend on to conduct commerce, express our culture, and, more than ever, live our daily lives. We believe that it comes down to four considerations, all measured throughout this paper across the 24 protocols we assessed: the design, deployment, access, and maintenance of internet-based services.

Cryptographic Design

The internet was invented in the 1960s, exploded in the 1990s, and has continued to grow and change throughout the 21st century. Along the way, thousands of universities, companies, and individuals have developed novel solutions for transmitting and receiving data across a public network, yet only recently have services been designed with at least some security concepts in mind. The most important design element of any new service on the internet is the incorporation of modern cryptography, which serves two functions. First, cryptography ensures that communications and data maintain a high degree of confidentiality and cannot be easily eavesdropped upon or altered while traversing the public internet. Second, cryptographic controls establish the identities of the machines and people involved in secure conversations: cryptography ensures that you know with certainty that the machine that purports to be part of your bank is actually your bank’s, and your bank can know that you are, in fact, you. Whenever cryptography isn't used (known as “cleartext” communications), neither confidentiality nor identity can be guaranteed in any meaningful way.

- Key finding: A technical assessment of the 24 service protocols surveyed finds that, on the whole, unencrypted, cleartext protocols are still the rule, rather than the exception, for how information flows around the world, with 42% more plaintext HTTP servers than HTTPS, 3 million databases awaiting insecure queries, and 2.9 million routers, switches, and servers accepting Telnet connections.

- Key recommendation: If it touches the internet, it should be encrypted. This goes for data, service identification, everything.
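The two functions of cryptography described above, confidentiality and identity, map onto a TLS client that both encrypts the channel and verifies the server's certificate. The following is a minimal sketch using Python's standard `ssl` module; the helper names `make_strict_tls_context` and `fetch_peer_subject` are hypothetical, and the choice of TLS 1.2 as the minimum version is an assumption rather than a recommendation from this report.

```python
import socket
import ssl


def make_strict_tls_context() -> ssl.SSLContext:
    """Client context that verifies the server certificate and hostname."""
    # create_default_context() enables CERT_REQUIRED and hostname checking.
    context = ssl.create_default_context()
    # Assumption: refuse protocol versions older than TLS 1.2.
    context.minimum_version = ssl.TLSVersion.TLSv1_2
    return context


def fetch_peer_subject(host: str, port: int = 443, timeout: float = 5.0) -> dict:
    """Connect over TLS and return the verified certificate's subject fields."""
    context = make_strict_tls_context()
    with socket.create_connection((host, port), timeout=timeout) as raw:
        # server_hostname enables SNI and drives hostname verification.
        with context.wrap_socket(raw, server_hostname=host) as tls:
            cert = tls.getpeercert()
    # The subject is a tuple of RDNs, each a tuple of (key, value) pairs.
    return {key: value for rdn in cert["subject"] for key, value in rdn}
```

If the certificate does not validate or does not match the hostname, `wrap_socket` raises `ssl.SSLCertVerificationError` instead of silently falling back to an unauthenticated connection, which is the behavior a cleartext protocol cannot offer.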

Service Deployment

This area is the one we tend to spend the most time and effort in measuring—what services are actually available on the internet, and who can reach those services? The internet was created with a decentralized and open design, but this openness is a double-edged sword. On the one hand, it makes the internet, as a whole, very difficult to destroy. When parts of the internet are damaged and destroyed, the internet tends to simply route around the damage, continuing to function for other services and their users. On the other hand, it means that every computer can normally reach and exchange information with any other computer, even if some of those computers are operated by malicious attackers. Therefore, when we find services that should not be reachable by just anyone, such as databases, command and control consoles, or other services that external strangers have no business talking to in the first place, we mark them as dangerously exposed.

- Key finding: One bit of positive news is that the population of insecure services has gone down over the past year, with an average 13% decrease in exposed, dangerous services such as SMB, Telnet, and rsync. This defied the predicted doom-and-gloom jump in newly exposed insecure services, despite the sudden shift to work-at-home for millions of people and the continued rise of Internet of Things (IoT) devices crowding residential networks.

- Key recommendation: Keep up the good work! ISPs, enterprises, and governments should continue to work together to kick these inappropriate services off the internet.
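Whether a service is "reachable by just anyone" can be checked from outside the network with a plain TCP connection attempt. The sketch below is a simplified illustration, not the scanning method used for this research. The helper names are hypothetical, the port numbers are the ones listed for these services in this report, and it should only be pointed at hosts you are authorized to test.

```python
import socket

# Ports for services this report treats as dangerous to expose.
DANGEROUS_PORTS = {23: "Telnet", 445: "SMB", 873: "rsync"}


def is_reachable(host: str, port: int, timeout: float = 2.0) -> bool:
    """Return True if a TCP connection to host:port succeeds."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts, and unreachable hosts.
        return False


def dangerous_exposures(host: str, ports: dict[int, str] = DANGEROUS_PORTS) -> list[str]:
    """Names of the listed services that accept connections on this host."""
    return [name for port, name in ports.items() if is_reachable(host, port)]
```

A completed TCP handshake only shows that something is listening; confirming which service is actually behind the port would require a protocol-specific probe.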

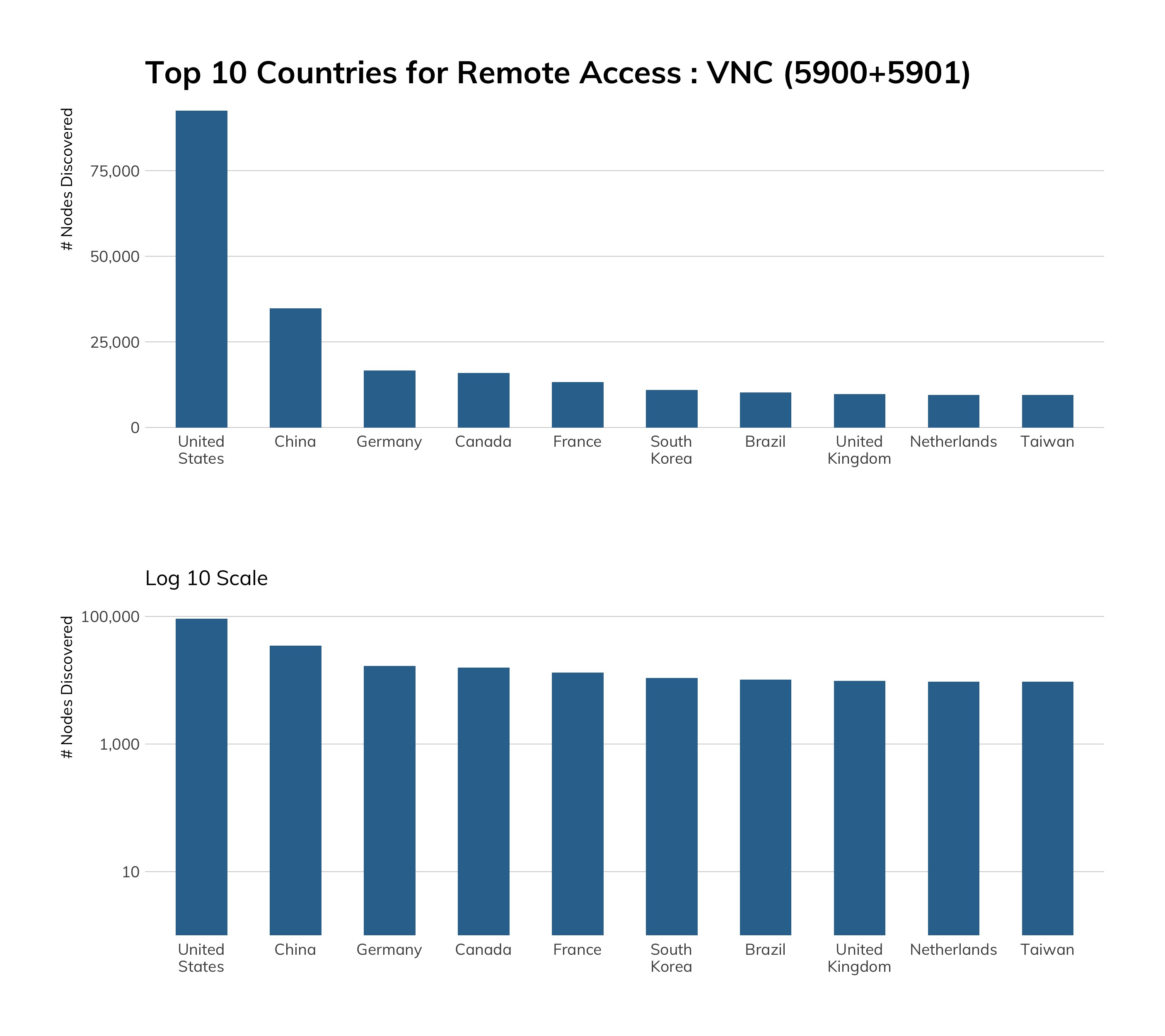

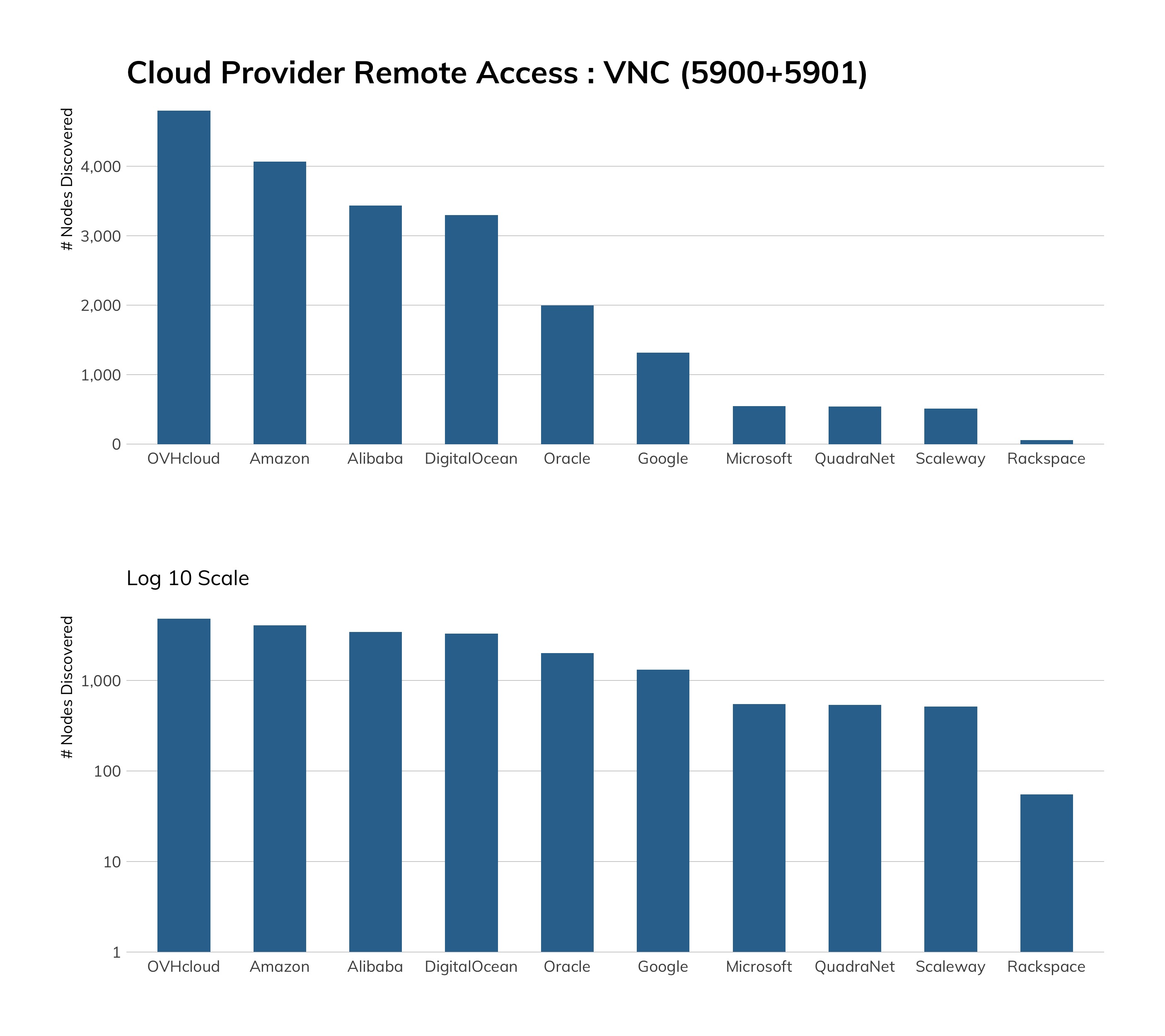

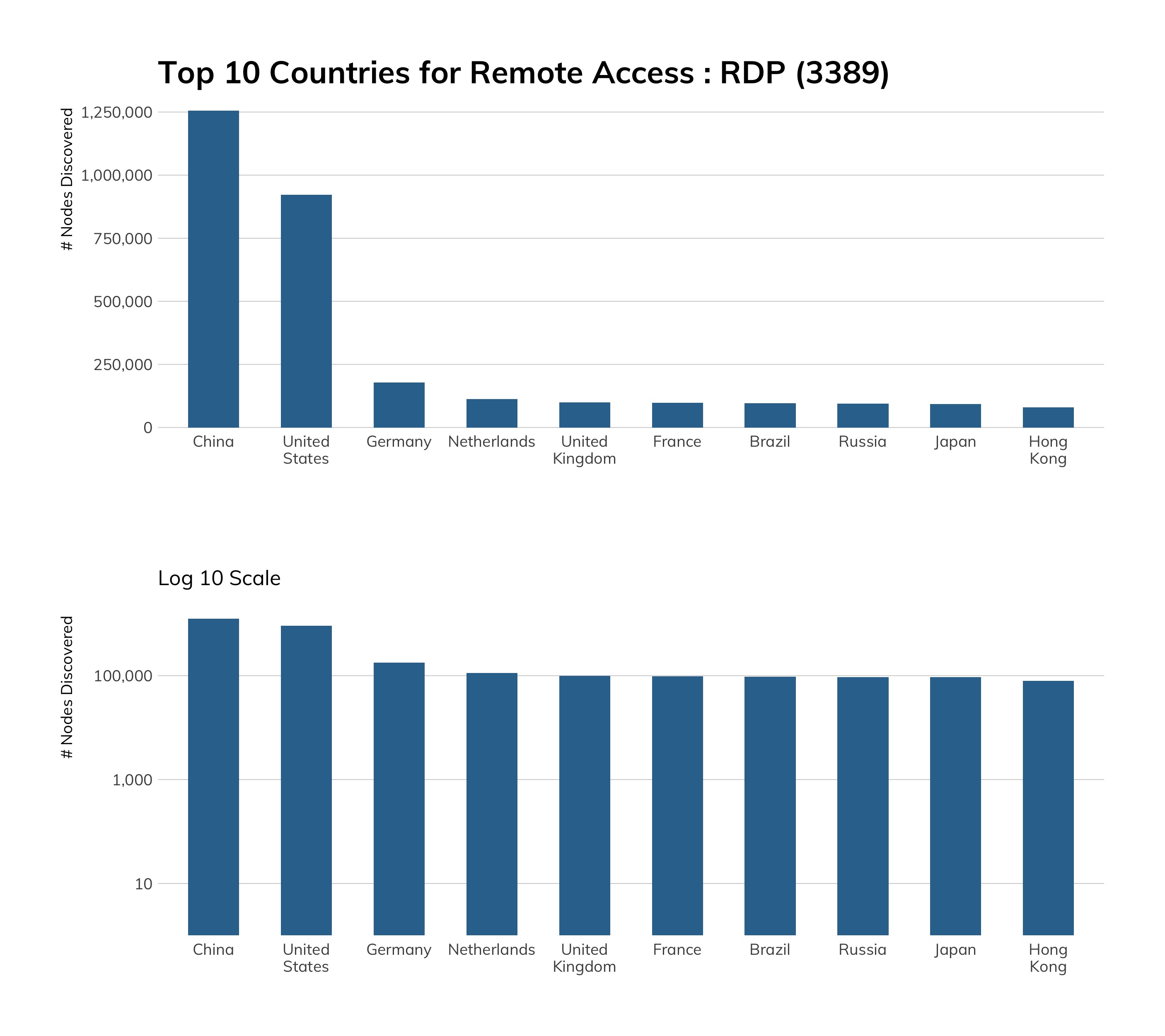

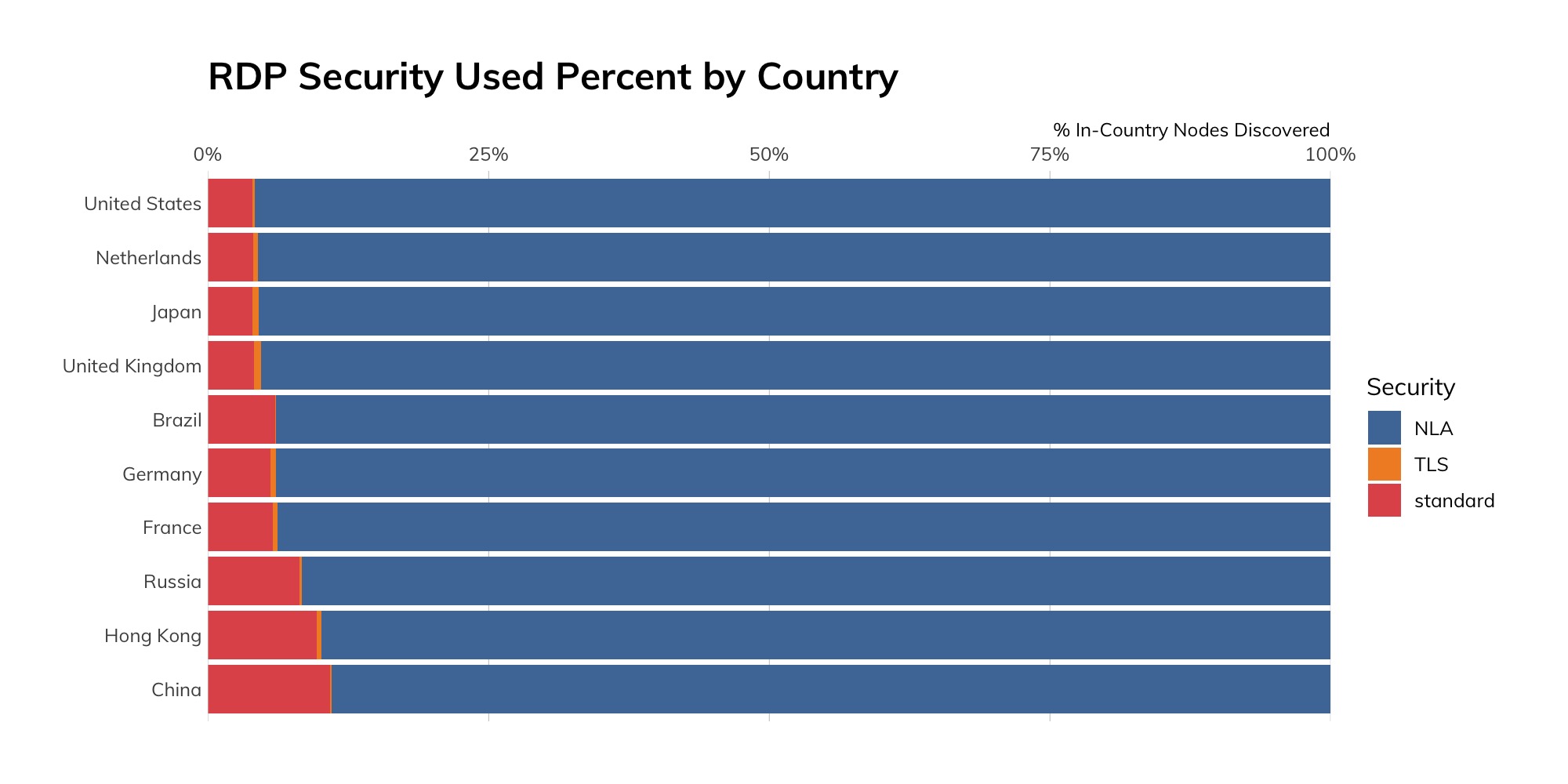

Console Access

The first job of the internet was to provide remote system operators with a capability to log on to far-flung computers, run commands, and get results, and securing those remotely accessible consoles was second to connectivity. Today, when we find console access, security professionals tend to take a dim view of that entry point. At the very least, these Telnet, SSH, RDP, and VNC consoles should be hardened against internet-based attackers by incorporating some type of second-factor authentication or control. Usually, incorporating a virtual private network (VPN) control removes the possibility of direct access; potential attackers must first breach that cryptographically secure VPN layer before they can even find the consoles to try passwords or exploit authentication vulnerabilities. Services lacking this first line of defense are otherwise open to anyone who cares to “jiggle the doorknobs” to see how serious the locks are, and automated attacks can jiggle thousands of doorknobs per second across the world.

- Key finding: We have discovered nearly 3 million Telnet servers still active and available on the internet, and many of those are associated with core routing and switching gear. This is 3 million too many. While remote console access is a fundamental design goal of the internet, there is no reason to rely on this ancient technology on the routers and switches that are most responsible for keeping the internet humming.

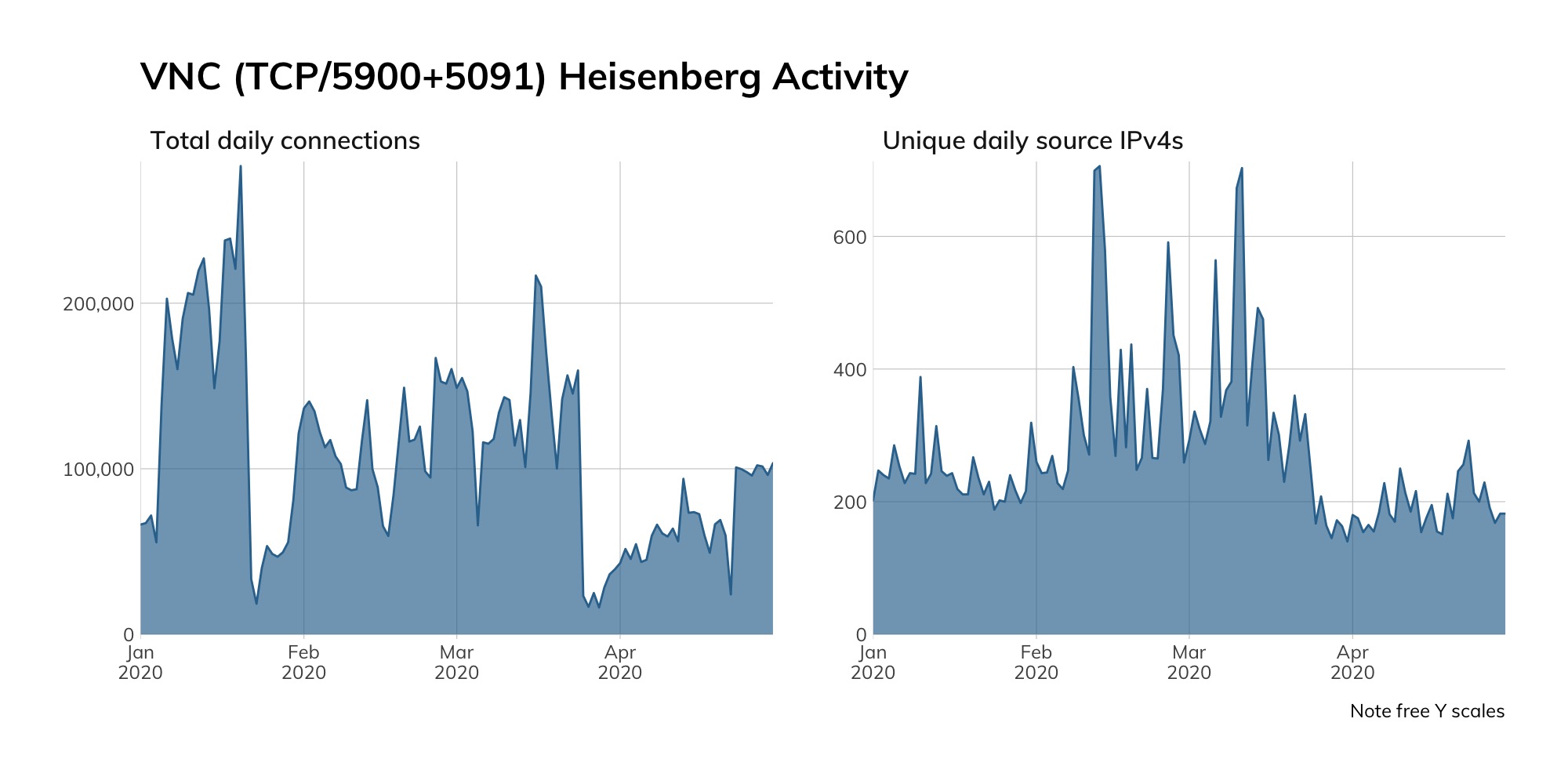

- Key recommendation: Telnet, SSH, RDP, and VNC should all enjoy at least one extra layer of security: either multifactor authentication or availability only within a VPN-ed environment.

Software Maintenance

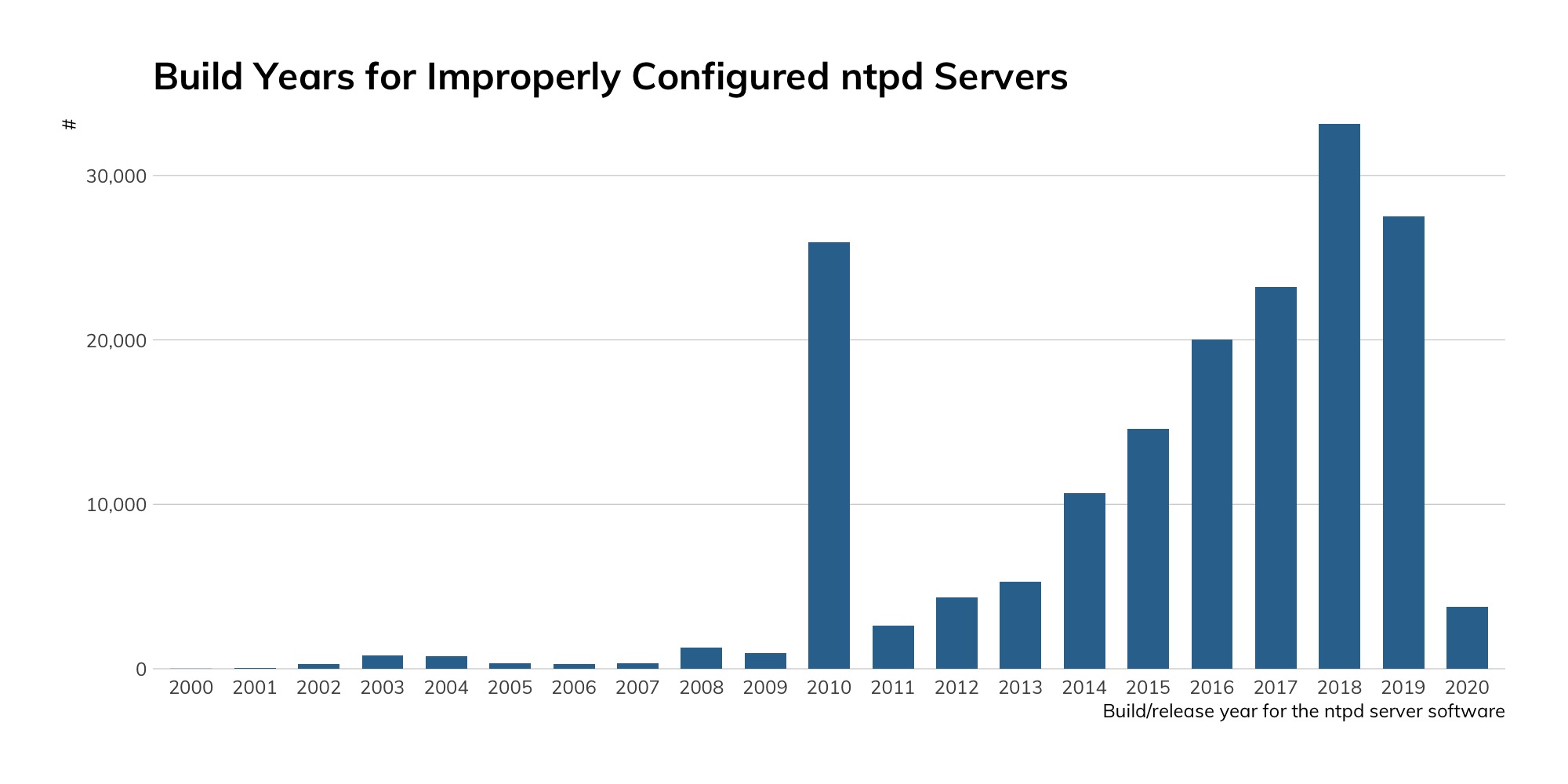

We often talk about the internet in terms of the machines that swap the bits of information around the world, but recall that these machines are remarkably durable. They are not designed with physical pistons or gears that can wear out, but instead are largely made of solid-state, unmoving electronics, with the most mechanical part of a machine being a cooling fan. These machines can run, without incident or human attention, for many years. While this may seem like a feature, it can, in fact, lead to complacency. The complex software that runs on this hardware absolutely does require routine maintenance in the form of patches, upgrades, and eventual replacements. As our knowledge and understanding of security and secure design concepts expand, new software must be deployed to satisfy the same business, academic, or cultural requirements. Unfortunately, we have found evidence that this routine maintenance is largely absent in many areas of internet service, as we find decades-old software running with decades-old software vulnerabilities, just waiting for exploitation.

- Key finding: Patch and update adoption continues to be slow, even for modern services with reports of active exploitation. This is particularly true in the areas of email handling and remote console access where, for example, 3.6 million SSH servers are sporting vulnerable versions between five and 14 years old. More troubling, the top publicly traded companies of the United States, the United Kingdom, Australia, Germany, and Japan are hosting a surprisingly high number of unpatched services with known vulnerabilities, especially in financial services and telecommunications, which each have ~10,000 high-rated CVEs across their public-facing assets. Despite their vast collective reservoirs of wealth and expertise, this level of vulnerability exposure is unlikely to get better in a time of global recession.

- Key recommendation: Given that all software has bugs and patching is inevitable, enterprises should bake in regular patching windows and decommissioning schedules to their internet-facing infrastructure.
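A decommissioning schedule starts with knowing how old each deployed release is. As a minimal sketch of that bookkeeping, the code below flags services whose release date exceeds an age limit. The inventory format, the service names, and the three-year default threshold are all hypothetical choices for illustration.

```python
from datetime import date


def age_in_years(released: date, as_of: date) -> float:
    """Approximate age of a release in years as of a given date."""
    return (as_of - released).days / 365.25


def overdue(inventory: dict[str, date], as_of: date,
            max_age_years: float = 3.0) -> list[str]:
    """Names of services whose deployed release is older than the limit."""
    return sorted(
        name for name, released in inventory.items()
        if age_in_years(released, as_of) > max_age_years
    )


# Made-up inventory mapping a service name to its release date.
inventory = {
    "mail-gateway": date(2010, 1, 1),
    "web-frontend": date(2019, 6, 1),
}
stale = overdue(inventory, as_of=date(2020, 4, 1))
```

Release age is only a proxy: a recent release can still carry known vulnerabilities, so a check like this complements, rather than replaces, matching versions against published CVEs.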

Disaster, Slouching Toward the Internet

With these aspects of internet engineering in mind, we are certain that there is much more work to do. While the internet has gotten incrementally “better” in the past year, and so far appears to be resistant to biologically and economically influenced disaster, vulnerability and exposure abound on the modern internet. These exposures continue to pose serious risk through the continued use of poorly designed, outmoded software, through an over-availability of critical “backend” services, through uncontrolled operating system console access points, and through a lack of routine patch and upgrade maintenance.

Furthermore, we know that internet-based attackers are well aware of these weaknesses in design, deployment, access, and maintenance, and are already exploiting soft internet targets with essentially no consequences. We know there are no effective “internet police” patrolling the internet, so it is up to IT and cybersecurity professionals around the world to secure their internet infrastructure, with the assistance of those who teach, train, advise, and pay them.

This is a fairly weighty tome of observations. Specific, actionable security advice is given for each of the 24 protocols and their seven service categories of console access, file sharing, email, graphical remote access, database services, core internet infrastructure, and the web. If you take nothing else away from this research report, we hope we can convince you of this: The internet is not an automatic money- and culture-generating machine; it depends on the heroic efforts of thousands and thousands of professionals who are committed to its well-being, even in the face of daily attacks from a wide array of technically savvy criminals and spies.

The Role of Policymakers

The Pen Is Mightier Than The Firewall

Policymakers—those folks in governments around the world who promulgate and enforce regulations, determine public budgets and procurement priorities, and oversee tax-funded grants—should and do play a crucial role in the security of the internet. Security is not manifested through technology alone, but also through the right mix of incentives, information sharing, and leadership, ideally informed by up-to-date and accurate information on the evolving security landscape and expert guidance on potential solutions to complex security challenges. Where could policymakers find some research like that? Why, right here!

The NICER 2020 outlines the risks and multinational prevalence of protocols that are inherently flawed or too dangerous to expose to the internet—such as FTP, Telnet, SMB, and open, insecure databases—which have direct bearing on public- and private-sector security. Policymakers can review this corpus of current internet research to help inform decisions regarding online privacy, security, and safety. Policymakers should consider using this information to encourage both public- and private-sector organizations to reduce their collective dependence on high-risk protocols, mitigate known vulnerabilities and exposures, and promote the use of more secure protocols. For example:

- Issue guidance on the use of specific high-risk protocols and software applications, such as in the context of implementing security and privacy regulations;

- Evaluate the presence of high-risk protocols and applications in government IT, and transition government systems to more secure and modernized protocols;

- Conduct oversight into agencies’ efforts to mitigate known exposures in government IT;

- Consider exposure to dangerous protocols in inquiries, audits, or other actions related to private-sector security;

- Use data regarding the regional prevalence of known exposures and dangerous protocols to enhance multinational cooperative efforts to share threat information;

- Direct additional studies into the impact of cleartext, dangerous, and inherently insecure protocols and applications on national security, national economies, and consumer protection, and the challenges organizations face in mitigating exposure.

Measuring Exposure: A Per-Protocol Deep Dive

The remainder of this report is concerned with in-depth analysis of 24 protocols across seven families of services, covering everything from Telnet to DNS-over-TLS. First, we compare the raw number of services providing each protocol against counts from the same time last year, in 2019. Then, each protocol section will have a brief “Too Long, Didn’t Read” (or TLDR) overview of that protocol with an executive summary of:

- What the protocol is for

- How many of these services we found

- The general vulnerability profile of that protocol

- Alternatives to that protocol for its business function

- Our advice regarding that protocol

- Whether things are getting better or worse in terms of deployment

Following this overview, we provide detailed data and analysis about these protocols, including the per-country, per-cloud, and per-version breakdowns of what we saw. Each one of these analyses would make for a fine paper in and of itself, but lucky you, you get all of them at once. So, strap on your cyber-scuba rebreather, and let's dive deep!

Measuring Exposure

Internet Services, Year Over Year

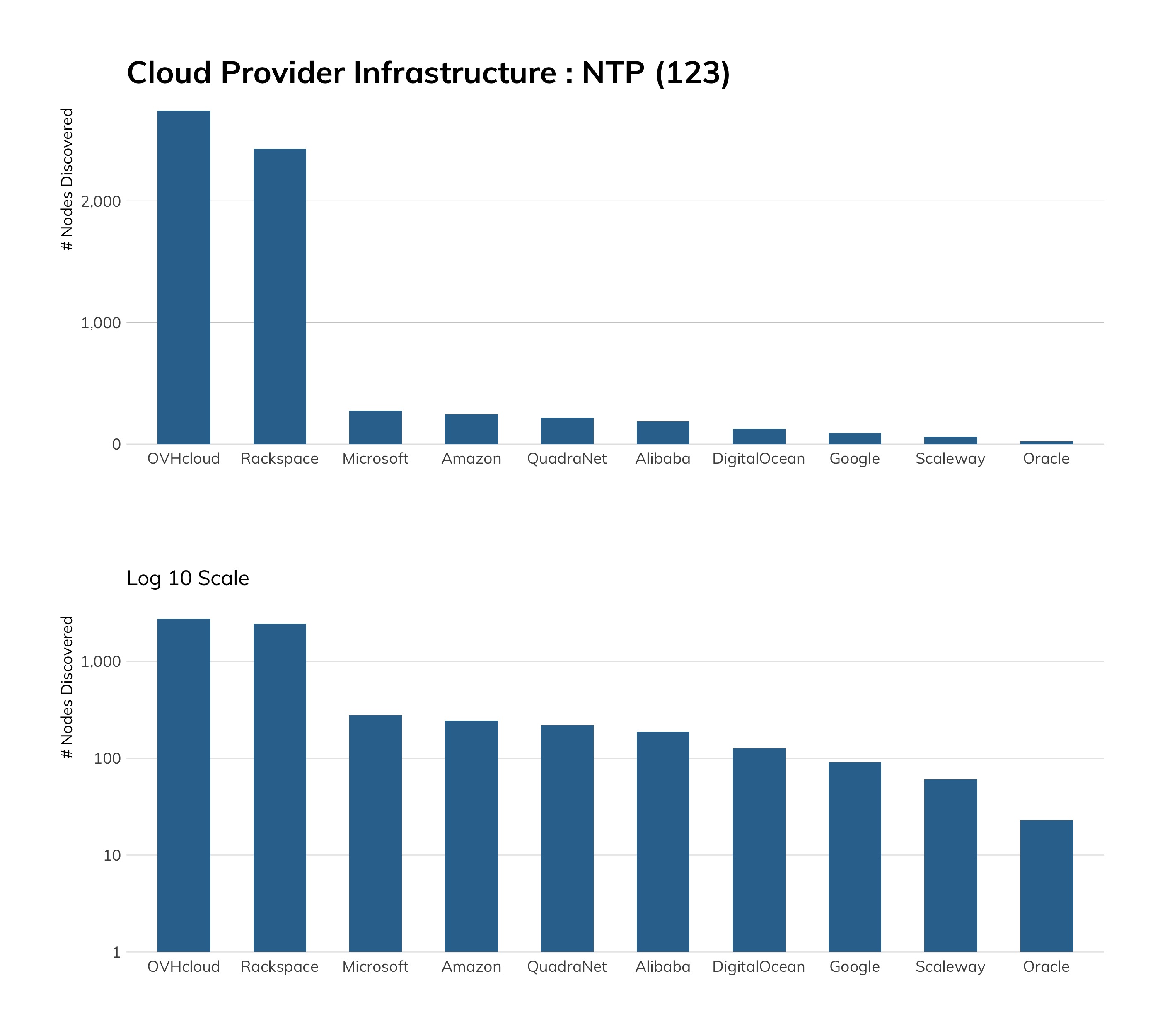

Providing a point-in-time exposure view has tons of merit, but the Rapid7 Labs team was curious as to how April 2020 compares to April 2019 since quite a bit has happened since then.

We approached the report with some hypotheses regarding the impact of the global pandemic and a looming worldwide recession, such as “we will probably see more RDP servers” as people are forced to work from home, but still need access to their GUI consoles, or, “we'll probably see a spike in SMB” as people hastily reconfigured Windows workflows to accommodate a remote workforce. It turns out that we were woefully wrong. Table 5 shows the rise and fall—and mostly fall—of the protocols covered in these 2020 studies compared to a sampling of 2019 studies around this time last year.

| Sonar Study | Port | 2019 | 2020 | Change | Percentage |

|---|---|---|---|---|---|

| SMB | 445 | 709,715 | 594,021 | -115,694 | -16.30% |

| Telnet | 23 | 3,250,417 | 2,830,759 | -419,658 | -12.91% |

| rsync | 873 | 233,296 | 208,882 | -24,414 | -10.46% |

| POP3 | 110 | 4,818,758 | 4,335,533 | -483,225 | -10.03% |

| SMTP | 25 | 6,439,139 | 5,809,982 | -629,157 | -9.77% |

| IMAP | 143 | 4,296,778 | 4,049,427 | -247,351 | -5.76% |

| SMTP | 587 | 4,220,184 | 4,011,697 | -208,487 | -4.94% |

| RDP | 3389 | 4,171,666 | 3,979,356 | -192,310 | -4.61% |

| POP3S | 995 | 3,887,033 | 3,717,883 | -169,150 | -4.35% |

| IMAPS | 993 | 4,008,577 | 3,852,613 | -155,964 | -3.89% |

| MS SQL Server | 1434 | 102,449 | 98,771 | -3,678 | -3.59% |

| SMTPS | 465 | 3,592,678 | 3,497,791 | -94,887 | -2.64% |

| DNS (Do53) | 53 | 8,498,166 | 8,341,012 | -157,154 | -1.85% |

| FTP | 21 | 13,237,027 | 13,002,452 | -234,575 | -1.77% |

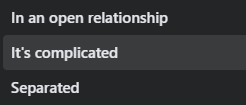

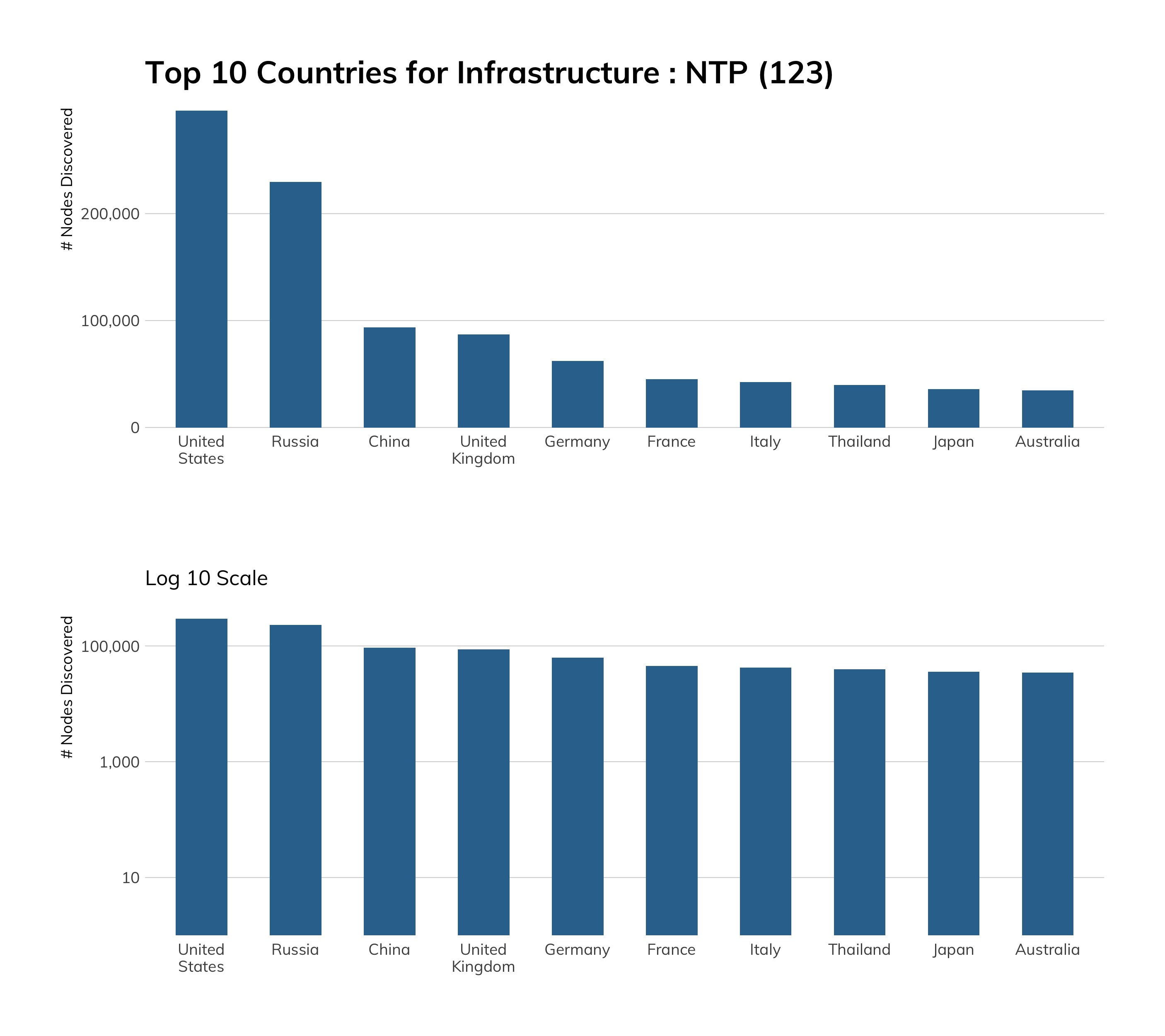

| NTP | 123 | 1,653,599 | 1,638,577 | -15,022 | -0.91% |

| FTPS | 990 | 443,299 | 460,054 | 16,755 | 3.78% |

| SSH | 22 | 15,890,566 | 18,111,811 | 2,221,245 | 13.98% |

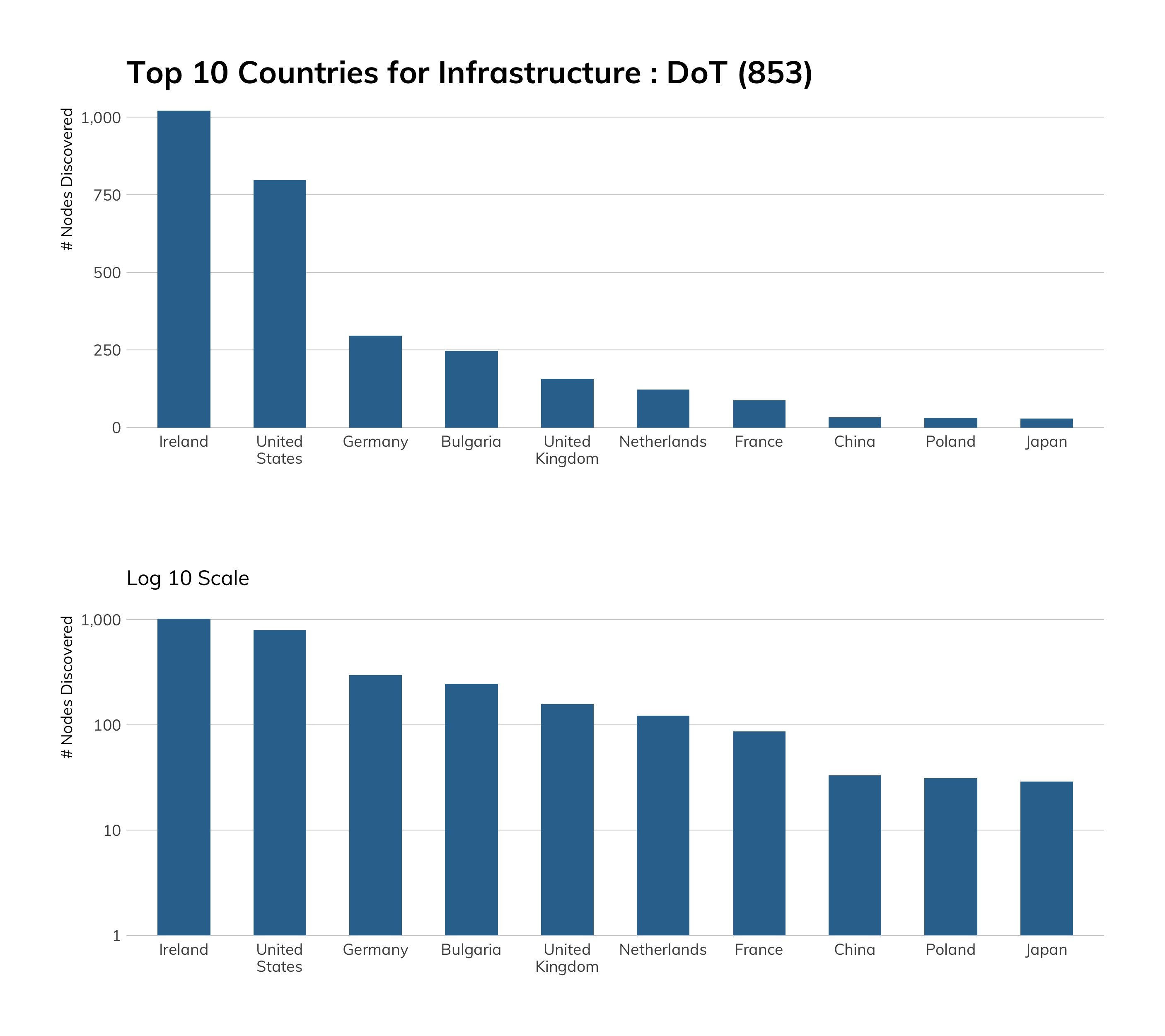

| DNS over TLS (DoT) | 853 | 1,801 | 3,237 | 1,436 | 79.73% |

Before we break down the table, we need to emphatically state that the internet is getting safer, at least where these core services are concerned. As certified security curmudgeons, we found that sentence extremely difficult to type. But the survey data does not lie, so let’s dig into the results.

First, we’ve left a few services without a highlight color in the “percent change” column. Scanning the internet is fraught with peril, as noted in the Methodology appendix, and we’ve collected sufficient scan data over the course of six years to understand how “off” a given scan might be (it’s become a bit worse over the years due to the increase in dynamic service provisioning in cloud providers, too). Each Sonar study has its own accuracy range, but across all of them, a year-over-year change within +/- 4% means we cannot make definitive statements about whether anything has “gone up” or “gone down.” All that said, it turns out that most services had numbers well outside that range.
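The "Change" and "Percentage" columns, and the +/- 4% accuracy range, can be reproduced with a few lines of arithmetic. The sketch below uses three rows of counts copied from Table 5. The function names are hypothetical, and treating a change of exactly 4% as inconclusive is an assumption.

```python
MARGIN_PCT = 4.0  # accuracy range stated in the text


def percent_change(before: int, after: int) -> float:
    """Year-over-year change as a percentage of the earlier count."""
    return (after - before) / before * 100.0


def classify(before: int, after: int, margin: float = MARGIN_PCT) -> str:
    """Label a change, treating anything within the margin as inconclusive."""
    change = percent_change(before, after)
    if abs(change) <= margin:
        return "inconclusive"
    return "increase" if change > 0 else "decrease"


# (2019 count, 2020 count) pairs copied from Table 5.
rows = {
    "SMB": (709_715, 594_021),
    "FTP": (13_237_027, 13_002_452),
    "SSH": (15_890_566, 18_111_811),
}
summary = {
    name: (round(percent_change(before, after), 2), classify(before, after))
    for name, (before, after) in rows.items()
}
```

Here SMB comes out as a decrease of about 16.3% and SSH as an increase of about 13.98%, while FTP's change of about 1.77% falls inside the margin and is therefore labeled inconclusive.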

Microsoft SMB has seen a roughly 16% drop in exposure, mostly due to ISPs blocking SMB ports. There are still over half a million Windows and Linux systems exposing SMB services, so let’s not break out the champagne just yet.

Telnet being down nearly 13% warms our hearts, since we’ve spent the last four or so years begging y’all to stop exposing it. Still, a sizable chunk of those remaining 2.8 million devices with Telnet exposed are routers and switches, so just because those services are stuck in 1999 configuration mode does not mean we can party like it is that year, either.

It’s great to see folks giving up on hosting their own mail infrastructure. While this transition to professionally hosted email services like Outlook 365 and G Suite may have seen a more accelerated adoption because of the sudden shutdown, we believe that this transition to more centralized email was already in progress and will continue.

Seeing a nearly 14% increase in SSH leaves us a bit concerned (see the SSH section to see how seriously vulnerable SSH services and their host operating systems are). We’re hoping to see a reduction in SSH over time as more Linux distributions mimic Ubuntu and adopt WireGuard[6] for secure remote system connections, as it is much safer than SSH, is as secure or more so, and provides even better connectivity.

Finally, we’re not surprised that the number of DoT servers nearly doubled over the past year, and expect to see another doubling (or more) in 2021.

So, while we have seen a major shift in the way people work and use the internet in this time of pandemic lockdown and economic uncertainty, we haven't detected a major shift in the nature and character of the internet itself. After all, the internet was designed with global disaster in mind, and to that end, it appears to be performing admirably.

That said, let's jump into the per-protocol analysis. Don't worry, we'll get back to our usual cynical selves.

Console Access

In the beginning, the entire purpose of the capital-I Internet was to facilitate connections from one text-based terminal to the other, usually so an operator could run commands “here” and have them executed “there.” While we usually think of the (now small-i) internet as indistinguishable from the (capital W's) World Wide Web, full of (essentially) read-only text, images, audio, video, and eventually interactive multimedia applications, those innovations came much later. Connecting to the login interface of a remote system was the foundational feature of the fledgling internet, and that legacy of remote terminal access is alive and not-so-well today.

Telnet (TCP/23)

It wasn't the first console protocol, but it's the stickiest.

Discovery Details

Way back in RFC 15,[7] Telnet was first described as “a shell program around the network system primitives, allowing a teletype or similar terminal at a remote host to function as a teletype at the serving host.” It is obvious from this RFC that this was intended to be a temporary solution and that "more sophisticated subsystems will be developed in time," but to borrow from Milton Friedman, there is nothing quite so permanent as a temporary solution. Remote console access is still desirable over today's internet, and since Telnet gets the job done at its most basic level, it persists to this day, 50 years later.

Exposure Information

Technically, despite its half-century of use, Telnet (as a server) has suffered relatively few killer vulnerabilities; a quick search of the CVE corpus finds only 10 vulnerabilities with a CVSS score of 5 or higher. However, Telnet suffers from a few foundational flaws. For one, it is nearly always a cleartext protocol, which exposes both the authentication (username and password) and the data to passive eavesdropping. It's also relatively easy to replace commands and responses in the stream, should attackers find themselves in a privileged position to man-in-the-middle (MITM) the traffic. Essentially, Telnet makes little to no security assurances at all, so paradoxically, the code itself tends to remain relatively vulnerability-free.

The bigger issue with Telnet is the fact that, in practice, default usernames and passwords are so common that it's assumed to be the case whenever someone comes across a Telnet server. This is the central hypothesis of the Mirai worm of 2016, which used an extremely short list of common default Telnet usernames and passwords and, for its trouble, managed to take down internet giants like Twitter and Netflix, practically by accident.

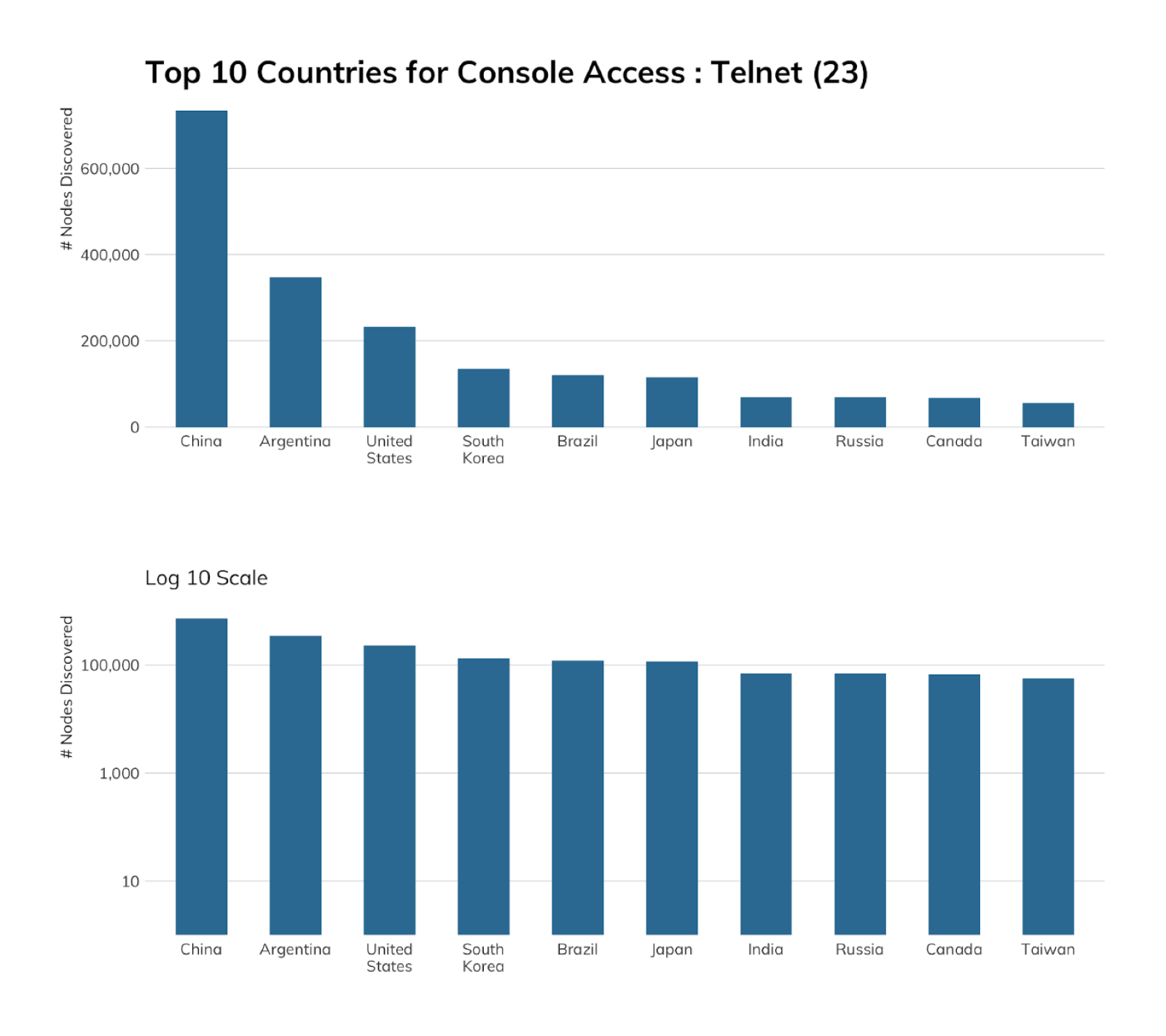

The chart to the right shows that China alone has a pretty serious Telnet problem, followed by Argentina and the United States.

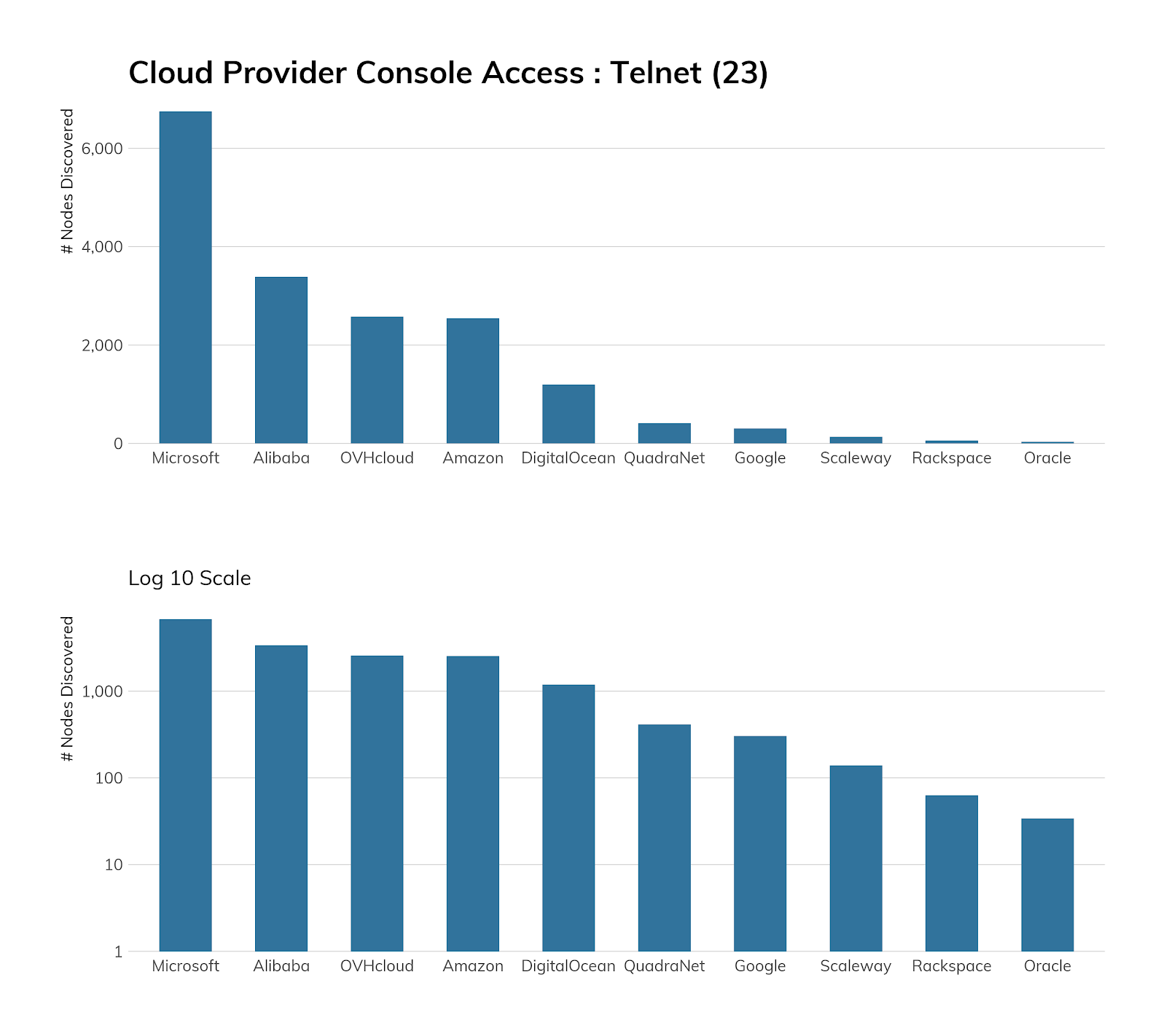

While we would prefer to see no Telnet among cloud service providers, we see that good progress seems to have been made here. Microsoft Azure tops the list with about 7,000 exposures, which is a little weird since Windows platforms don't normally have a built-in Telnet server.

Of the Telnet instances found on the internet that we could confidently identify by vendor, Table 6 describes those services from vendors that appear in Sonar with at least 10,000 responsive nodes.

| Vendor | Count |
|---|---|
| Cisco | 278,472 |
| Huawei | 108,065 |
| MikroTik | 73,511 |
| HP | 70,821 |
| Ruijie | 17,565 |
| ZTE | 15,558 |

What's interesting about these figures is that we can see right away that the vast majority of Telnet services exposed to the internet are strongly associated with core networking gear vendors. Cisco and Huawei, two of the largest router manufacturers on Earth, dominate the total count of all Telnet services. Furthermore, sources indicate that about 14% of Cisco devices and 11% of Huawei devices are accessible, today, using default credentials. This lack of care and maintenance of the backbone of thousands of organizations the world over is disappointing, and every one of these devices should be considered compromised in some way, today.

While Telnet is still prevalent far and wide across the internet, we can see that some ISPs are more casual about offering this service than others. The table below shows those regional ISPs that are hosting 10,000 or more Telnet services in their network. Furthermore, the vast majority of these exposures are not customer-based exposures, but are hosted instead on the core routing and switching gear provided by these ISPs. This practice (or oversight), in turn, puts all customers' network traffic originating or terminating in these providers at risk of compromise.

Attacker’s View

Telnet’s complete lack of security controls, such as strong authentication and encryption, makes it totally unsuitable for the internet. Hiding Telnet on a non-standard port is also generally useless, since modern Telnet scanners are all too aware of Telnet lurking on ports like 80 and 443, simply because firewall rules might prohibit port 23 exposure. Today, Telnet is most commonly associated with low-quality IoT devices with weak to no security hardening, so exposing these devices to the internet is, at the very least, a signal that there is no security rigor in that organization.

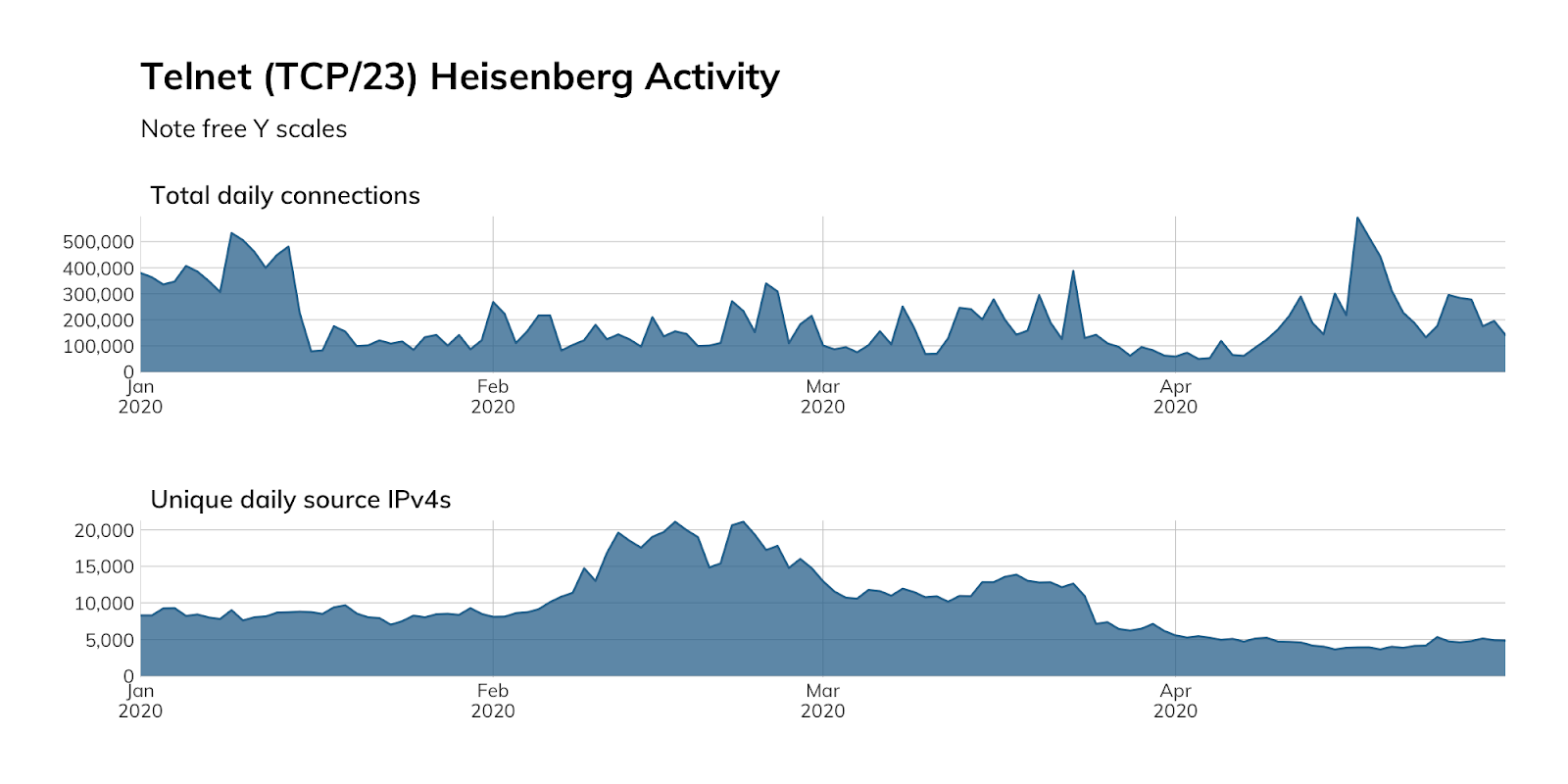

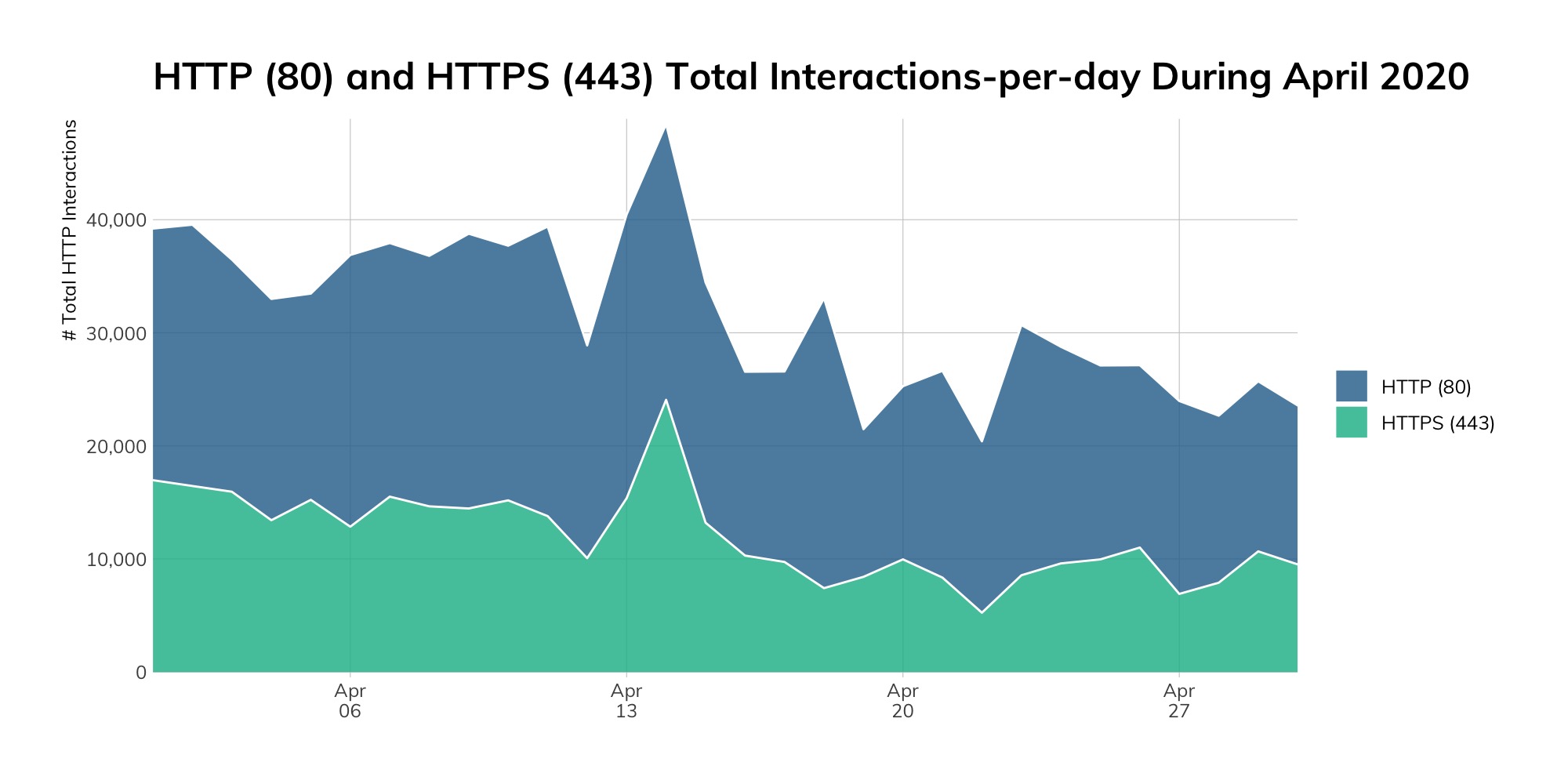

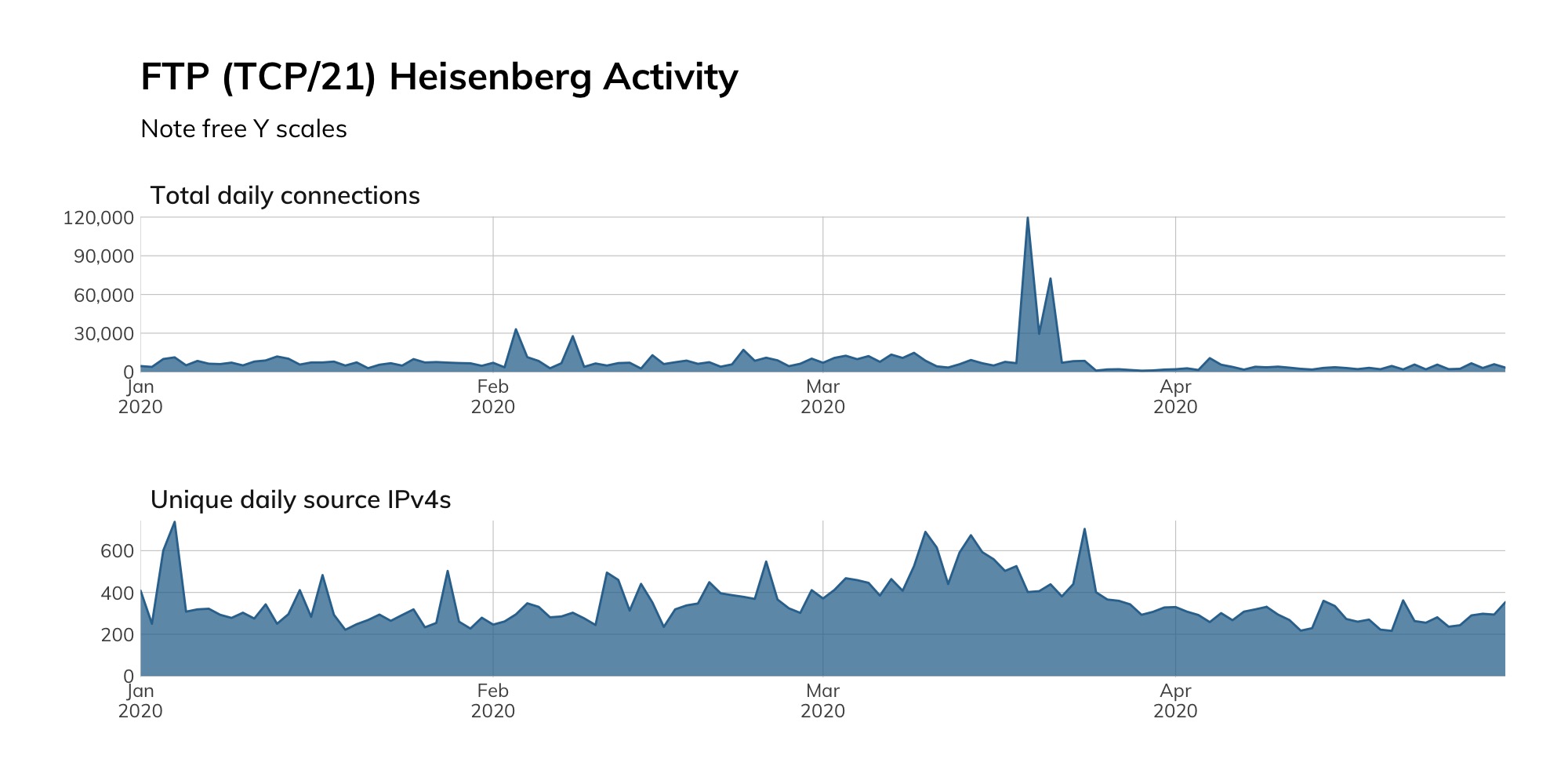

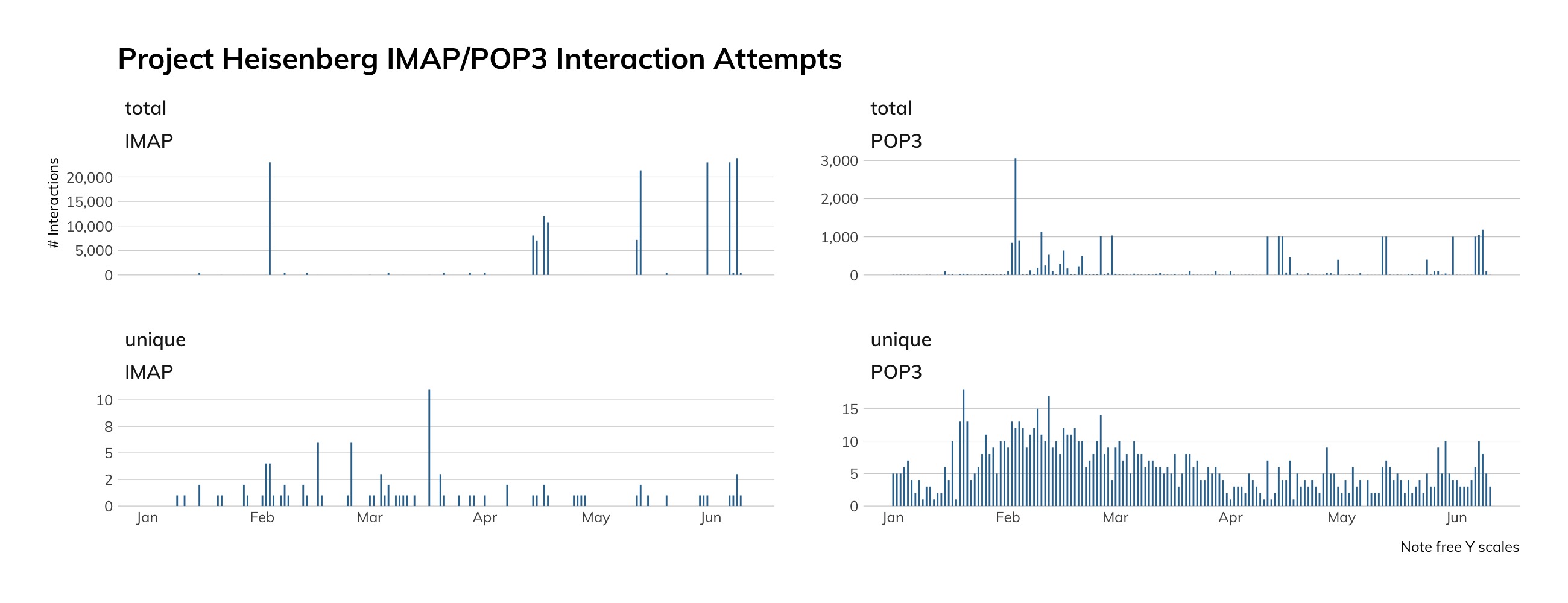

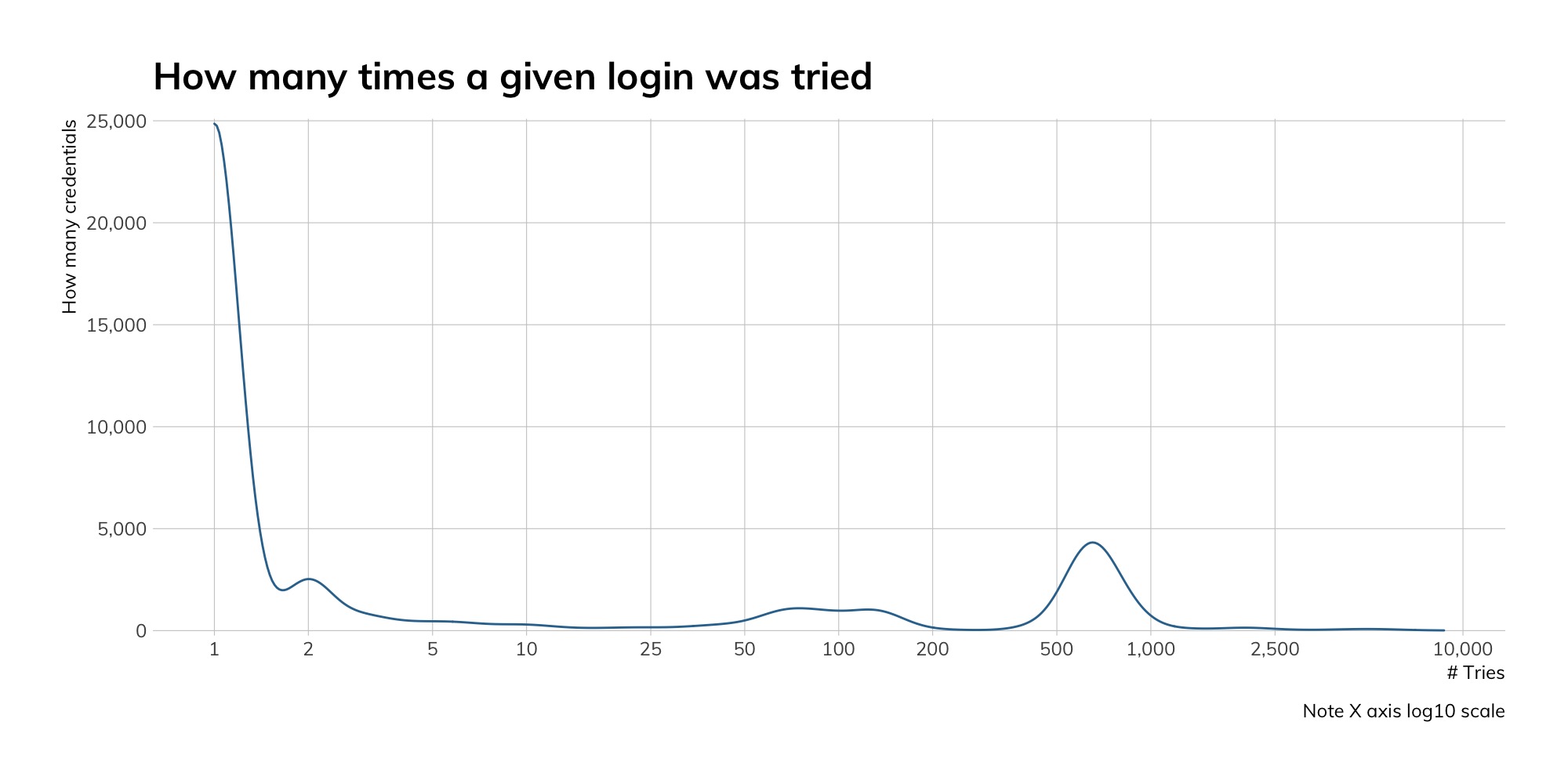

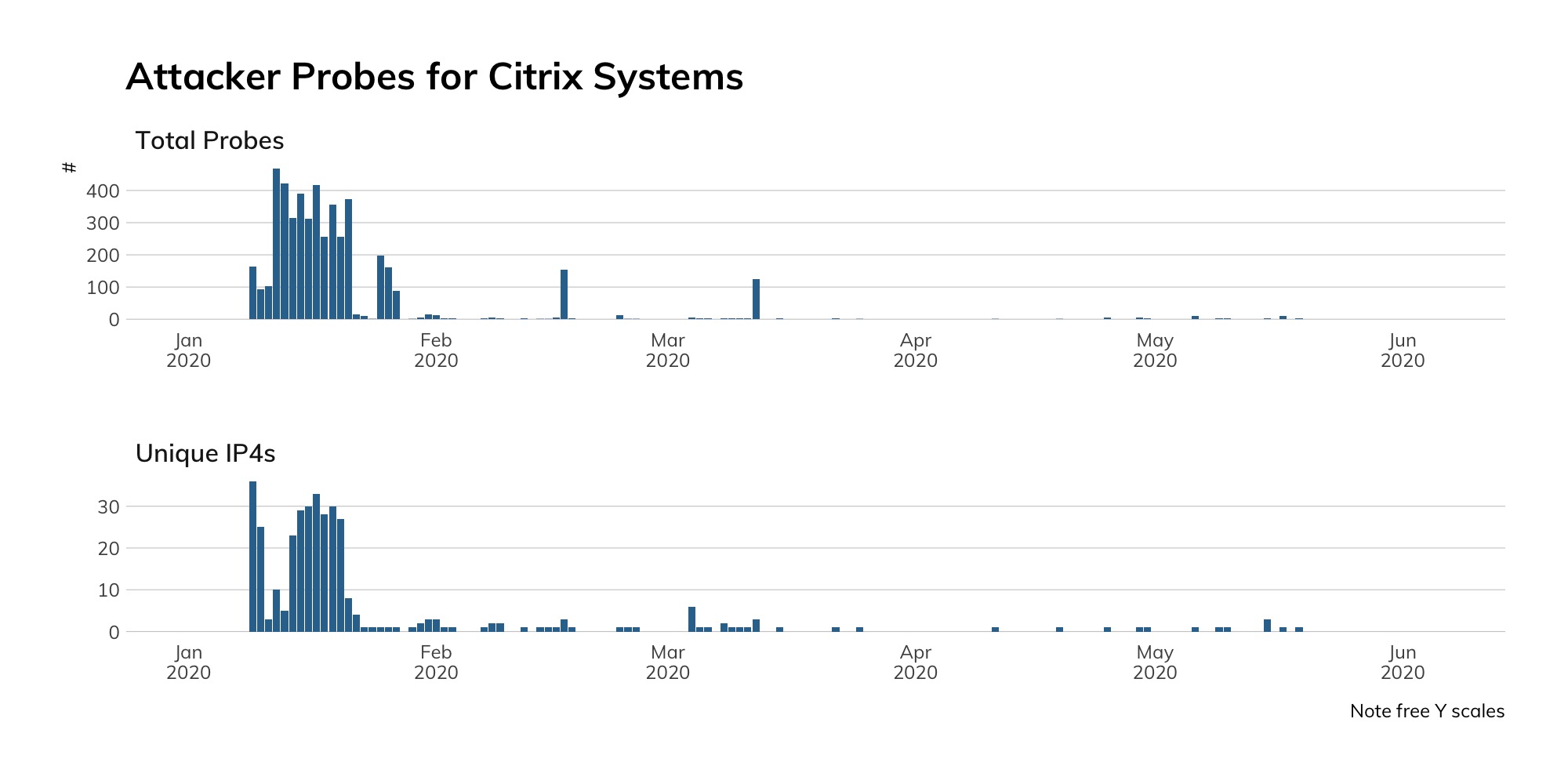

Judging from our honeypot network, attackers continue to actively seek out Telnet services, even four years after the Mirai attacks that took several hundred thousand offline. Approximately 90% of the traffic shown below represents basic access attempts using common usernames and passwords. The spikes represent concentrated credential stuffing attacks, using new username and password combinations pulled from publicly available dumps of other credentials.[8]

Our Advice

IT and IT security teams should prohibit Telnet traffic both to and from their networks, both through blocking standard Telnet ports and through deep packet inspection (DPI) solutions, whenever possible. They should continuously monitor their network for Telnet services, and track down offending devices and disable Telnet connectivity. There is no reason to expose Telnet to the modern internet, ever. In the rare case a network operator wants someone to log in to a text-based console over the internet, a well-maintained SSH server will be far more reliable, flexible, and secure.
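As a rough illustration of the "continuously monitor" advice, the sketch below checks a list of hosts for a listener on TCP/23 using only the Python standard library. The function names and the idea of a flat host inventory are our own assumptions for illustration. An open port is only a hint that Telnet may be present, not proof, and this is no substitute for a real scanner or DPI. Only point it at address space you are authorized to test.

```python
import socket


def tcp_port_open(host: str, port: int = 23, timeout: float = 2.0) -> bool:
    """Return True if a TCP connection to host:port can be established."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Refused, timed out, unreachable, or unresolvable: treat as closed.
        return False


def find_telnet_candidates(hosts, port: int = 23, timeout: float = 2.0):
    """Return the subset of hosts that accept connections on the Telnet port."""
    return [host for host in hosts if tcp_port_open(host, port, timeout)]
```

Calling `find_telnet_candidates(inventory)` on a schedule, where `inventory` is your own list of addresses, yields the devices to track down. Passing a different `port` covers Telnet hidden on non-standard ports.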

Cloud providers should operate similarly to the above organizations. They should, by default, prohibit all Telnet traffic through automatic, technical means. It's possible that some customers are relying on Telnet today, but those customers should be gently, but firmly, moved to SSH-based alternatives. After all, Telnet clients are not available by default on modern versions of Windows, macOS, or Ubuntu Linux for a reason, so it would seem that few future clients will suffer from its absence on their hosted machines.

Government cybersecurity agencies should actively pursue those ISPs that are offering an egregious amount of Telnet access to their core network operations hardware, and give those operators a stern talking-to, along with migration documentation on how to set up SSH, if needed. ISPs, at this point, should know better.

Secure Shell (SSH) (TCP/22)

It’s got “secure” right in its name!

Discovery Details

Secure Shell, commonly abbreviated to SSH, was designed and deployed in 1995 as a means to defend against passive eavesdropping on authentication that had grown common against other cleartext protocols such as Telnet, rlogin, and FTP. While it is usually thought of as simply a cryptographically secure drop-in replacement for Telnet, the SSH suite of applications can be used to secure or replace virtually any protocol, either through native applications like SCP for file transfers, or through SSH tunneling.

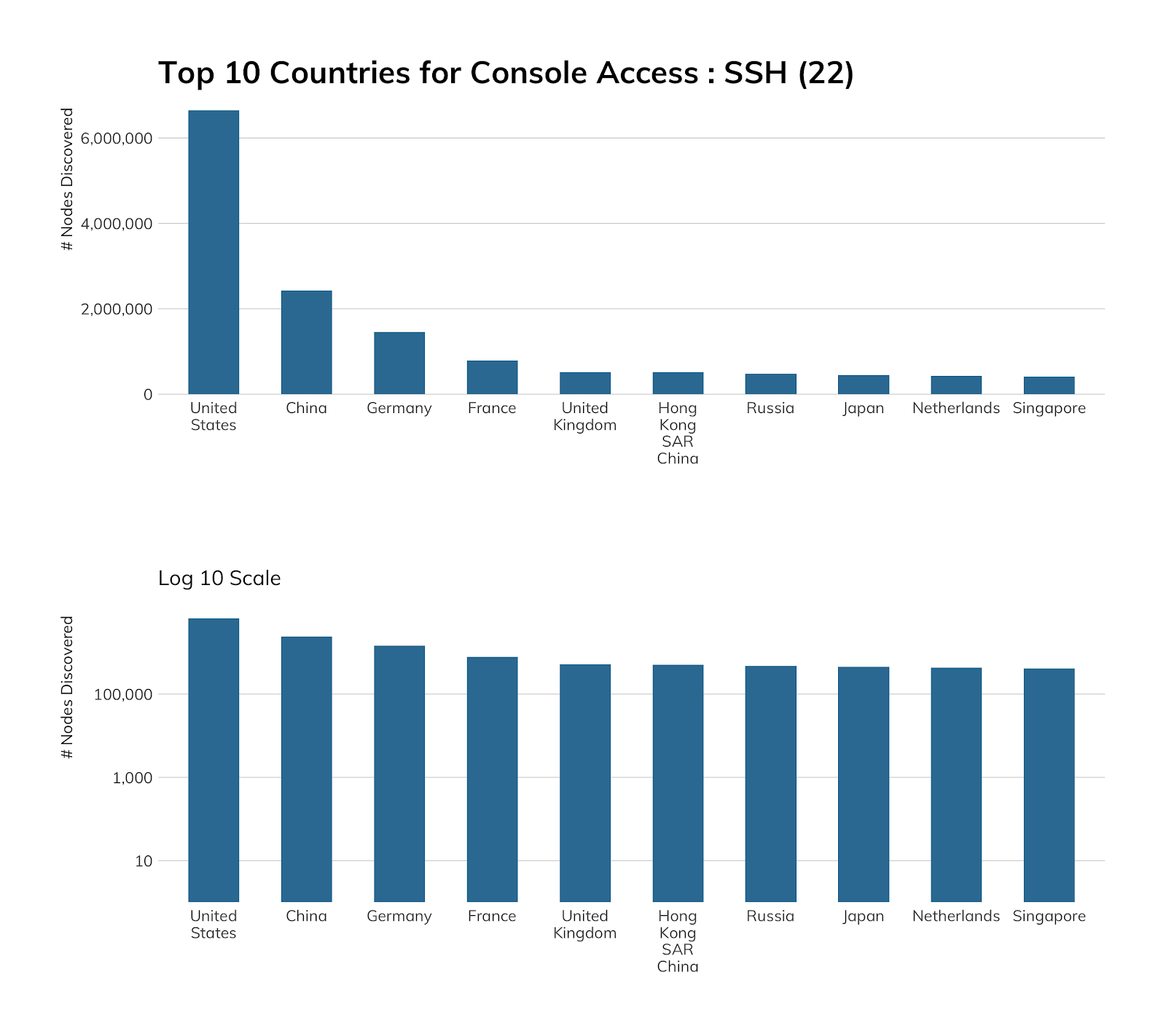

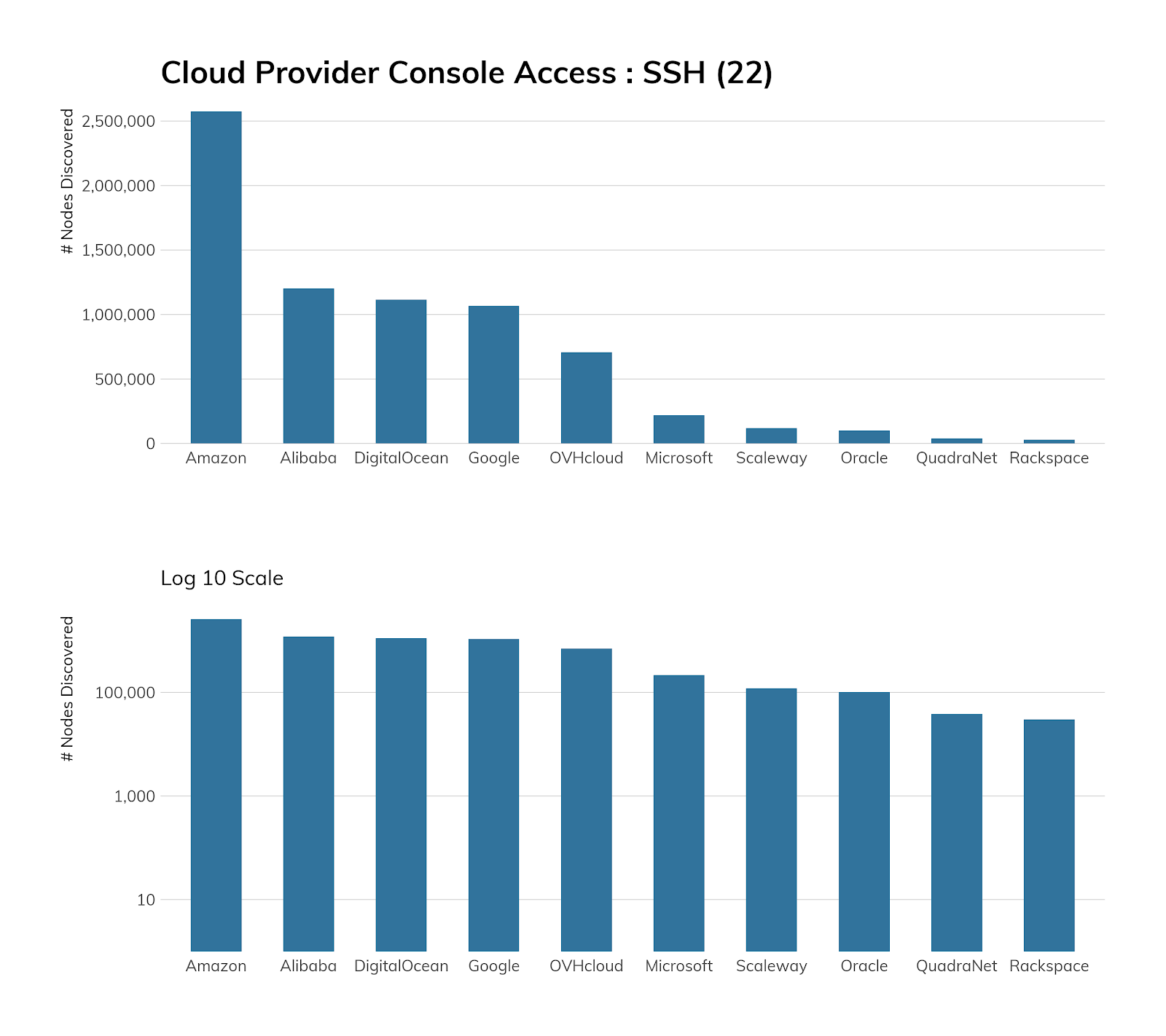

One of the bright spots of this report is the fact that SSH deployment has now outpaced Telnet exposure at a rate of six to one. It seems the world has gotten the message: while direct console access from the internet might not be the wisest move in the world, about 85% of those exposed shells are secure shells, which takes whole classes of eavesdropping, spoofing, and in-transit data manipulation attacks off the table. Good job, internet, and especially the American network operators around the country: the United States exposes SSH servers (6.6 million) and Telnet servers (a mere 232,000) at a ratio of 28:1. Compare this to the 3:1 ratio in China, which is 2.4 million SSH servers to 734,161 Telnet servers. Given that SSH provides both console access and the capability of securing other protocols, whereas Telnet is used almost exclusively for console access, that United States ratio is pretty outstanding.
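For readers who want to check the arithmetic, the ratios and the "about 85%" figure follow directly from the counts quoted above. This is a small worked example using the rounded numbers from the text, so the results are approximate. The variable names are ours.

```python
# Rounded counts quoted in the text above.
us_ssh, us_telnet = 6_600_000, 232_000
cn_ssh, cn_telnet = 2_400_000, 734_161

us_ratio = us_ssh / us_telnet   # roughly 28.4 SSH servers per Telnet server
cn_ratio = cn_ssh / cn_telnet   # roughly 3.3 SSH servers per Telnet server

# A global 6:1 SSH-to-Telnet ratio means 6 of every 7 exposed shells are SSH.
secure_share = 6 / (6 + 1)      # roughly 0.857, i.e. about 85%

print(f"US ratio   : {us_ratio:.1f}:1")
print(f"China ratio: {cn_ratio:.1f}:1")
print(f"SSH share at 6:1: {secure_share:.1%}")
```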

Exposure Information

Being more complex and making more explicit security guarantees, SSH is not without its own vulnerabilities. Occasionally, traditional stack-based buffer overflows surface as with other network applications written in memory-unsafe languages. In addition, new vulnerabilities in implementations tend to present themselves in non-default combinations of configuration options, or are surfaced in SSH through vulnerabilities in the cryptographic libraries used by SSH to ensure secure communications. Most commonly, though, vulnerabilities in SSH are associated with unchangeable, vendor-supplied usernames, passwords, and private keys that ship with IoT devices that (correctly) have moved away from Telnet. This is all to say that Secure Shell is not magically secure just due to its use of cryptography. Password reuse is weirdly common in SSH-heavy environments, so protecting passwords and private keys is an important part of maintaining a truly secure SSH-based infrastructure.

As mentioned above, administrators and device manufacturers alike are strongly encouraged to adopt the open, free standards of SSH over their cleartext counterparts whenever possible. IoT, OT, and ICS equipment, in particular, is often cited as not having enough local resources to deal with the cryptographic overhead of running secure services, but if that is actually the case, these devices should never be exposed to an internet-connected network in the first place. Likewise, it is not enough to simply move insecure practices such as default, reused passwords from a cleartext protocol to a “secure” protocol; the security offered by cryptography is only as good as the key material in use.

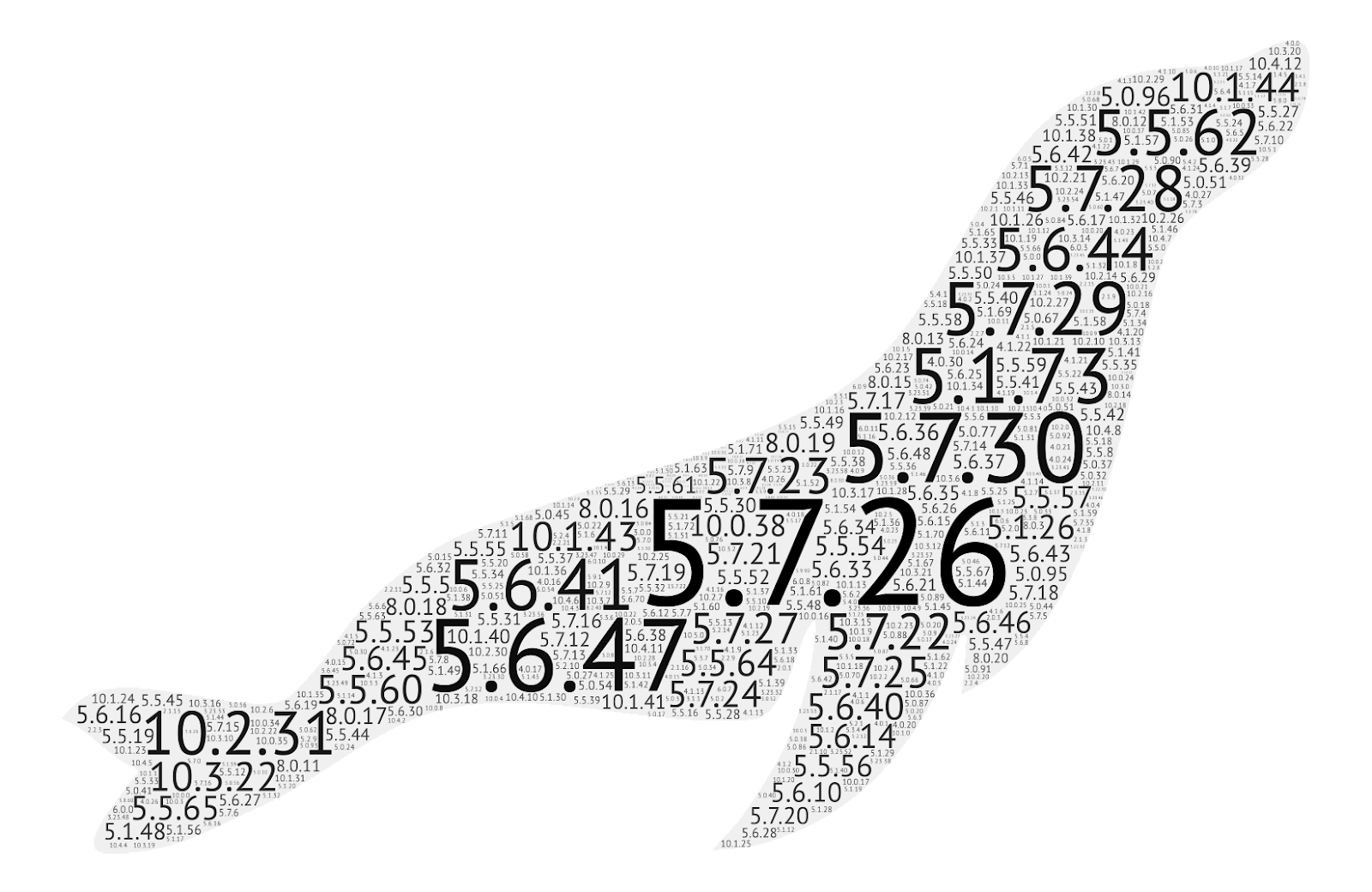

Of the SSH services discovered on the internet, the below table accounts for well over 99.9% of all offerings (only those fingerprintable services with a count of at least 1,000 are shown here).

Attacker’s View

SSH provides console access in a better way than Telnet, but it is still just a piece of software with many features, including one that drops you to a command prompt after a successful login, so attackers perform credential stuffing (which can include using stolen certificates, too, for SSH) and vulnerability exploits against the service itself. As such, we’d be just repeating much of the same content as we did in Telnet (and we value your time too much to do that).

What we can do is focus more on two things: vulnerabilities associated with exposed SSH services and how much information exposed SSH services give to attackers.

The most prevalent version of OpenSSH is version 7.5. It turns four years old in December and has nine CVEs. Every single version in the top 20 has between two and 32 CVEs, meaning there’s a distinct lack of patch management happening across millions of systems exposed to the cold, hard internet.

You may be saying, “So what?” (a question we try to ask ourselves regularly when we opine about exposure on the internet). Another exposure view may help answer said question:

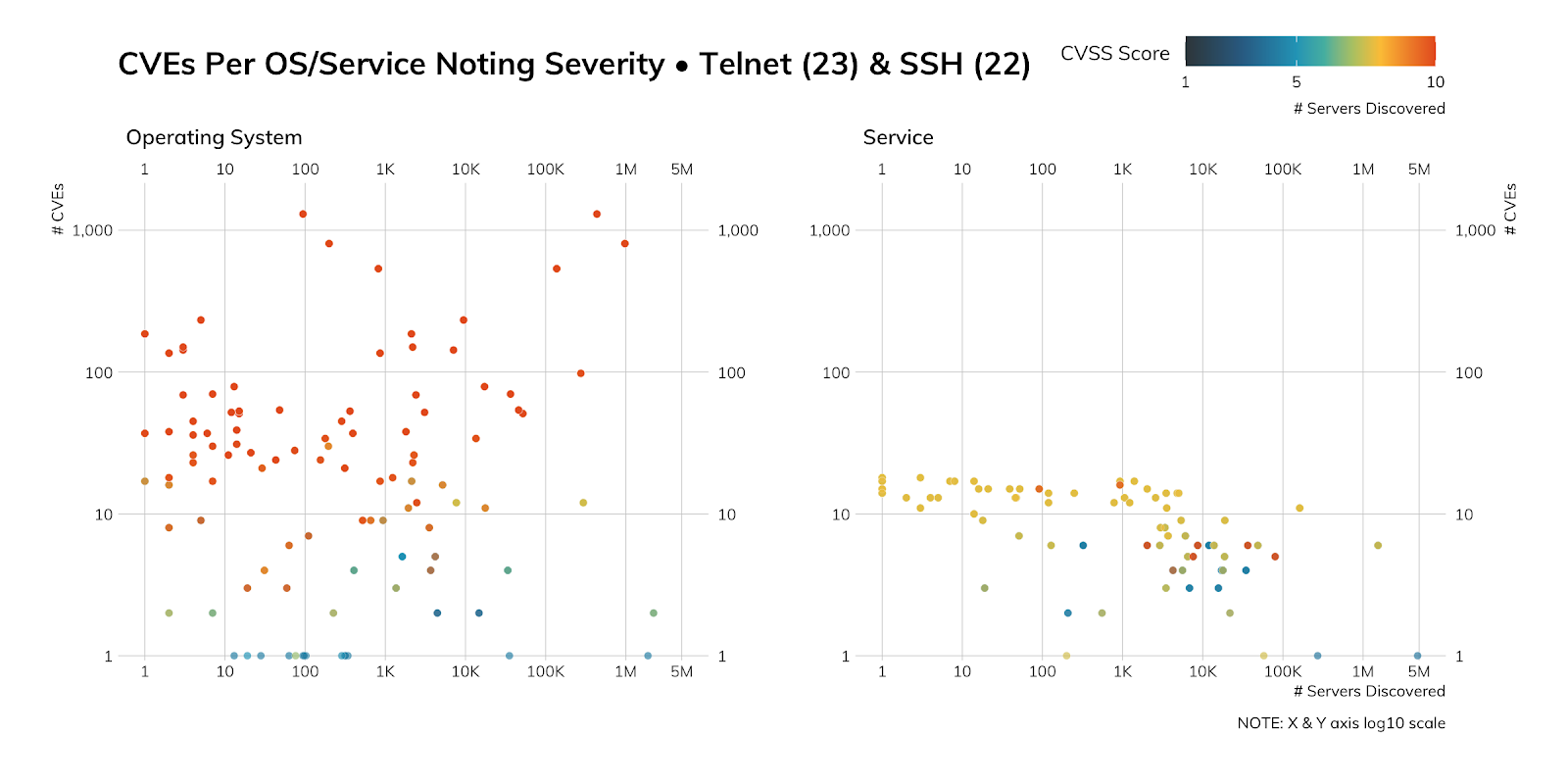

The above figure packs quite a bit of information into it, so let’s dig in as we hit our second point (how much information exposed SSH services[9] give to attackers).

The left panel says “Operating Systems,” which means we can figure out which operating system is in use just from the data we get back from our Sonar SSH connections (enumerated below). Each dot represents one operating system version, the position on the X axis represents how many servers we found, and the position on the Y axis represents the number of CVEs that have been assigned to it. The color represents the highest severity. There is quite a bit of CVSS 8+ on that chart, which means there are quite a few high-priority vulnerabilities on those systems (at the operating system level).

The right panel is the same, just for SSH versions (one of which we enumerated above). The count difference is due to both recog coverage[10] and the fact that a single operating system version can run different versions of SSH, so the aggregation up to operating system category will be higher. There are fewer major vulnerabilities within the SSH services but there are more above 7 than below, which means they’re also pretty high-priority.

Adversaries can use this information to choose an attack path and map vulnerabilities to exploits to add to their arsenal. Overall, we’d say attackers could have quite a bit of fun with this particular SSH corpus.
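To make the information leak concrete: an SSH server announces an identification string of the form `SSH-protoversion-softwareversion comments` before any authentication happens, and that single line often names both the SSH implementation and the operating system packaging. The sketch below parses such a line. It is a simplified illustration and not how Sonar or recog does its fingerprinting. The banner strings in the usage note are example values, and the `SshBanner` type is our own invention.

```python
import re
from typing import NamedTuple, Optional


class SshBanner(NamedTuple):
    proto: str               # e.g. "2.0"
    software: str            # e.g. "OpenSSH_7.5"
    comment: Optional[str]   # free-form text, often an OS package hint


# SSH-<protoversion>-<softwareversion>[ <comments>]
_BANNER_RE = re.compile(
    r"^SSH-(?P<proto>[^-\s]+)-(?P<software>\S+)(?: (?P<comment>.*))?$"
)


def parse_ssh_banner(line: str) -> Optional[SshBanner]:
    """Parse one SSH identification line; return None if it does not look like SSH."""
    match = _BANNER_RE.match(line.rstrip("\r\n"))
    if match is None:
        return None
    return SshBanner(match["proto"], match["software"], match["comment"])
```

For example, `parse_ssh_banner("SSH-2.0-OpenSSH_7.6p1 Ubuntu-4ubuntu0.3\r\n")` yields the software version `OpenSSH_7.6p1` and the comment `Ubuntu-4ubuntu0.3`, which is enough for an attacker to start looking up CVEs for both.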

| OS | Version | Count | OS | Version | Count | OS | Version | Count |
|---|---|---|---|---|---|---|---|---|
| Ubuntu | 18.04 | 2,220,456 | Debian | 9 | 975,722 | FreeBSD | 11.2 | 23,503 |
| Ubuntu | 16.04 | 1,890,981 | Debian | 8 | 436,149 | FreeBSD | 12 | 19,360 |
| Ubuntu | 14.04 | 533,725 | Debian | 10 | 274,752 | FreeBSD | 9 | 17,646 |
| Ubuntu | 19.1 | 52,026 | Debian | 7 | 137,363 | FreeBSD | 11.1 | 4,687 |
| Ubuntu | 12.04 | 48,705 | Debian | 6 | 46,054 | FreeBSD | 9.2 | 4,193 |
| Ubuntu | 19.04 | 36,451 | Debian | 7.8 | 38,543 | FreeBSD | 8.1 | 3,681 |
| Ubuntu | 10.04 | 16,757 | Debian | 4 | 17,279 | FreeBSD | 7.1 | 2,468 |
| Ubuntu | 17.1 | 9,467 | Debian | 5 | 13,526 | FreeBSD | 10.4 | 2,179 |
| Ubuntu | 15.04 | 7,086 | Debian | 3.1 | 3,089 | FreeBSD | 8.3 | 1,939 |
| Ubuntu | 17.04 | 5,183 | Debian | 11 | 1,361 | FreeBSD | 8.4 | 1,627 |
| Ubuntu | 16.1 | 3,536 | Debian | 3 | 362 | FreeBSD | 10 | 937 |
| Ubuntu | 8.04 | 3,314 | | | | FreeBSD | 8 | 656 |
| Ubuntu | 12.1 | 2,408 | MikroTik | 2.9 | 419 | FreeBSD | 6 | 521 |
| Ubuntu | 11.04 | 2,276 | | | | FreeBSD | 4.9 | 312 |
| Ubuntu | 10.1 | 2,201 | vxWorks | 5.1.0p1 | 911 | FreeBSD | 5.3 | 155 |
| Ubuntu | 18.1 | 2,192 | vxWorks | 6.9.0 | 288 | FreeBSD | 4.11 | 110 |
| Ubuntu | 13.04 | 2,131 | vxWorks | 1.10.0 | 285 | FreeBSD | 4.7 | 74 |
| Ubuntu | 14.1 | 2,117 | vxWorks | 1.8.4 | 47 | FreeBSD | 5.5 | 63 |
| Ubuntu | 11.1 | 1,807 | vxWorks | 6.8.0 | 40 | FreeBSD | 4.8 | 43 |
| Ubuntu | 9.1 | 1,234 | vxWorks | 1.12.0 | 24 | FreeBSD | 5.2 | 21 |
| Ubuntu | 9.04 | 861 | vxWorks | 6.5.0 | 22 | FreeBSD | 5 | 14 |
| Ubuntu | 15.1 | 860 | vxWorks | 6.0.9 | 14 | FreeBSD | 4.6 | 14 |
| Ubuntu | 8.1 | 393 | vxWorks | 6.6.0 | 8 | FreeBSD | 5.1 | 11 |
| Ubuntu | 6.04 | 303 | vxWorks | 6.0.2 | 7 | FreeBSD | 4.5 | 7 |
| Ubuntu | 7.1 | 285 | vxWorks | 7.0.0 | 2 | FreeBSD | 4.3 | 6 |
| Ubuntu | 7.04 | 195 | | | | FreeBSD | 4.4 | 4 |
| Ubuntu | 5.1 | 29 | | | | | | |
| Ubuntu | 5.04 | 5 | | | | | | |

Our Advice

IT and IT security teams should be actively replacing cleartext terminal and file transfer protocols with SSH alternatives whenever and wherever they are discovered. If such replacements are impossible, those devices should be removed from the public internet.

Cloud providers should provide SSH as an easy default for any sort of console or file transfer access, and provide ample documentation on how customers can wrap other protocols in SSH tunnels with specific examples and secure-by-default configurations.

Government cybersecurity agencies should actively promote the use of SSH over unencrypted alternatives, especially when it comes to IoT. These organizations can also help encourage industrial use of SSH in areas where Telnet and FTP are still common. In addition, cybersecurity experts can and should encourage good key management and discourage vendors from shipping SSH-enabled devices with long-lived, default passwords and keys.

Measuring Exposure

File Sharing

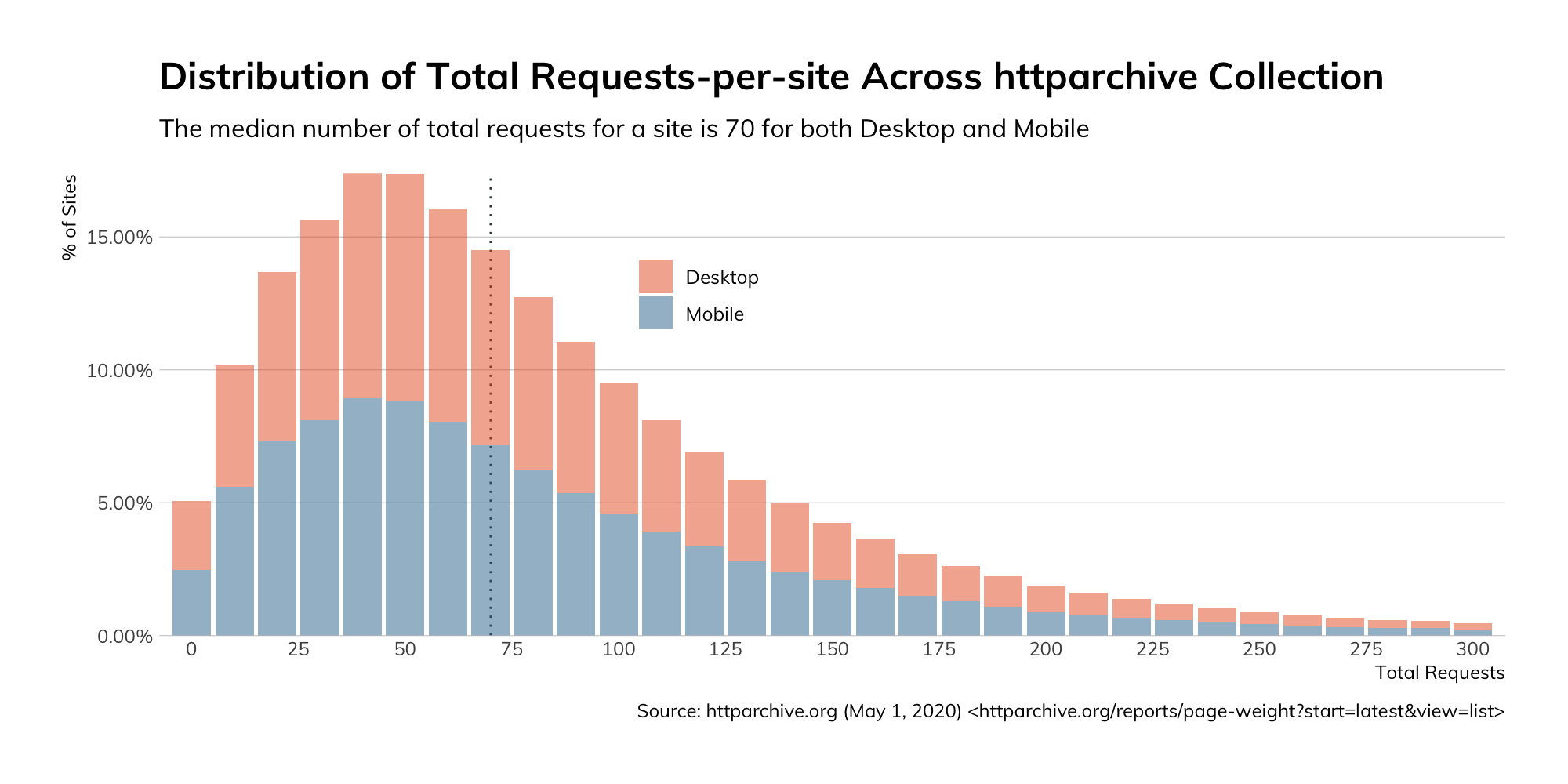

Once the internet patriarchs had console access, they needed a way to get files to and from their remote systems, and file sharing constitutes the majority of modern internet traffic, whether it be encoded streams of movies to our devices, viewing far too many cat pictures, or interacting with websites, which download an average of about 70 files each time you visit an uncached page.[11]

Requests for web resources are not the only type of file sharing on the modern internet. For our report, we looked at three other services under this broad category of “File Sharing”:

- File Transfer Protocol (FTP) (TCP/21) and “Secure” FTP (TCP/990)

- Server Message Block (SMB) (TCP/445)

- Rsync (TCP/873)

Each of these services has its own, unique safety issues when it comes to exposing it on the internet, and we’ll start this breakdown with both types of FTP.

FTP (TCP/21)

A confusing data channel negotiation sequence and totally cleartext—what could go wrong?

Discovery Details

FTP (the TCP/21 variant) dates back to 1972 and was intended “to satisfy the diverse needs of users of maxi-HOSTs, mini-HOSTs, TIPs, and the Datacomputer, with a simple, elegant, and easily implemented protocol design.”[12] It is a cleartext, short command-driven protocol that has truly outlived its utility, especially now that the two main browser developers are dropping or have dropped support for it.[13]

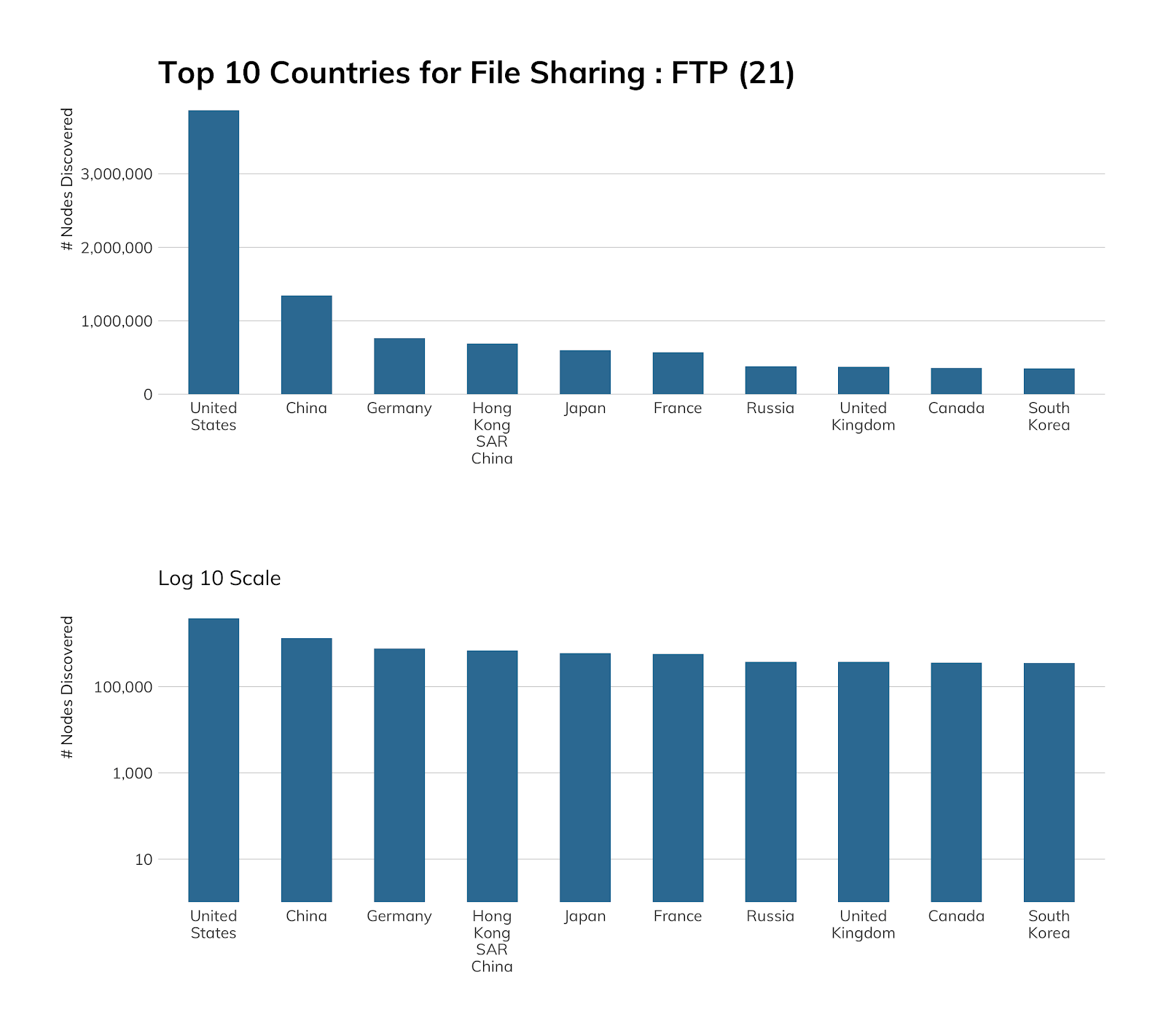

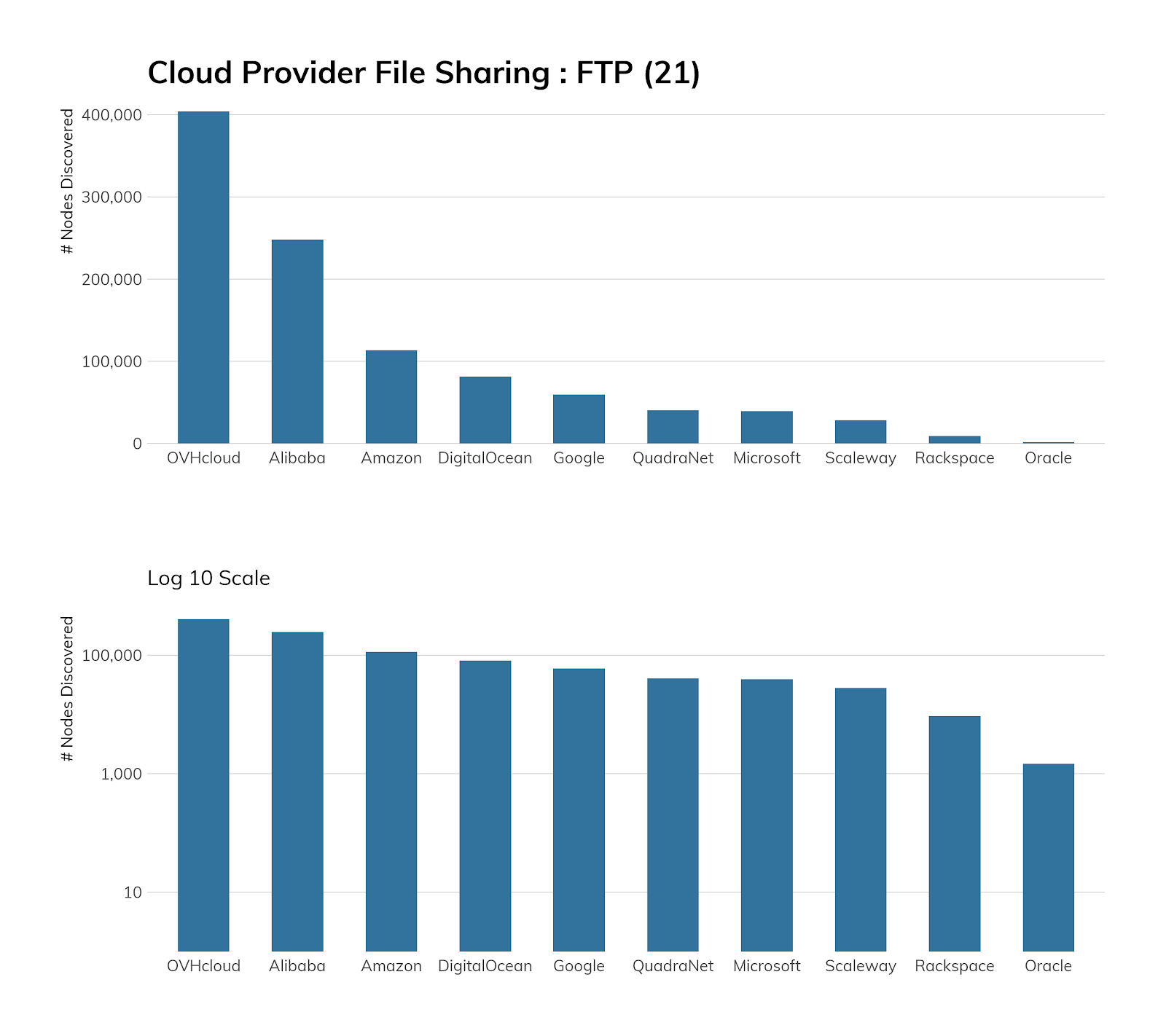

Project Sonar found just under 13 million active FTP sites (12,991,544), which is down from over 20 million from our previous National Exposure Index study. The United States leads the pack when it comes to exposing FTP to the internet with ~3.9 million hosts awaiting your OPEN.

Somewhat surprisingly, there is a large FTP presence in cloud environments, with Alibaba, Amazon, OVH, and Azure hosting around 13.5% of discovered nodes.

When we looked into this a bit more, we discovered that Alibaba generously provides detailed documentation on how to “Build an FTP site” on both Linux[14] and Windows,[15] and that Amazon has a brand-new FTP service offering.[16] Similarly, both OVH[17] and Microsoft[18] have documentation on FTP, and OVH goes so far as to actively encourage customers to use it to manage files for their web content.

Furthermore, all the providers have base images that either ship with FTP available or make it easily installable (via the aforementioned helpful documentation!), so the extent of FTP exposure in cloud environments should really come as no surprise.

Exposure Information

In 2020, there is a single acceptable use of FTP, which is providing an anonymous FTP[19] download service of more or less public data. In this application, and only this application, cleartext credentials won't be exposed (but data in transit will be). Any other use should be considered insecure and unsafe, and should be avoided. Even this use case is edging into unacceptable territory, since FTP doesn't provide any native checksumming or other error checking on file transfers, making man-in-the-middle alterations of any data in transit fairly trivial.
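Because FTP itself offers no integrity check, anyone who must pull public data over anonymous FTP can at least compare the download against a digest published through a separate, trusted channel. The sketch below assumes the publisher provides a SHA-256 hex digest over HTTPS. That assumption, and the function names, are ours. If the digest travels over the same cleartext path as the file, it proves nothing.

```python
import hashlib
import hmac
import io


def sha256_of_stream(stream, chunk_size: int = 65536) -> str:
    """Hash a binary file-like object in chunks and return the hex digest."""
    digest = hashlib.sha256()
    for chunk in iter(lambda: stream.read(chunk_size), b""):
        digest.update(chunk)
    return digest.hexdigest()


def matches_published_digest(stream, expected_hex: str) -> bool:
    """Compare the stream's SHA-256 with a digest obtained out of band."""
    actual = sha256_of_stream(stream)
    return hmac.compare_digest(actual, expected_hex.strip().lower())


# Usage with in-memory stand-ins for a downloaded file and its published digest.
payload = b"example public dataset"
published = hashlib.sha256(payload).hexdigest()
print(matches_published_digest(io.BytesIO(payload), published))
print(matches_published_digest(io.BytesIO(payload + b"!"), published))
```

In practice the stream would be the downloaded file opened with `open(path, "rb")`.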

That said, there are millions of FTP servers on the internet. We're able to fingerprint several,[20] and Table 11 illustrates with fairly high fidelity the state of the FTP universe, authenticated and anonymous alike.

Yes, you read that last line of the table correctly: 166 printers are exposing their FTP service to the internet. Perhaps more disconcerting are 3,401 Ubiquiti Unified Security Gateway (firewalls) with FTP exposed.

Fourteen of the FTP server types make it somewhat easy to fingerprint version numbers, and vsFTPd, ProFTPD, Bftpd, and FileZilla account for 95% of that corpus (which is ~2.1 million FTP servers, i.e., ~17% of exposed systems). We’ve put the top five versions of each below along with some commentary, as including the entire version summary table for each would take a few reams of e-paper.

| vsFTPd | Count |
|---|---|
| 3.0.2 | 221,481 |
| 3.0.3 | 165,776 |
| 2.2.2 | 164,761 |
| 2.0.5 | 43,347 |
| 2.3.5 | 16,598 |

Version 3.0.3 is current and appears safe from an RCE perspective, but 3.0.2 has one moderate remote exploit CVE.[21] Version 2.2.2 dates back to 2011 and has a DoS CVE.[22] Version 2.0.5 harkens back to 2008 with an edgy, remote (authenticated) CVE.[23]

| ProFTPD | Count |
|---|---|
| 1.3.5a | 118,672 |
| 1.3.5b | 100,941 |
| 1.3.4a | 59,089 |
| 1.3.5 | 57,364 |
| 1.3.5e | 45,756 |

Three of the most prevalent versions of ProFTPD (1.3.5, 1.3.5a, and 1.3.5b) just happen to have a super-dangerous CVE[24] that allows remote attackers to read and write arbitrary files. Version 1.3.4a has a nasty DoS CVE,[25] followed by 1.3.5e, with a low-severity CVE[26] that gives authenticated attackers the ability to reconfigure directory structures under certain configurations.

| Bftpd | Count |
|---|---|
| 2.2 | 310,906 |
| 3.8 | 90,088 |
| 1.6.6 | 12,733 |
| 4.4 | 1,725 |
| 2.2.1 | 1,713 |

Version 2.2 (which turns 11 years old in November) has a handy DoS CVE,[27] with the remaining ones having a memory corruption CVE[28] exploitable by logged-in users.

| FileZilla | Count |
|---|---|
| 0.9.60 beta | 190,673 |
| 0.9.41 beta | 138,349 |
| 0.9.46 beta | 9,500 |
| 0.9.59 beta | 9,030 |
| 0.9.53 beta | 8,017 |

Shockingly, the top 5 FileZilla versions are CVE-free, but are incredibly old.

Attacker’s View

Project Heisenberg does not have an FTP honeypot, but we do capture all connection attempts on TCP/21, and there are often quite a few:

This activity includes attackers looking for an exploitable service on a node they can add to their C2 inventory, or both attackers and researchers searching for documents they can plunder. In either scenario, you’re much better off not giving them one more system to conquer.

Our Advice

IT and IT security teams should avoid using FTP (TCP/21) both internally and externally. This cleartext protocol is inherently unsafe, and there are far safer and just better modern alternatives. For system-to-system file transfers, the “S” (FTPS/SFTP) versions of FTP or (preferably) SCP are all safer and often faster replacements that have widespread client and server support. For more interactive use, there are many commercially available or open source file sharing solutions that work over HTTPS. If you truly need to FTP firmware updates to infrastructure components, you should at the very least ensure those endpoints are not internet-facing and consider putting them behind an internal VPN gateway to keep prying eyes from seeing those credentials.

FTP should also be flagged during vulnerability sweeps and in public attack surface monitoring. During procurement processes, teams should ask vendors whether their solutions support, include, or require FTP, and use that information to help pick safer products and services.

Cloud providers should discourage use of FTP by only providing base images that do not come with FTP installed or enabled by default, rather than providing friendly and helpful documentation on how to set up a woefully insecure service. Service documentation and other instructional materials should actively discourage the use of FTP, and providers should consider performing regular service scans with associated notifications to customers when they find FTP exposed. If possible, the FTP protocol itself and/or TCP/21 should be blocked by default and definitely not be part of a standard SaaS offering.

Government cybersecurity agencies should provide guidance on the dangers of FTP with suggestions for alternative services. As noted in the Exposure section, there are unauthenticated RCE vulnerabilities in a sizable population of internet-facing FTP systems, making them choice targets for attackers and an inherent weakness in public infrastructure of each country with exposed FTP services.

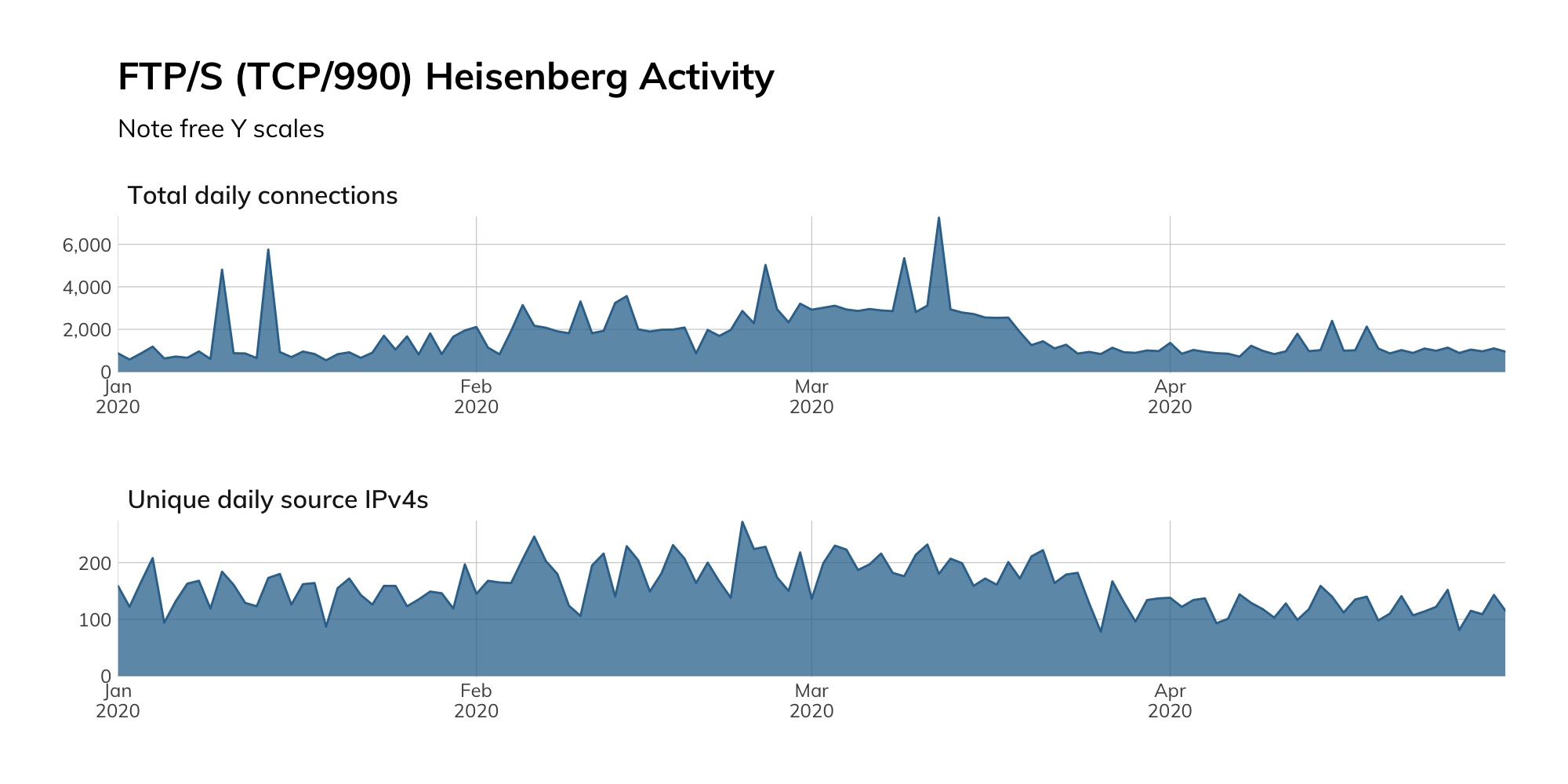

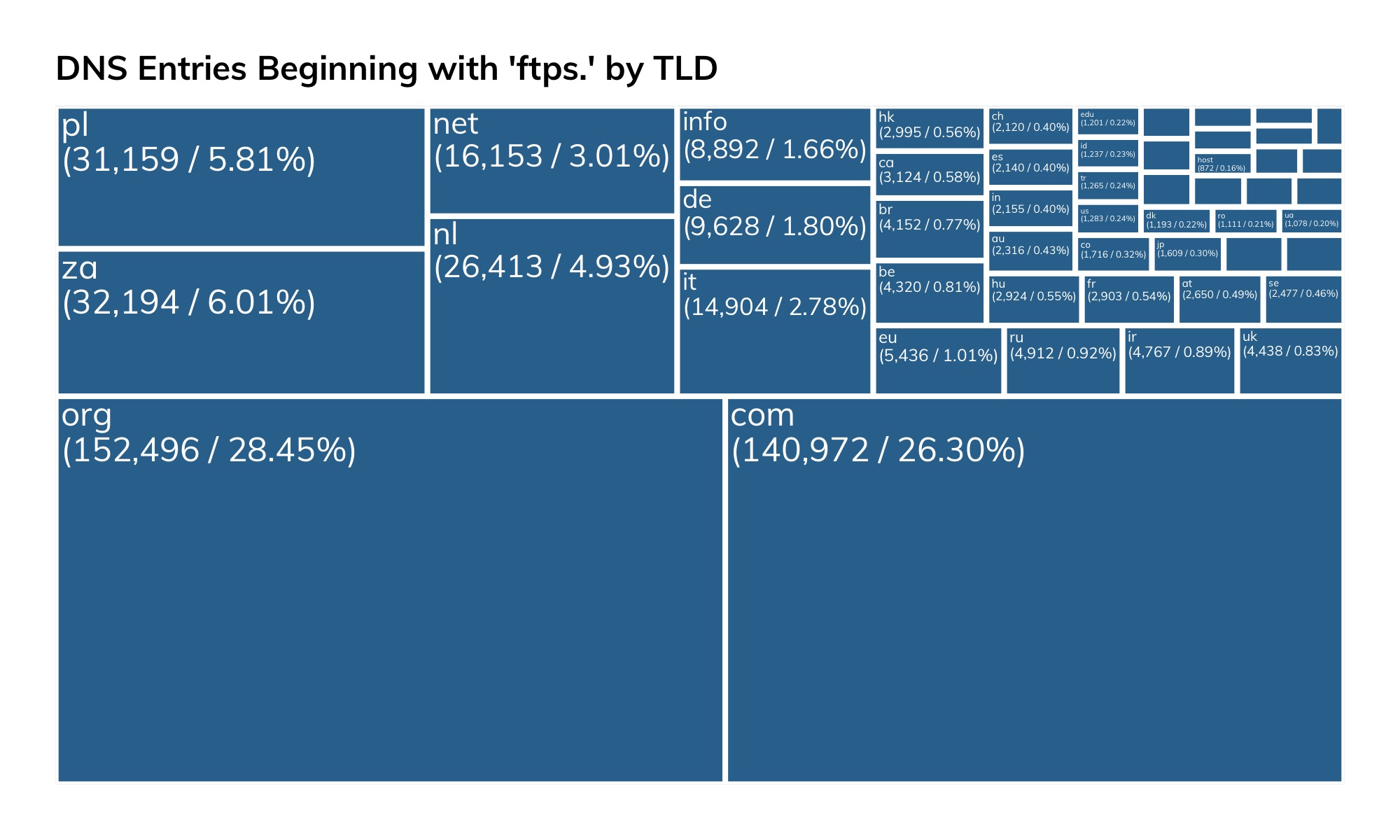

FTP/S (TCP/990)

Discovery Details

FTP/S (the TCP/990 variant) dates back to 1997 and was intended to resolve the outstanding security issues with the FTP protocol[29] described in the previous section. It is, in essence, a somewhat more secure version of FTP. Unfortunately, it only makes logins safer, as file transfers are still performed in cleartext and without any sort of protection from eavesdropping or modification in transit. One other benefit is that FTP/S servers can assert their identities with cryptographic certainty—much like how HTTPS servers can—but this assertion relies on clients actually checking the certificate details and doing the right thing when certificate identification fails.

Given that FTP/S is almost exactly like its insecure cousin but safer to use, you’d think there would be widespread adoption of it. Alas, you would be wrong. Project Sonar barely found half a million nodes on the public internet compared to millions of plain ol’ FTP servers. A possible big reason for this is the need to generate, install, and periodically update TLS certificates, a process for FTP/S that is not as simple as it is these days for HTTPS.[30]

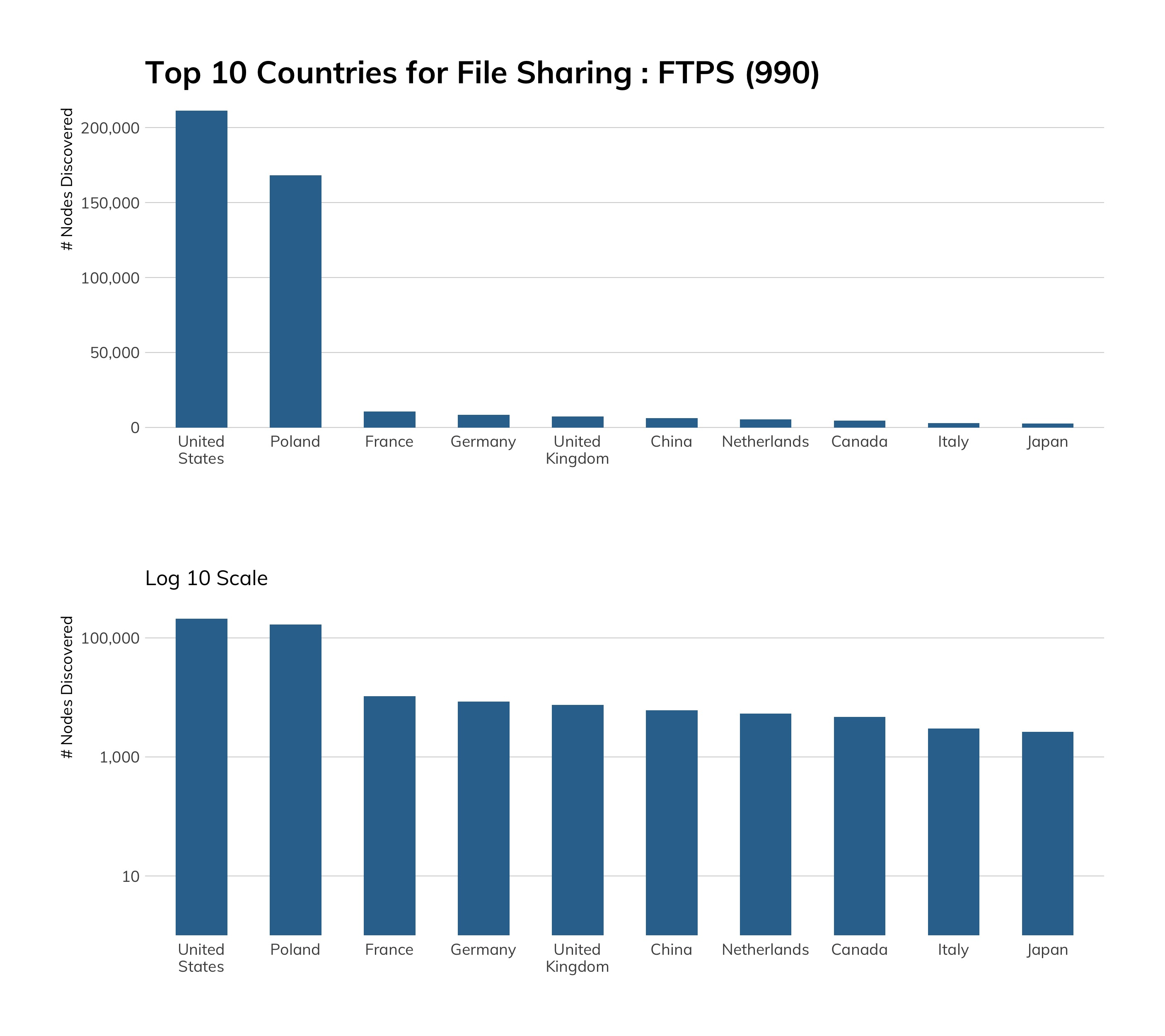

There are high concentrations of FTP/S in the U.S. (mostly due to heavy prevalence in Azure, which we’ll cover in a bit), but also in Poland due to the home.pl hosting provider, which goes out of its way[31] to get you to use it over vanilla FTP—a laudable documentation effort we would love to see other cloud providers strive toward.

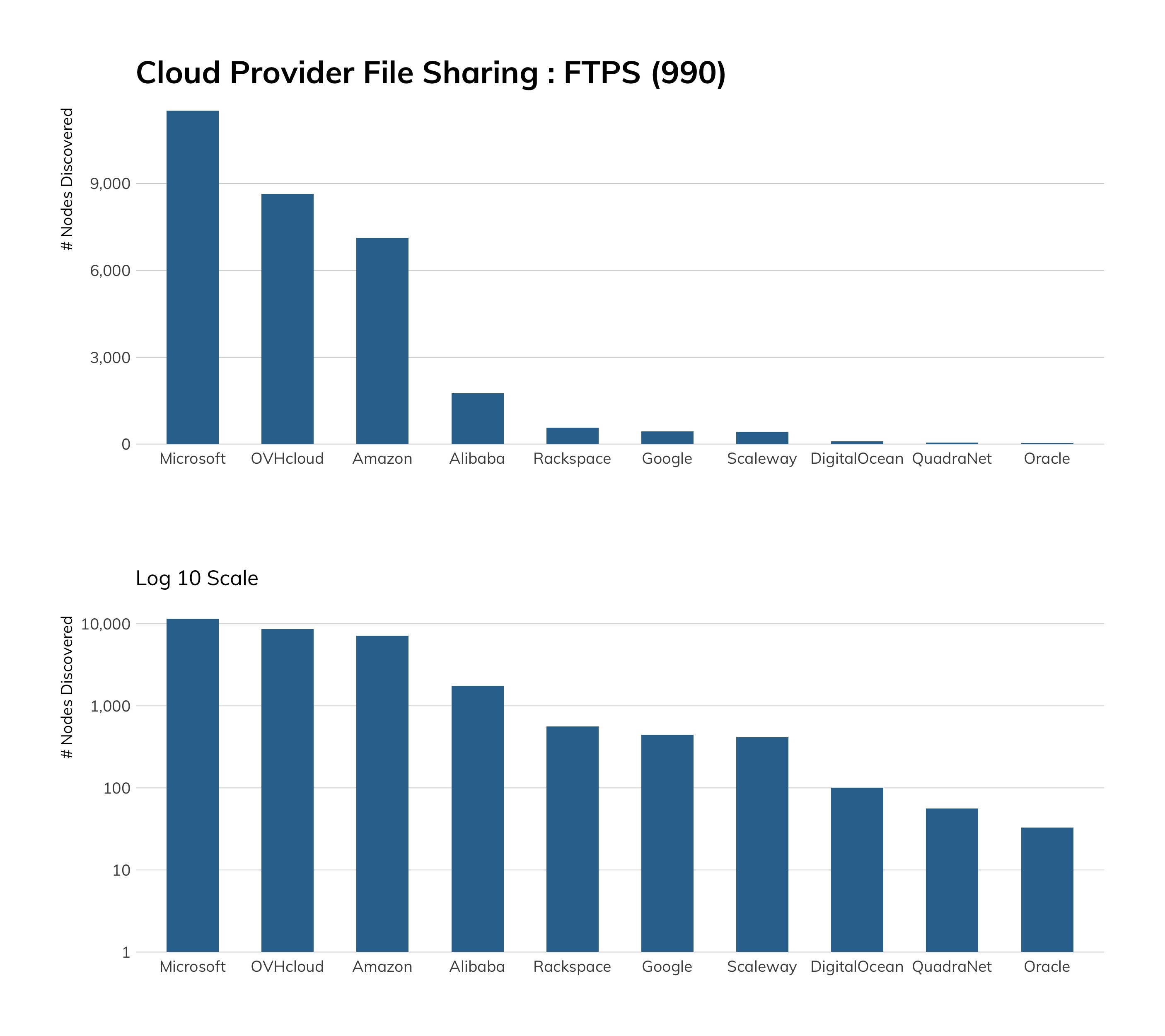

Unfortunately, home.pl didn’t expose sufficient nodes to make it into the top 10 cloud providers we used across all studies in this report, but they’re doing the right thing, as is Microsoft. Along with the aforementioned FTP documentation, they also have dedicated FTP/S setup and use instructions,[32] and it’s fairly trivial to enable FTP/S in IIS, as the table in the Exposure section hints at.

For all this effort, though, users are likely using SCP instead of FTP/S, since SCP does, in fact, encrypt the contents of files in transit as well as login credentials.

Exposure Information

Recog was only able to fingerprint 84,607 (18%) of the available FTP/S services, but IIS “wins” this time, as there are explicit configuration parameters available for FTP/S and there is a GUI for requesting and installing certificates.

Since many FTP/S servers are both FTP and FTP/S servers, the same vulnerabilities apply. We’ll be giving IIS a full workout in the Web Servers section later on in the report.

Attacker’s View

FTP/S is not used much, and that’s generally a sign it’s not going to get much love from either attackers or researchers, a posit that our Project Heisenberg activity reinforces (like FTP, we do not have a high-interaction honeypot for FTP/S, so raw total and unique time series counts are used as a proxy for attacker and researcher activity):

Beyond finding FTP/S exposed on the default port, attackers will also have no trouble finding these systems by DNS entries, given just how many entries there are for ftps.….tld in public DNS:

Our Advice

IT and IT security teams should follow the advice given in the FTP section, since most of it still applies. If you are always sending pre-encrypted files or the content you are uploading/downloading is not sensitive (and, you’re not worried about person-in-the-middle snooping or alteration), you may be able to get away with using FTP/S a bit longer.

Cloud providers should follow home.pl’s example and be explicit about what FTP/S can and cannot do for file transfer safety. While there may be some systems that are reliant on FTP/S, cloud providers should be actively promoting total solutions, like SCP and rsync over SSH.

Government cybersecurity agencies should provide guidance on what FTP/S can and cannot do for file transfer safety and provide suggestions for alternative services. As noted in the FTP Exposure section (which applies also to FTP/S), there are unauthenticated RCE vulnerabilities in this small-ish population of internet-facing FTP/S systems, making them available targets for attackers and an inherent weakness in public infrastructure of each country with exposed FTP/S services.

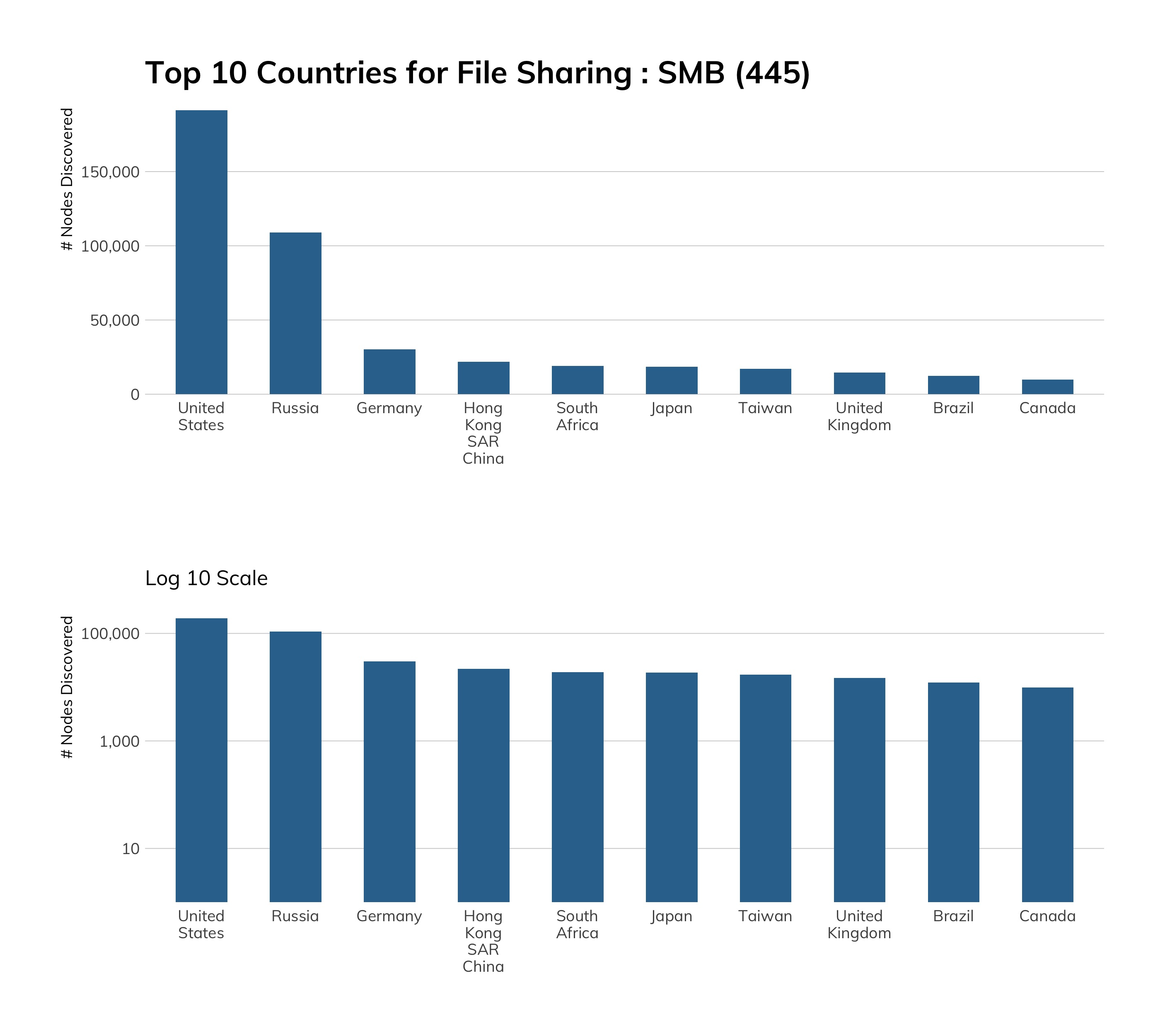

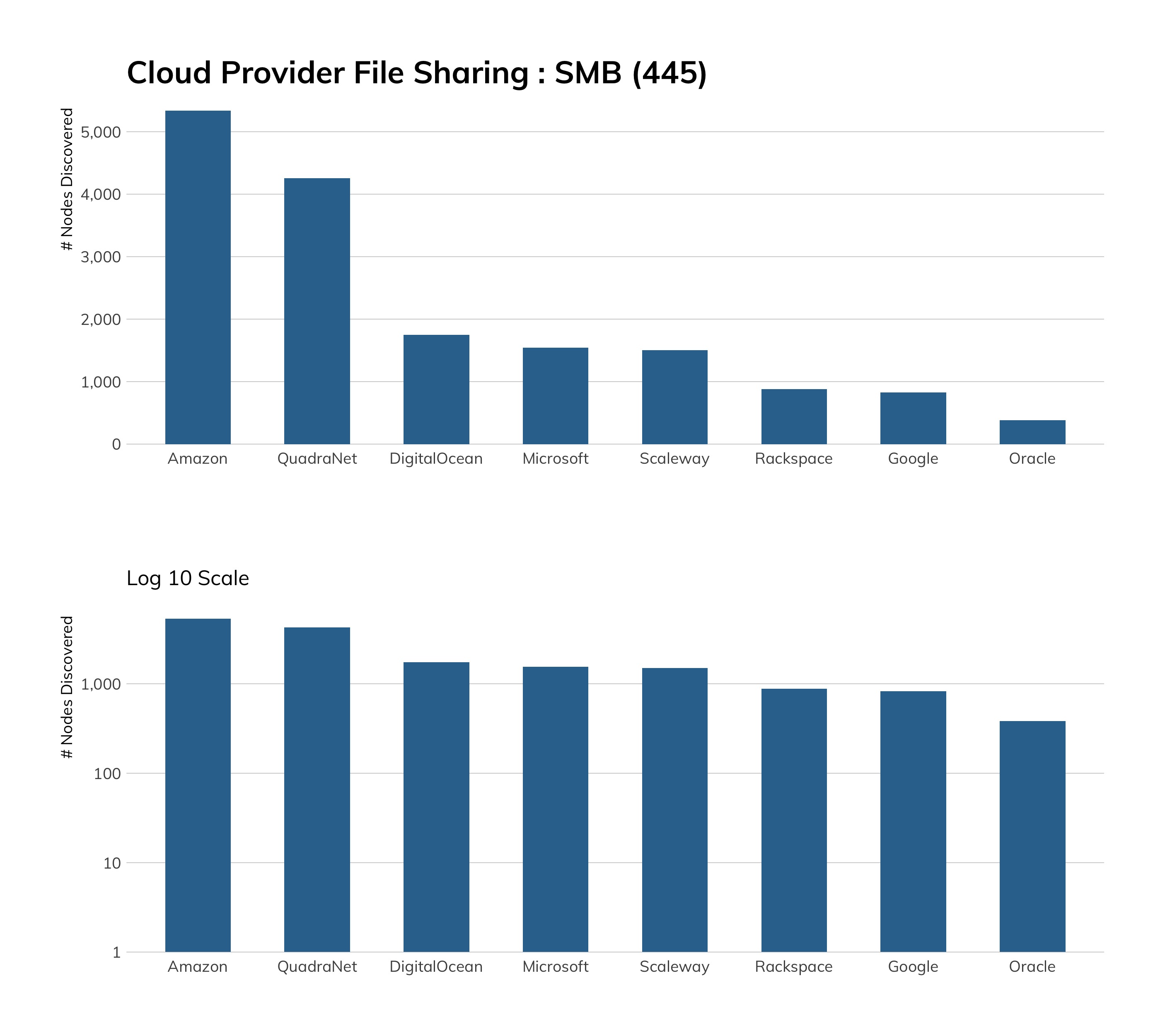

SMB (TCP/445)

Choosy worms choose SMB.

Discovery Details

SMB is a continued source of heartache and headaches for network operators the world over. Originally designed to operate on local area network protocols like NetBEUI and IPX/SPX, SMBv1 was ported to the regular TCP/IP network that the rest of the internet runs on. Since then, SMBv2 and SMBv3 have been released. While SMB is primarily associated with Windows-based computers for authentication, file sharing, print services, and process control, SMB is also maintained for non-Windows operating systems in implementations such as Samba and Netsmb. As a binary protocol with negotiable encryption capabilities, it is a complex protocol. This complexity, along with its initial proprietary nature and deep couplings with the operating system kernel, makes it an ideal field for discovering security vulnerabilities that can enable remote code execution (RCE). On top of this, the global popularity of Windows as a desktop operating system ensures it remains a popular target for bug hunters and exploiters alike.

Exposure Information

Many of the most famous vulnerabilities, exploits, and in-the-wild worms have leveraged SMB in some way. WannaCry and NotPetya are two of the most recent events that centered on SMB, both for exploitation and for transmission. Prior SMB-based attacks include the Nachi and Blaster worms (2003–2005), and future SMB-based attacks will likely include SMBGhost.[34] In addition to bugs, intended features of SMB—notably, automatic hash-passing—make it an ideal mechanism to steal password hashes from unsuspecting victims, and SMB shares (network-exposed directories of files) continue to be accidentally exposed to the internet via server mismanagement and too-easy-to-use network-attached storage (NAS) devices.

As expected, the preponderance of SMB services available on the internet are Windows-based, but the table below shows there is also a sizable minority of non-Windows SMB available.

| SMB Server Kind | Count |
|---|---|
| Windows (Server) | 298,296 |
| Linux/Unix/BSD/SunOS (Samba) | 170,095 |
| Windows (Desktop) | 110,340 |
| QNAP NAS Device | 10,164 |
| Other/Honeypot | 1,914 |
| Apple Time Capsule or macOS | 1,465 |
| Windows (Embedded) | 703 |
| Keenetic NAS | 647 |
| Printer | 386 |
| Zyxel NAS | 6 |
| EMC NAS | 5 |

As you can see, these non-Windows nodes are typically some type of NAS system used in otherwise largely Windows environments, and are responsible for maintaining nearline backup systems. While these devices are unlikely to be vulnerable to exactly the same exploits that dog Windows systems, the mere fact that these backups are exposed to the internet means that, eventually, these network operators are Going To Have A Bad Time if and when they get hit by the next wave of ransomware attacks.

Unattended Installs

Of the Windows machines exposed to the internet, we can learn a little about their provenance from the Workgroup strings that we're able to see from Sonar scanning. The list below indicates that the vast majority of these machines are using the default WORKGROUP workgroup, with others being automatically generated as part of a standard, unattended installation. In a magical world where SMB is both rare and safe to expose to the internet, we would expect those machines to be manually configured and routinely patched.

This is not the case, though—these Windows operating systems were very likely installed and configured automatically, with no special care given to their exposed-to-the-internet status, so the exposure is almost certainly accidental and not serving some special, critical business function. Additionally, these aftermarket-default WORKGROUPS are also giving away hints about which specific Windows- or Samba-based build is being used in production environments, and can give attackers ideas about targeting those systems.

Attacker’s View

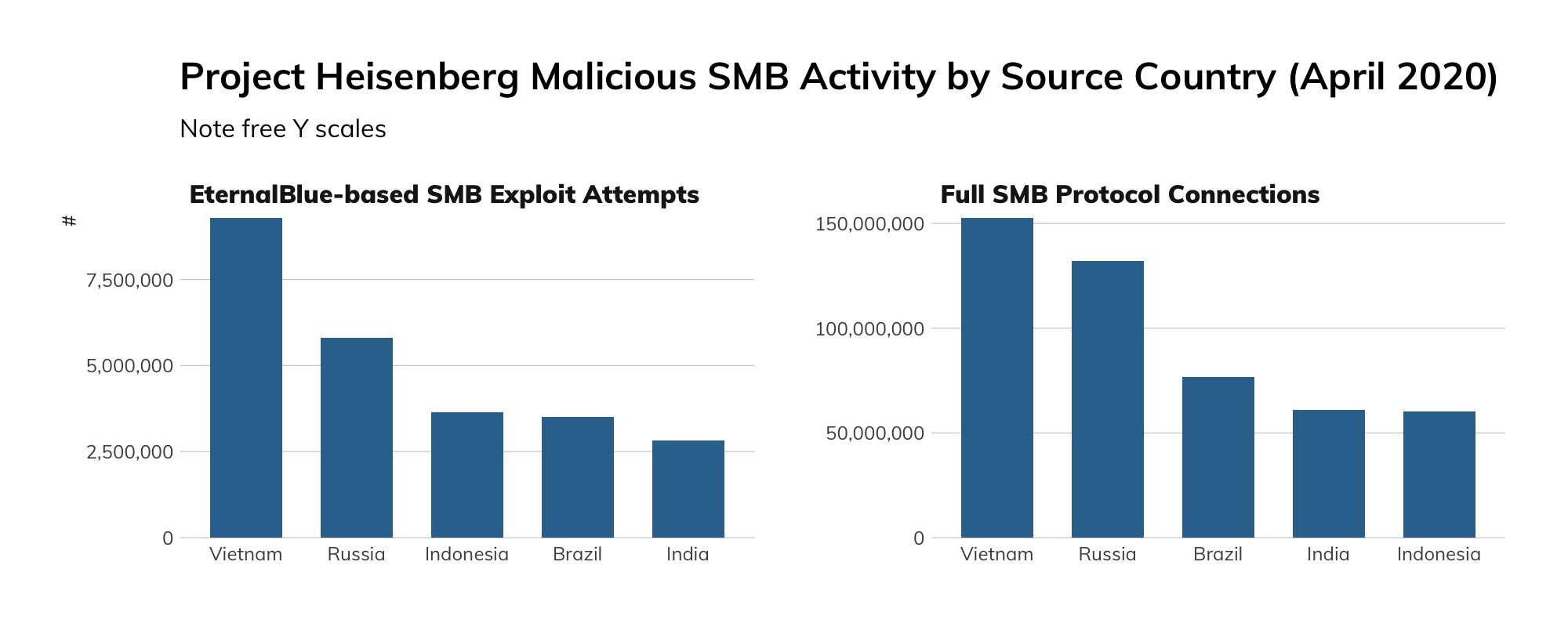

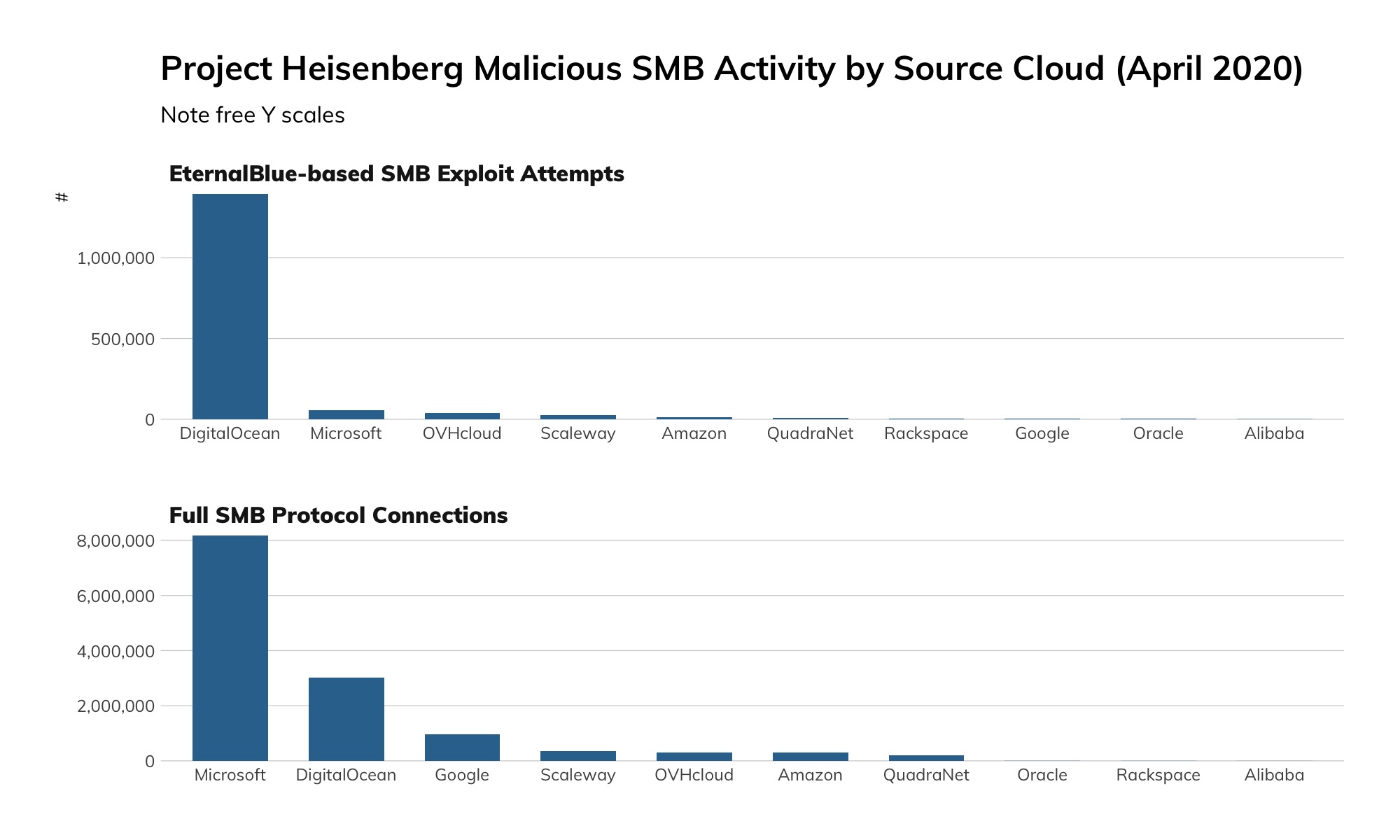

Regardless of the version and configuration of cryptographic and other security controls, SMB is inappropriate for today's internet. It is too complex to secure reliably, and critical vulnerabilities that are attractive to criminal exploitation continue to surface in the protocol. With that said, SMB continues to be a critical networking layer in office environments of any size, and since it’s native to TCP/IP, network misconfigurations can inadvertently expose SMB-based resources directly to the internet. Every organization should be continually testing its network ingress and egress filters for SMB traffic—not only to prevent outsiders from sending SMB traffic to your accidentally exposed resources, but to prevent internal users from accidentally leaking SMB authentication traffic out into the world.

Approximately 640,000 unique IP addresses visited our high-interaction SMB honeypots over the measured period, but rather than think of this as a horde of SMB criminals, we should recall that the vast majority of those connections are from machines on the internet that were, themselves, compromised. After all, that's how worms work. Very few of these connections were likely sourced from an attacker's personally owned (rather than pwned) machine. With this in mind, our honeypot traffic gives us a pretty good idea of which countries are, today, most exposed to the next SMB-based mega-worm like WannaCry: Vietnam, Russia, Indonesia, Brazil, and India are all at the top of this list.

Among the cloud providers, things are more stark. EternalBlue, the exploit underpinning WannaCry, was responsible for about 1.5 million connections to our honeypots from DigitalOcean, while Microsoft Azure was the source of about 8 million non-EternalBlue connections. About 15% of those Azure connections, or 1.2 million or so, were accidental connections due to a misconfiguration at Azure. We're not yet sure why this wild discrepancy in attack traffic versus accidental traffic exists between DigitalOcean and Azure, but we suspect that Microsoft is much more aggressive about making sure the default offerings at Azure are patched against MS17-010, while DigitalOcean appears to be more hands-off about patch enforcement, leaving routine maintenance to its user base.

Our Advice

IT and IT security teams should prohibit SMB access to, or from, their organization over anything but VPN-connected networks, and regularly scan their known, externally facing IP address space for misconfigured SMB servers.

Cloud providers should prohibit SMB access to cloud resources, and at the very least, routinely scrutinize SMB access to outside resources. Given that approximately 15% of our inbound honeypot connections over SMB from Microsoft Azure are actually misconfigurations, rather than attacks or research probes, Azure should be especially aware of this common flaw and make it difficult to impossible to accidentally expose SMB at the levels that are evident today.

Government cybersecurity agencies should be acutely aware of their own national exposure to SMB, and institute routine scanning and notification programs to shut down SMB access wherever it surfaces. This is especially true for those countries that are at the top of our honeypot source list.
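The advice to regularly scan externally facing address space for exposed SMB can be sketched as a simple TCP/445 reachability check. This is a minimal illustration, not a replacement for a real scanner: the function names are hypothetical, and a successful TCP connection only shows that the port answers, not which SMB dialect or configuration sits behind it.

```python
import socket


def tcp_port_open(host: str, port: int = 445, timeout: float = 3.0) -> bool:
    """Return True if a TCP connection to host:port succeeds within the timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts, and unreachable hosts.
        return False


def find_exposed_smb(hosts, port: int = 445, timeout: float = 3.0):
    """Return the subset of hosts that accept TCP connections on the SMB port."""
    return [host for host in hosts if tcp_port_open(host, port, timeout)]
```

Run from outside the network perimeter against addresses you own, for example `find_exposed_smb(["203.0.113.10", "203.0.113.11"])` (documentation-range placeholders), an empty result is the outcome the ingress filter should produce.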

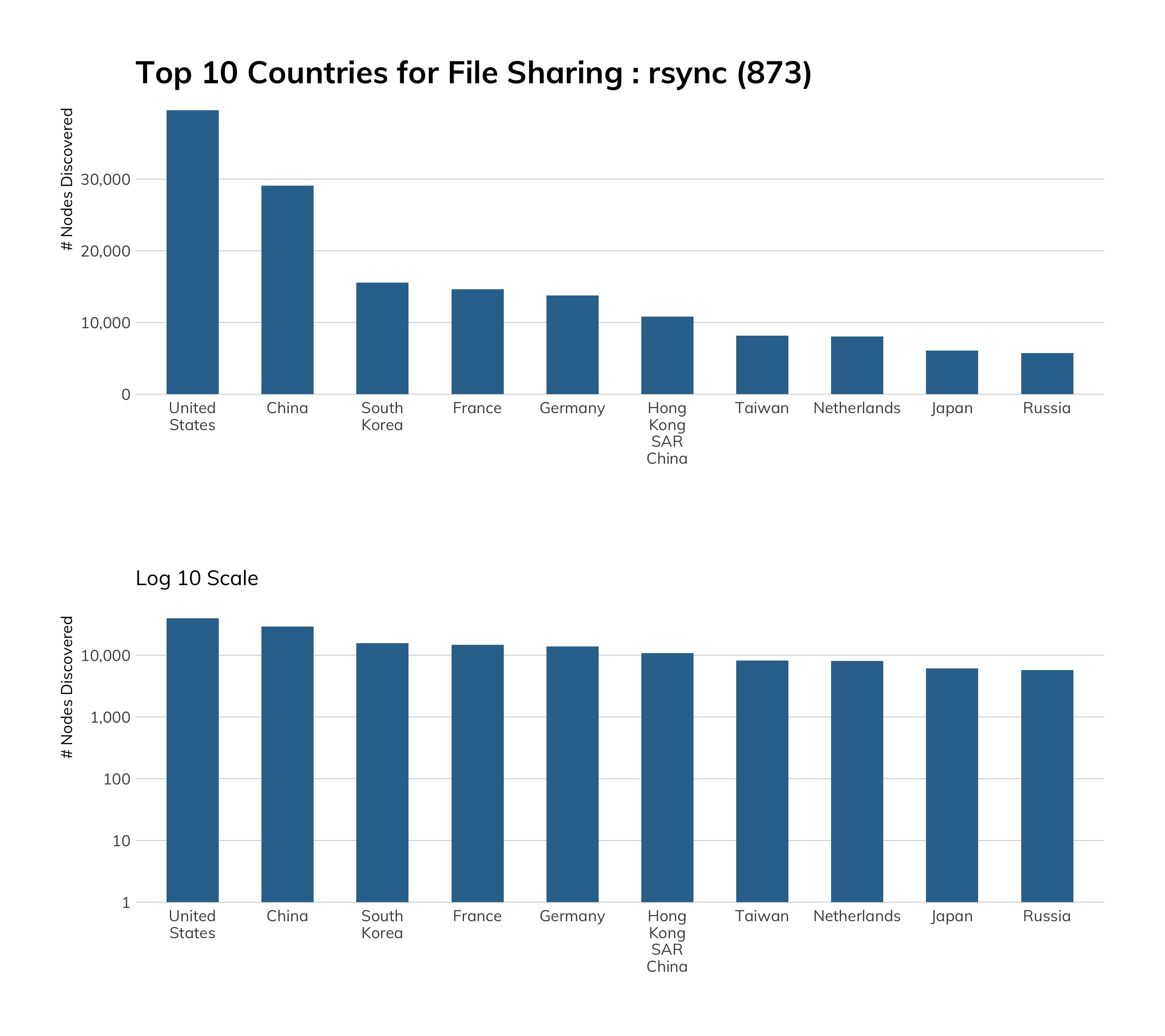

rsync (873)

Almost an accident of early internet engineering.

Discovery Details

The rsync service is almost old enough to rent a car in the U.S., given that it turned 24 this year. Unlike many network protocols, rsync has no IETF RFC for the protocol itself, but when uniform resource identifiers (URIs, but you can think of them as URLs and we won’t judge you) became a thing, this venerable protocol achieved at least partial RFC status for its URI scheme.[35] It does have documentation[36] and is widely used on the modern internet for keeping software and operating system mirrors populated, populating filesystems, performing backups, and (as we’ll see later) exposing a not-insubstantial number of home network-attached storage (NAS) devices.

Rapid7's Jon Hart and Shan Sikdar took an in-depth look at rsync exposure back in 2018,[37] and only a tiny bit has changed since then, so we’ll focus more on the changes than reinvent the wheel.

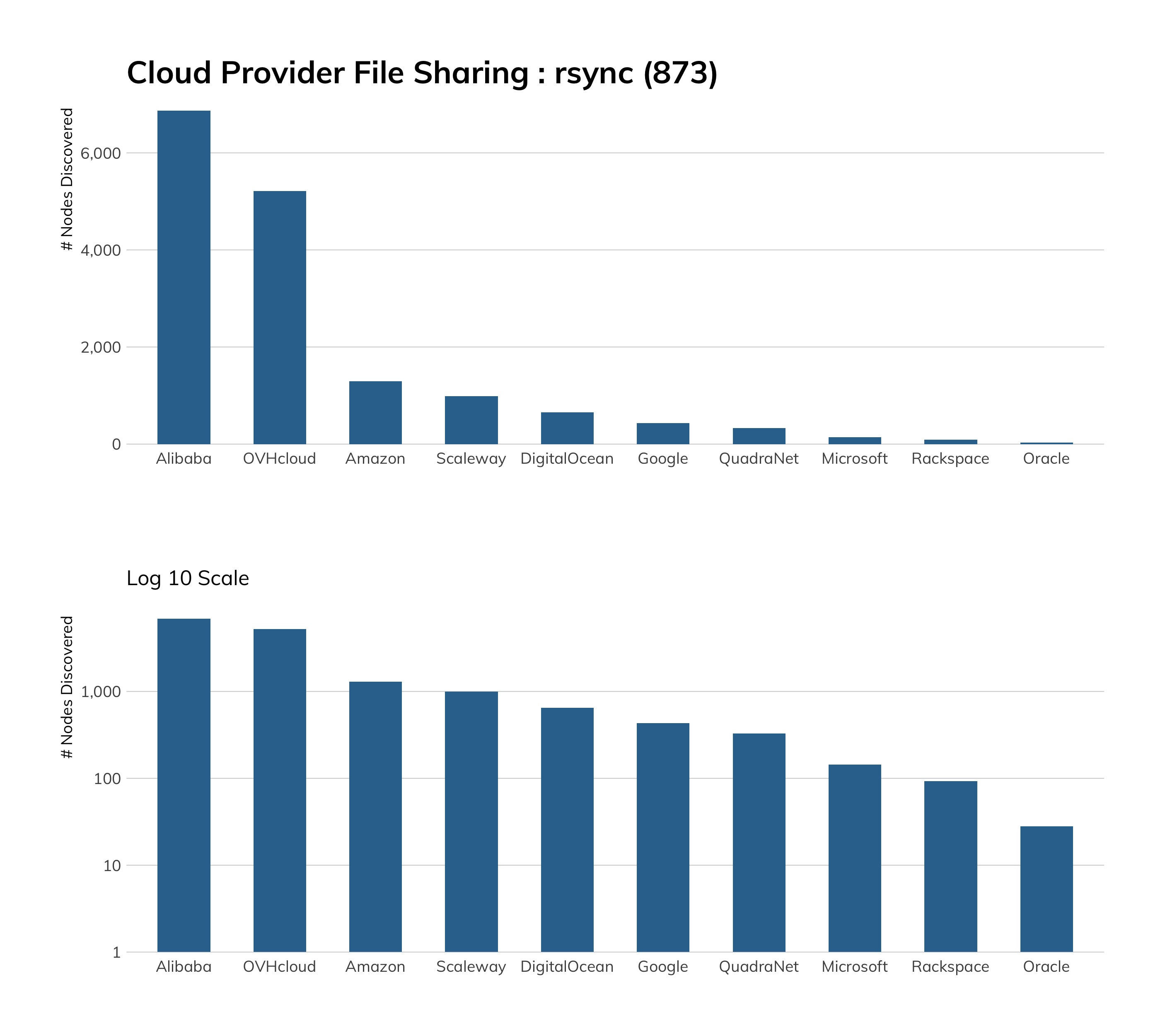

If you’re wondering why Alibaba of China and OVH of France both have a more significant rsync presence than the other providers, look no further than their documentation[38] and services[39], which most folks new to “the cloud” will rely on to get jump-started. Unfortunately, both clouds default to plain ol’ rsync but do at least mention that you can run it more securely through an SSH tunnel.

Exposure Information

Over half (~57%) of the exposed rsync systems are running a 10-year-old protocol (version 30), which is an indicator that the underlying operating systems are potentially over 10 years old and riddled with other vulnerabilities. Protocol version 31 is the most current, but that label can be a bit misleading, since version 31 was released in 2013. Outside of versions 30 and 31, there is a steep drop-off to the truly ancient versions of the protocol.

| rsync Protocol Version | Count |
|---|---|
| 30 | 117,629 |
| 31 | 80,391 |
| 29 | 7,596 |
| 26 | 2,654 |
| 28 | 510 |
| 31.14[40] | 45 |
| 27 | 36 |
| 24 | 8 |
| 34 | 7 |
| 32 | 2 |
| 20 | 1 |
| 25 | 1 |
| 29 | 1 |
| 31.12 | 1 |
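The share of each protocol version can be recomputed directly from the counts in the table above. The sketch below is only a worked check of the arithmetic: the row list is transcribed from the table (including its repeated version 29 row), and the function name is illustrative. Version 30 works out to roughly 56% of the rows shown, in line with the "over half" figure quoted in the text; the small difference from ~57% may come from rounding or from a slightly different denominator in the original analysis.

```python
# (protocol version, count) rows transcribed from the table above.
ROWS = [
    ("30", 117_629), ("31", 80_391), ("29", 7_596), ("26", 2_654),
    ("28", 510), ("31.14", 45), ("27", 36), ("24", 8), ("34", 7),
    ("32", 2), ("20", 1), ("25", 1), ("29", 1), ("31.12", 1),
]


def version_shares(rows):
    """Return ({version: fraction of total}, total), merging repeated versions."""
    total = sum(count for _, count in rows)
    merged = {}
    for version, count in rows:
        merged[version] = merged.get(version, 0) + count
    return {version: count / total for version, count in merged.items()}, total


shares, total = version_shares(ROWS)
print(f"total exposed rsync endpoints in table: {total:,}")
print(f"version 30 share: {shares['30']:.1%}")
print(f"versions 30 and 31 combined: {shares['30'] + shares['31']:.1%}")
```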

We need to deviate a bit from some of the previous sections, since over 20% of rsync exposure lies in residential ISP space (top 25 providers listed in Table 20). Most of these are NAS devices with brand names you’ve likely seen in online stores or big-box electronics retailers. We’ll see why this is important in the next section.

| ISP | Count |
|---|---|
| HiNet | 5,316 |
| Korea Telecom | 5,300 |
| China Unicom | 4,583 |
| China Telecom | 4,528 |
| Vodafone | 4,389 |
| Orange | 4,274 |
| Deutsche Telekom | 2,245 |
| Comcast | 1,994 |
| Tata Communications | 1,355 |
| Charter Communications | 1,306 |
| Swisscom | 810 |
| Verizon | 732 |
| NTT Communications | 727 |
| Telia | 643 |
| Virgin Media | 626 |
| China Mobile | 550 |
| Cogent | 489 |
| Rostelecom | 473 |
| AT&T | 412 |
| Rogers | 394 |
| Cox Communications | 383 |
| CenturyLink | 193 |
| Hurricane Electric | 165 |
| Level3 | 102 |
| China Tietong | 92 |
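As a rough cross-check of the "over 20%" figure, the ISP counts above can be summed and compared with the total number of rsync endpoints. The denominator used here (208,882) is an assumption: it is the sum of the counts in the protocol-version table earlier in this section, which may not be exactly the total the original analysis used.

```python
# Counts transcribed from the ISP table above.
ISP_COUNTS = {
    "HiNet": 5_316, "Korea Telecom": 5_300, "China Unicom": 4_583,
    "China Telecom": 4_528, "Vodafone": 4_389, "Orange": 4_274,
    "Deutsche Telekom": 2_245, "Comcast": 1_994, "Tata Communications": 1_355,
    "Charter Communications": 1_306, "Swisscom": 810, "Verizon": 732,
    "NTT Communications": 727, "Telia": 643, "Virgin Media": 626,
    "China Mobile": 550, "Cogent": 489, "Rostelecom": 473, "AT&T": 412,
    "Rogers": 394, "Cox Communications": 383, "CenturyLink": 193,
    "Hurricane Electric": 165, "Level3": 102, "China Tietong": 92,
}

# Assumption: total rsync endpoints, taken as the sum of the
# protocol-version table counts earlier in this section.
TOTAL_RSYNC_ENDPOINTS = 208_882


def isp_share(isp_counts, total):
    """Return (sum of listed ISP counts, that sum as a fraction of total)."""
    listed = sum(isp_counts.values())
    return listed, listed / total


listed, share = isp_share(ISP_COUNTS, TOTAL_RSYNC_ENDPOINTS)
print(f"rsync endpoints at the 25 listed ISPs: {listed:,} ({share:.1%} of total)")
```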

The core rsync service has no encryption, so all operations, including authentication, take place in cleartext. This makes it just as bad as Telnet and FTP. The protocol has been extended to support non-cleartext credentials, but all file transfers still happen in cleartext, so any prying eyes in the right network position can still grab your data.

One of the real dangers of exposing rsync, though, is that you’re letting attackers know you have files for the taking and have some operating system that may be worth taking over.

Attacker’s View

Since rsync is a big, neon sign saying “I’ve got files!” and since a large portion of exposed rsync is on residential ISP networks, attackers can correlate other services running on those IP addresses to get an idea of the type of system that’s being exposed.

Why are these residential rsync systems sitting on the internet? Vendors such as QNAP need to make these NAS devices easy to use, so they create services such as “myQNAPcloud,” which lets consumers create a “nameyouwant.myqnapcloud.com” to access their devices over the internet (meaning they have to punch at least one hole in their home router to do so). It even has handy mobile apps that let them watch saved videos and listen to saved music. Our Sonar FDNS study found over 125,000 myqnapcloud.com entries, and it is super easy for attackers to collect that data as well. That means over 125,000 QNAP NAS device users just gave attackers their home (IP) addresses and placed a quaint “Welcome” mat out for them.

Unfortunately, QNAP (along with a cadre of other NAS vendors) has a terrible track record when it comes to vulnerabilities, including seven unauthenticated, remote code execution vulnerabilities, the most recent of which is a doozy.[41] While these vulnerabilities are not exposed over rsync, the presence of rsync is, as previously noted, a leading indicator that a NAS device is very likely present.

Attackers use these devices as launch points for other malicious activities on the internet (such as DDoS attacks) and can lock up all the files on these devices for consumer-grade ransomware attacks.

As you can see, exposing one innocent service such as rsync can ultimately get you into a world of trouble.

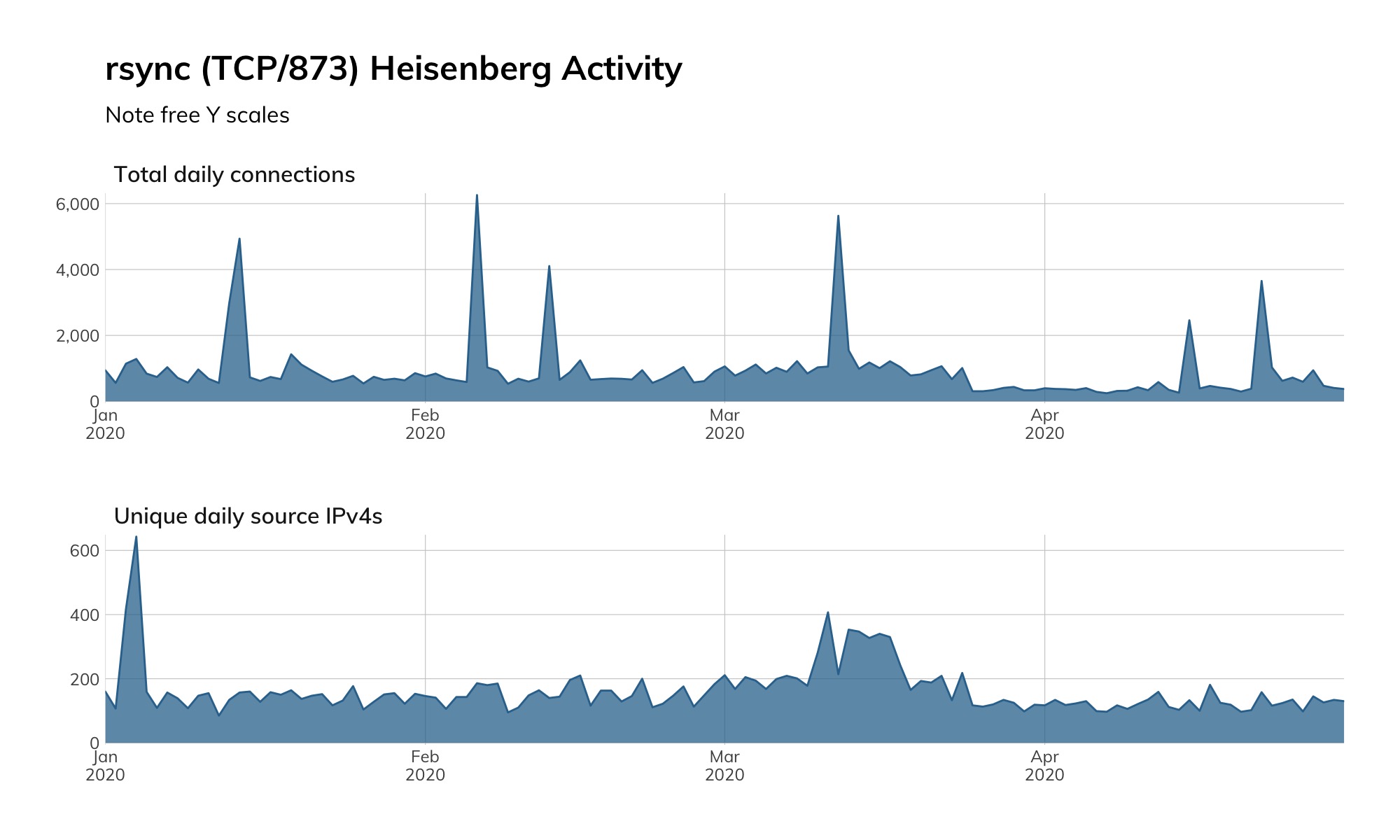

Our Heisenberg honeypot fleet does not have a high-interaction honeypot for rsync, but we do watch for all connection attempts on TCP/873 and see regular inventory scans (the blue spikes in the figure above) by researchers and attackers looking for new rsync endpoints to conquer.

Our Advice

IT and IT security teams should never use vanilla rsync and should always opt to wrap it in a tasty chocolate secure shell (i.e., only use it when tunnelling over certificate-based, authenticated SSH sessions). There is no other safe way to use rsync for confidential information. None.

Cloud providers should know better than to offer rsync services over anything but certificate-based, authenticated SSH. Existing insecure services should be phased out as soon as possible. The most obvious path toward this brave new world of encryption everywhere is to offer excellent, well-maintained, easy-to-find documentation, with examples, on how exactly to "get started" with rsync over SSH, and on the flip side, little to no documentation on how to run rsync naked (users who are determined to do the wrong thing can fight through man pages like we used to in the old days).

Government cybersecurity agencies should regularly and strongly encourage consumers, cloud providers, and organizations to only use certificate-based, authenticated SSH-tunnelled rsync services and work with cloud providers and NAS vendors to eradicate the scourge of rsync exposure from those platforms.
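The recommendation to run rsync only through SSH can be sketched as follows. The helper below builds an `rsync` command line that uses `ssh` as its remote shell (`-e`), so that both authentication and file contents travel inside the SSH session rather than over TCP/873. The function names, paths, and the choice of key-based authentication via `-i` are illustrative assumptions, not a prescribed configuration.

```python
import shlex
import subprocess


def rsync_over_ssh_command(src, user, host, dest, identity_file=None):
    """Build an rsync argv that tunnels the transfer through ssh.

    -a preserves file attributes, -z compresses in transit, and -e names
    the remote shell, so no rsync daemon on TCP/873 is involved.
    """
    ssh_cmd = ["ssh"]
    if identity_file:
        # Assumption: key-based authentication with an explicit identity file.
        ssh_cmd += ["-i", identity_file]
    return ["rsync", "-az", "-e", shlex.join(ssh_cmd), src, f"{user}@{host}:{dest}"]


def run_rsync_over_ssh(src, user, host, dest, identity_file=None):
    """Run the transfer; raises CalledProcessError if rsync exits non-zero."""
    cmd = rsync_over_ssh_command(src, user, host, dest, identity_file)
    subprocess.run(cmd, check=True)
```

For example, `rsync_over_ssh_command("backups/", "alice", "nas.example.com", "/srv/backups/")` yields a command equivalent to `rsync -az -e ssh backups/ alice@nas.example.com:/srv/backups/`. With this pattern, the rsync daemon port never needs to be reachable from the internet.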

Measuring Exposure

Email

Email, in its currently recognizable form, emerged with the SMTP standard for transferring messages between networks and computers, which was enshrined in RFC 788 in November 1981. Email had already been in use for at least a decade before that, but protocols and applications differed wildly among systems and networks. It took SMTP to unify and universalize email, and it continues to evolve today.

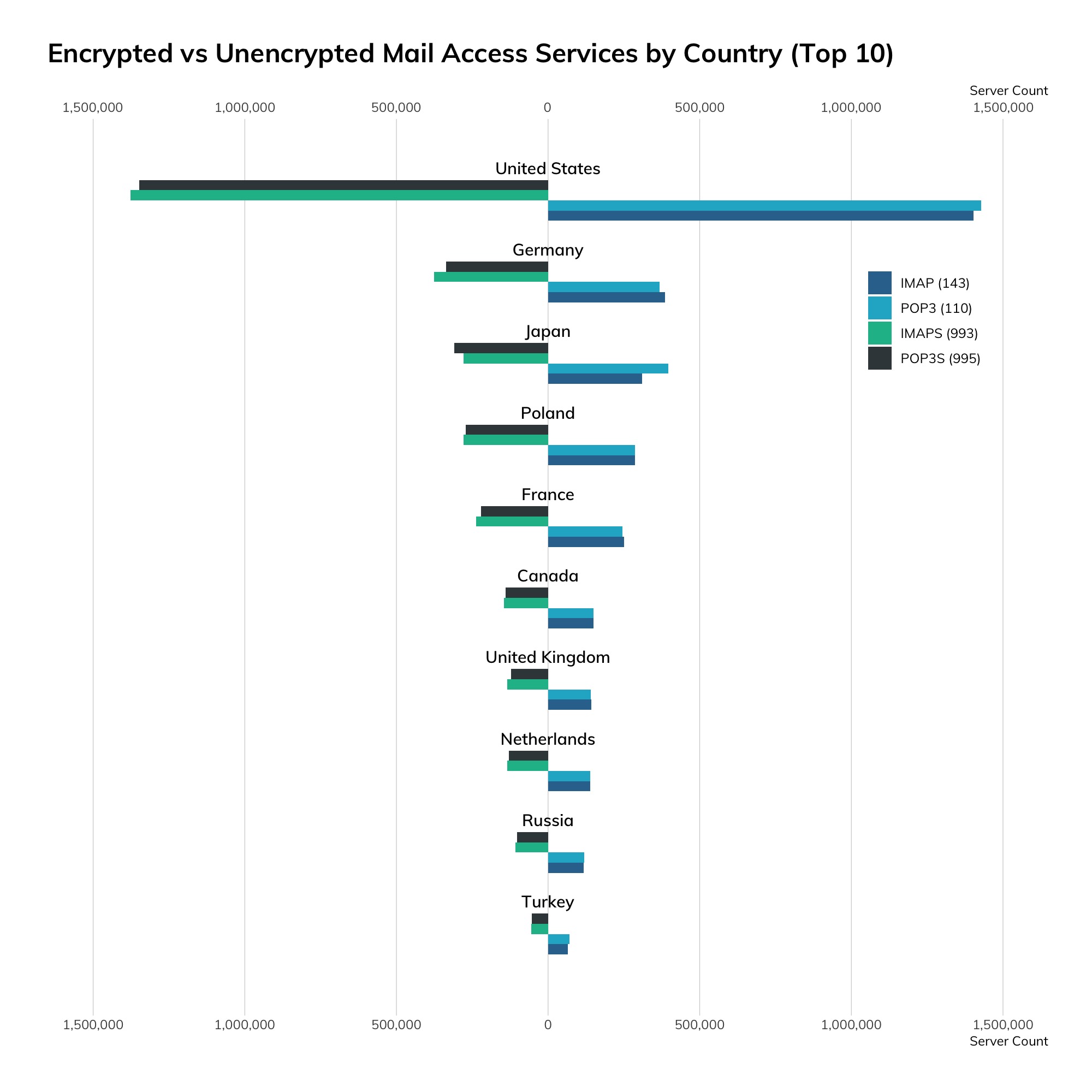

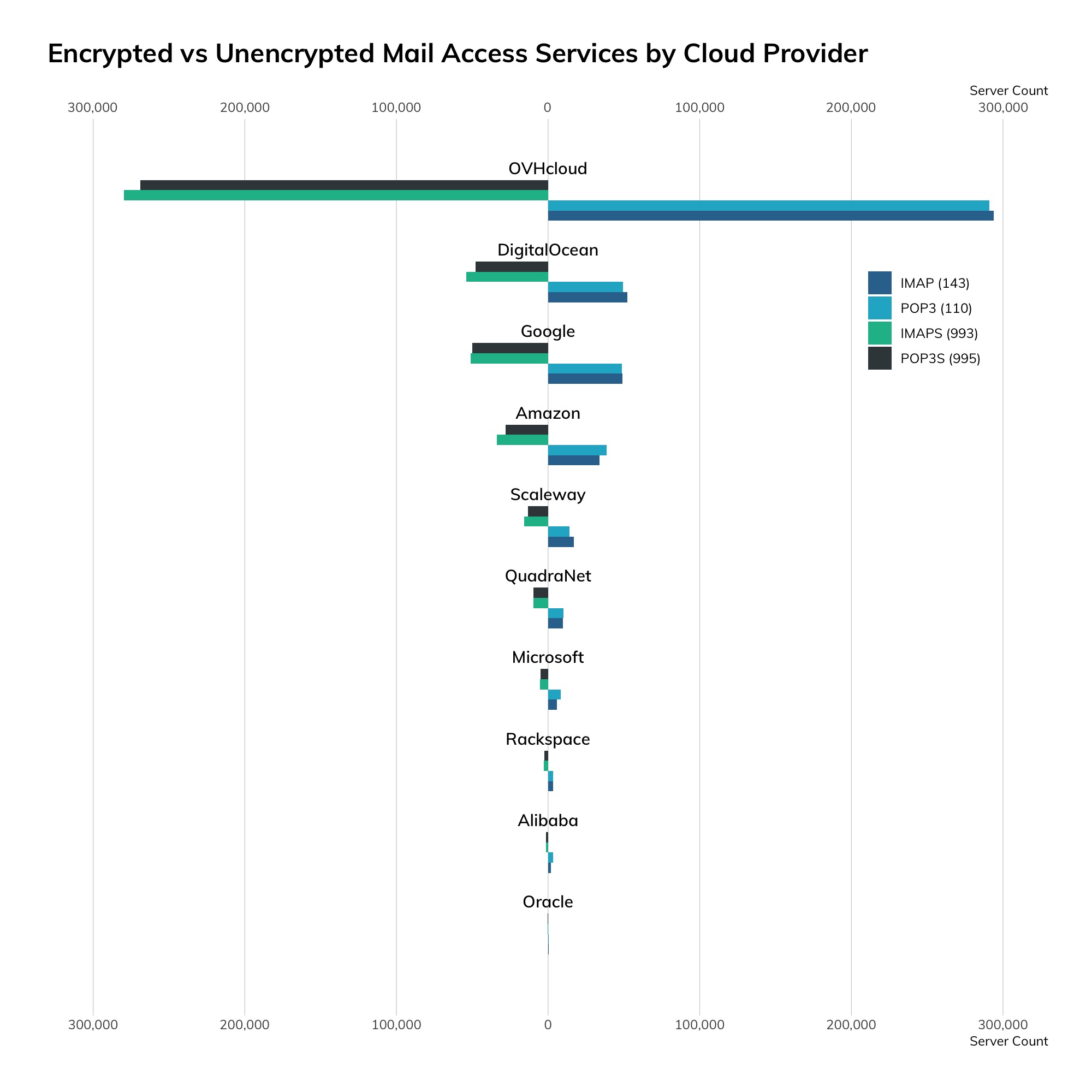

On the client side, we've seen shifting habits over time. The POP (Post Office Protocol) and IMAP (Internet Message Access Protocol) families of client-to-server protocols dominated in the 1990s. When the world moved to the World Wide Web, there was a brief decline in their use when people turned to webmail, rather than stand-alone mail user agents (MUAs). This trend reversed in the late 2000s with the rise of Apple and Android mobile devices, which tend to use IMAPv4 to interact with email servers.

SMTP (25/465/587)

The “Simple” in SMTP is intended to be ironic.

Discovery Details

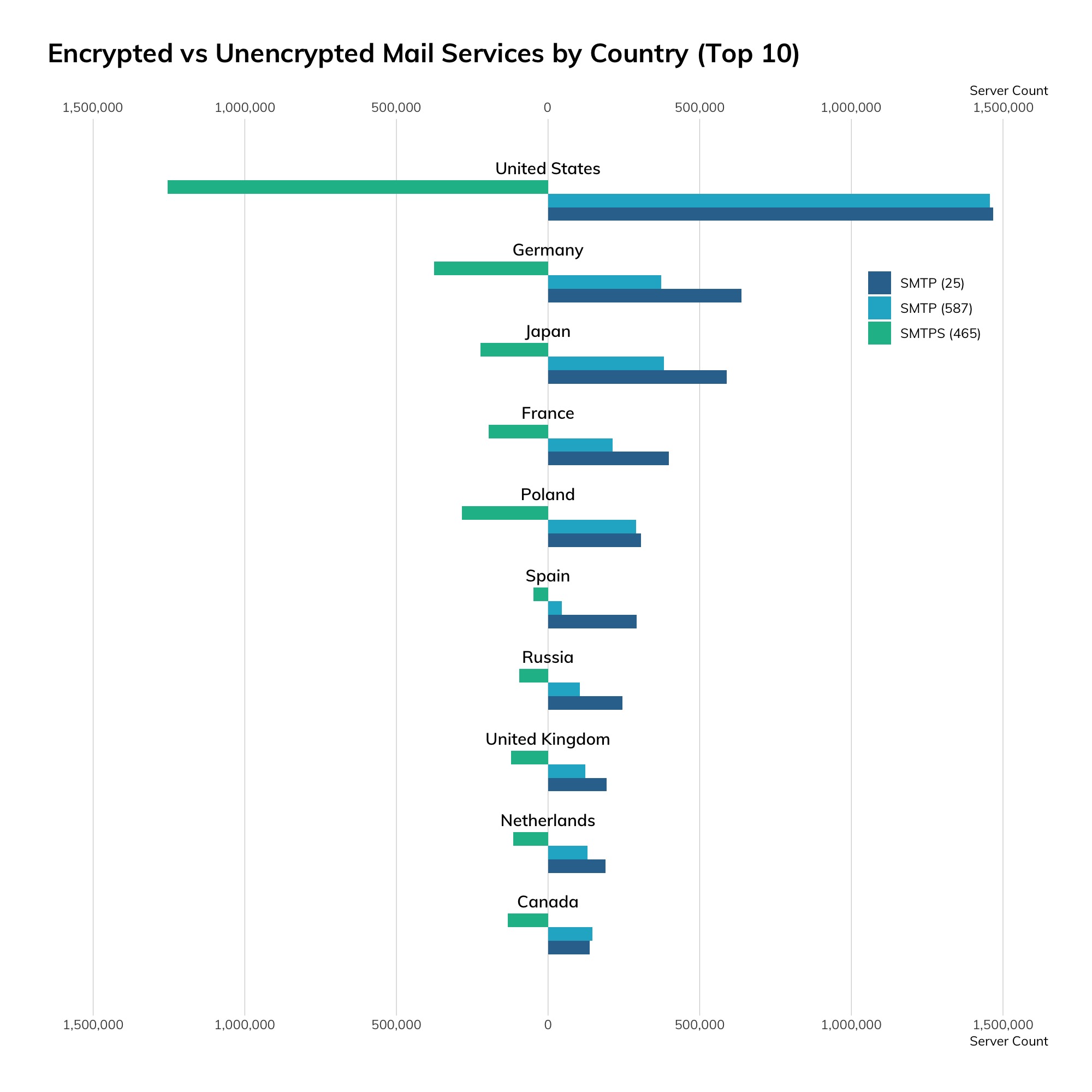

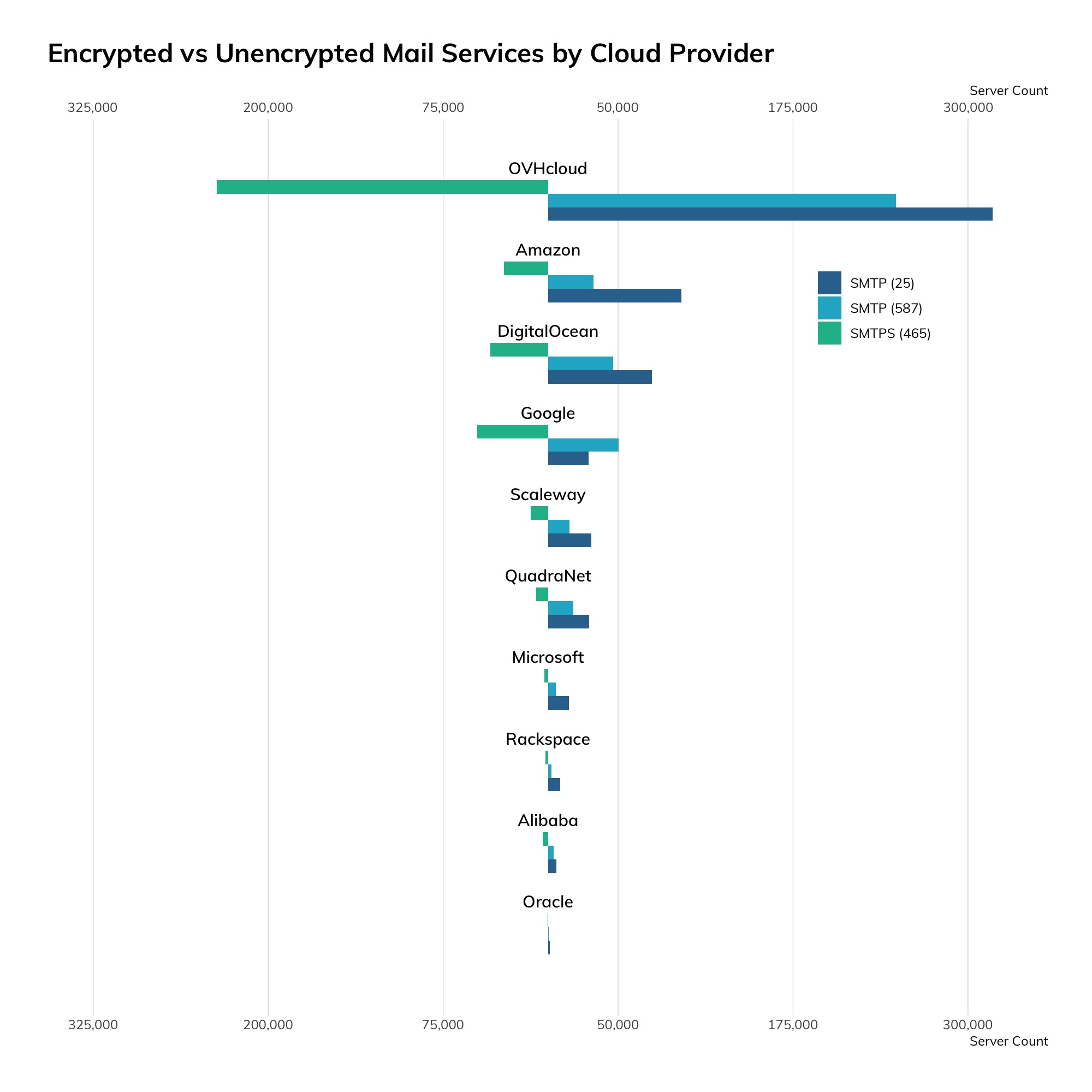

While SMTP is traditionally cleartext with an optional secure protocol negotiation called STARTTLS, we're seeing more SSL-wrapped SMTP, also known as SMTPS, in the world today. The following charts and tables illustrate the distribution of SMTP over port 25 (used for server-to-server relaying of messages), SMTP on port 587 (which is intended for message submission from mail clients), and SMTPS on port 465.

| Country | SMTP (25) | SMTP (587) | SMTPS (465) |
|---|---|---|---|
| United States | 1,467,077 | 1,456,598 | 1,253,805 |
| Germany | 637,569 | 373,266 | 375,526 |
| Japan | 589,222 | 382,133 | 222,633 |
| France | 398,390 | 212,937 | 196,177 |
| Poland | 306,368 | 289,522 | 284,297 |
| Spain | 291,844 | 44,435 | 48,694 |
| Russia | 245,814 | 104,709 | 95,972 |
| United Kingdom | 193,073 | 121,902 | 122,069 |
| Netherlands | 189,456 | 129,690 | 115,211 |
| Canada | 137,342 | 146,323 | 132,133 |

| Provider | SMTP (25) | SMTP (587) | SMTPS (465) |
|---|---|---|---|
| OVHcloud | 317,584 | 248,695 | 236,772 |
| Amazon | 95,175 | 32,579 | 31,438 |
| DigitalOcean | 74,097 | 46,521 | 41,234 |
| Scaleway | 30,876 | 15,332 | 12,594 |
| QuadraNet | 29,282 | 18,200 | 8,667 |
| | 29,030 | 50,422 | 50,561 |
| Microsoft | 14,945 | 5,576 | 2,790 |
| Rackspace | 8,459 | 2,511 | 1,841 |
| Alibaba | 5,729 | 3,863 | 3,826 |
| Oracle | 1,274 | 509 | 345 |

As far as top-level domains are concerned, we see that the vast majority of SMTP lives in dot-com land: we counted over 100 million MX records in dot-com registrations, followed by a sharp drop-off to dot-de, dot-net, and dot-org, each with about 10 million MX records.
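The difference between the port conventions measured in this section is easiest to see from the client side: on ports 25 and 587 the session starts in cleartext and upgrades with STARTTLS, while on port 465 the TLS handshake happens before any SMTP is spoken. The sketch below uses Python's standard `smtplib` and `ssl` modules; the helper names are illustrative, and refusing to continue when STARTTLS is not offered is a design choice of this example rather than anything mandated by the protocol.

```python
import smtplib
import ssl

IMPLICIT_TLS_PORTS = {465}   # SMTPS: TLS from the first byte
STARTTLS_PORTS = {25, 587}   # cleartext first, then upgrade via STARTTLS


def tls_mode_for_port(port: int) -> str:
    """Map a well-known SMTP port to the TLS style conventionally used on it."""
    if port in IMPLICIT_TLS_PORTS:
        return "implicit"
    if port in STARTTLS_PORTS:
        return "starttls"
    raise ValueError(f"no conventional TLS mode known for port {port}")


def open_smtp(host: str, port: int, timeout: float = 10.0):
    """Open an encrypted SMTP session, refusing to continue in cleartext."""
    context = ssl.create_default_context()
    if tls_mode_for_port(port) == "implicit":
        return smtplib.SMTP_SSL(host, port, timeout=timeout, context=context)
    client = smtplib.SMTP(host, port, timeout=timeout)
    client.ehlo()
    if not client.has_extn("starttls"):
        client.quit()
        raise RuntimeError(f"{host}:{port} does not offer STARTTLS")
    client.starttls(context=context)
    client.ehlo()  # re-identify over the now-encrypted channel
    return client
```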

Exposure Information

There are dozens of SMTP servers to choose from, each with its own idiosyncratic methods of configuration, spam filtering, and security. The top SMTP server we're able to fingerprint is Postfix, with over a million and a half installs, followed by Exim, Exchange, and trusty Sendmail. Table 23 contains the complete list of every SMTP server we positively identified. Mail administrators will recognize the vestiges of old, little-used mail servers, such as the venerable Lotus Domino and ZMailer. If these are your mail servers, think long and hard about why you’re still running them as opposed to simply farming this thankless chore out to a dedicated mail service provider.

Finally, let's take a quick look at the Exim mail server. Like most other popular software on the internet, we can find all sorts of versions. Unlike other popular software, Exim versioning moves pretty quickly: the current version of Exim at the time of scanning was 4.93, and it had already incremented to 4.94 by the time of publication. However, the gap in deployment between the latest version (4.93) and the next-to-latest (4.92.x) is in the 100,000 range, and given the intense scrutiny afforded to Exim by national intelligence agencies, this delta can be pretty troubling. It’s so troubling that the American National Security Agency issued an advisory urging Exim administrators to patch and upgrade as soon as possible to avoid exploitation by the “Sandworm team.”[42] Specifically, the vulnerability exploited was CVE-2019-10149, which affects versions 4.87 through 4.91. At the time of our scans, we found approximately 87,500 such servers exposed to the internet. This is only about a fifth of all Exim servers out there, but exposed vulnerabilities in mail servers tend to shoot to the top of any list of “must patch now” vulns.
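Checking a fingerprinted Exim version against the affected range for CVE-2019-10149 (4.87 through 4.91, as stated above) comes down to a comparison on the major and minor version numbers. The sketch below is illustrative only: the function names are hypothetical, and it assumes the version string begins with `major.minor`, ignoring any patch suffix that follows.

```python
import re


def exim_major_minor(version: str):
    """Extract (major, minor) from strings such as '4.91', '4.92.3', or '4.90_1'."""
    match = re.match(r"\s*(\d+)\.(\d+)", version)
    if not match:
        raise ValueError(f"unrecognized Exim version string: {version!r}")
    return int(match.group(1)), int(match.group(2))


def in_cve_2019_10149_range(version: str) -> bool:
    """True if the version falls in the 4.87 through 4.91 range named in the text."""
    return (4, 87) <= exim_major_minor(version) <= (4, 91)


for banner_version in ["4.86", "4.87", "4.90_1", "4.91", "4.92.3", "4.93"]:
    status = "affected range" if in_cve_2019_10149_range(banner_version) else "outside range"
    print(f"Exim {banner_version}: {status}")
```

A version number taken from a banner is only a hint, since distributions may backport fixes without changing the reported version, so a match here is a reason to investigate rather than proof of exposure.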

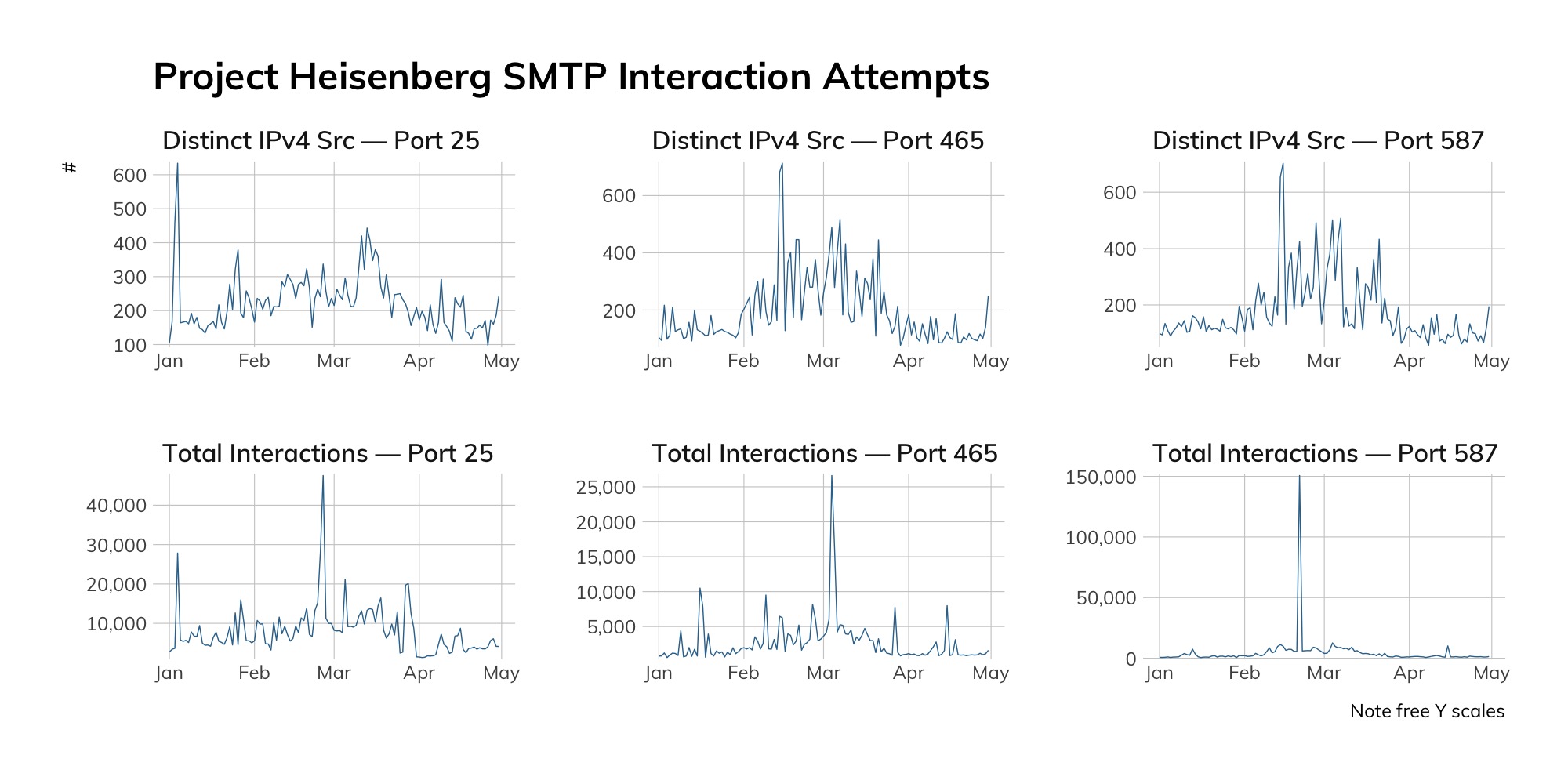

Attacker’s View

Given the high value attackers tend to assign to SMTP vulnerabilities, it’s no surprise that we see fairly consistent scanning action among threat actors in our SMTP honeypots.

| Date | SMTP Port | Count | Percentage | Provider |

|---|---|---|---|---|

|